Efficient, scalable application deployment matters to every team that ships software. Docker simplifies the process of packaging, distributing, and deploying applications across diverse environments. In this article, we will look at Docker’s fundamental concepts, workflow, benefits, best practices, and practical examples to help you get started with containerization.

What is Docker?

Docker is an open-source platform that enables developers to automate the deployment and management of applications using containers. Containers are lightweight, isolated environments that package applications and their dependencies, ensuring consistent behavior across different systems and eliminating the notorious “it works on my machine” problem. By encapsulating applications in containers, Docker provides a portable and predictable deployment model. To dive deeper into Docker’s capabilities, you can refer to the official Docker Wiki.

How Does Docker Work?

Docker follows a client-server architecture: the Docker client sends commands to the Docker daemon, which builds images and runs and manages containers. Let’s explore the key components and workflow of Docker:

Docker Images

Docker images are the building blocks of containerized applications. An image is a lightweight, standalone, and executable package that contains everything needed to run an application, including the code, runtime, system tools, libraries, and configurations. Docker images are created using a declarative text file called a Dockerfile. The Dockerfile specifies the instructions to build the image. These instructions include pulling base images, adding application code, setting up dependencies, and configuring the runtime environment. By following best practices in creating efficient and secure Dockerfiles, you can optimize the image creation process.

Here is a small example of what a Dockerfile could look like:

# Use a base image with Node.js pre-installed
FROM node:14-alpine

# Set the working directory inside the container
WORKDIR /app

# Copy package.json and package-lock.json to the container
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy the application code to the container
COPY . .

# Expose a port for the application to listen on
EXPOSE 3000

# Define the command to run the application when the container starts
CMD ["node", "app.js"]

Containers

Containers are the running instances of Docker images. They provide an isolated environment where applications can run without interfering with other processes or applications on the host system. Containers leverage the host system’s kernel, making them lightweight and efficient. With Docker, you can easily manage containers, including starting, stopping, pausing, and removing them. Docker offers a command-line interface (CLI) and APIs for container management.

To run a container from an image, you can use the following example command:

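docker run -d -p 8080:80 nginx

In this command, we run a container based on the NGINX image in detached mode (-d). The -p 8080:80 option maps port 8080 on the host system to port 80 inside the container, so the NGINX web server running in the container is reachable on port 8080 of the host.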
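The management operations mentioned earlier (starting, stopping, pausing, and removing) each map to a Docker CLI subcommand. The following is a minimal sketch, wrapped in a shell function so that nothing runs until you call it. The function name and the container name passed to it are assumptions chosen for illustration.

```shell
# Walk one NGINX container through its lifecycle.
# $1 is a container name of your choosing (hypothetical, e.g. "web").
container_lifecycle() {
  name="$1"
  # Create and start the container in the background
  docker run -d --name "$name" -p 8080:80 nginx
  # List it among the running containers
  docker ps --filter "name=$name"
  # Suspend all processes in the container, then resume them
  docker pause "$name"
  docker unpause "$name"
  # Stop the container, then start the same container again
  docker stop "$name"
  docker start "$name"
  # Stop it once more and remove it
  docker stop "$name"
  docker rm "$name"
}
```

Calling `container_lifecycle web` runs the steps in order against a container named web.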

If you want to learn more about Docker volumes, containers, and images, and how to remove or manage them, check out this blog post.

Benefits of Using Docker

Docker offers several benefits that revolutionize the way applications are developed, deployed, and managed:

Portability

Docker ensures portability by packaging applications and their dependencies into containers. With containers, you can achieve consistent behavior across different environments, eliminating the “it works on my machine” dilemma. Containers encapsulate the application and its dependencies, making it easy to deploy and run consistently on different machines, operating systems, and cloud platforms.

Scalability

Docker simplifies application scaling through orchestration tools like Docker Swarm and Kubernetes. These tools help manage a cluster of containers, enabling easy scaling, load balancing, and fault tolerance. With Docker Swarm, you can create a swarm of Docker nodes that work together as a single virtual system. Kubernetes, on the other hand, provides a powerful platform for container orchestration, automating deployment, scaling, and management of containerized applications across clusters of hosts.

Rapid Deployment

Docker streamlines the deployment process, allowing applications to be quickly deployed on any host system with Docker installed. Once an image is built, it can be easily distributed and deployed, reducing the time and effort required for setup and configuration. Docker simplifies the management of application dependencies, ensuring that the required libraries, frameworks, and tools are available within the container.

To build and deploy a Docker image, you can use the following example commands:

docker build -t myapp .

docker run -d myapp

In these commands, we build a Docker image tagged as “myapp” based on the Dockerfile in the current directory. Then, we run a container from the “myapp” image in detached mode (-d), allowing the container to run in the background.
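One detail is worth noting here: the EXPOSE instruction in a Dockerfile documents a port but does not publish it, so a container started without -p is not reachable from the host. The sketch below assumes the Node.js Dockerfile shown earlier (an app listening on port 3000); the function name and the version tag are hypothetical.

```shell
# Build a versioned image and run it with its port published.
# $1 is a version tag of your choosing (hypothetical, e.g. "1.0").
build_and_run() {
  tag="$1"
  # Build from the Dockerfile in the current directory
  docker build -t "myapp:$tag" .
  # Map host port 3000 to container port 3000
  docker run -d --name myapp -p 3000:3000 "myapp:$tag"
  # Print what the application has logged so far
  docker logs myapp
}
```

After `build_and_run 1.0`, the application should answer on port 3000 of the host, provided it really listens on that port inside the container.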

Resource Efficiency

Containers are lightweight and share the host system’s kernel, resulting in optimized resource usage compared to traditional virtual machines. By leveraging containerization, you can achieve higher server density, reducing infrastructure costs and maximizing resource utilization. Docker’s efficient resource allocation enables you to run multiple containers on a single host without sacrificing performance.
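To keep several containers on one host from starving each other, you can cap what each one may use. This is a small sketch using the --memory and --cpus options of docker run; the function name, the container name web-limited, and the example values are assumptions.

```shell
# Start NGINX with explicit resource limits.
# $1 = memory limit (e.g. "256m"), $2 = CPU limit (e.g. "0.5" for half a core)
run_limited() {
  docker run -d --name web-limited --memory "$1" --cpus "$2" nginx
  # Print a one-off snapshot of CPU and memory usage instead of a live stream
  docker stats --no-stream web-limited
}
```

For example, `run_limited 256m 0.5` limits the container to 256 MB of memory and half a CPU core.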

Dependency Management

Docker simplifies application deployment by isolating dependencies within containers. Developers can specify the required versions of libraries, frameworks, and tools, ensuring consistency and avoiding conflicts with other applications on the host system. By encapsulating dependencies within containers, Docker eliminates the need for complex setup and configuration, providing a self-contained environment for applications.

Docker Ecosystem

Docker has spawned a vibrant ecosystem of tools and services that enhance its functionalities and provide additional capabilities for managing containerized applications. Some notable components of the Docker ecosystem include:

Docker Swarm

Docker Swarm is a native clustering and orchestration solution for Docker. It enables you to create a swarm of Docker nodes that work together as a single virtual system. Swarm provides features such as service discovery, load balancing, rolling updates, and fault tolerance, making it easier to manage and scale containerized applications. You can learn more about Docker Swarm from the official Docker Swarm documentation.
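As a rough illustration of these features, the sketch below turns the current host into a single-node swarm and runs a replicated NGINX service on it. The function name and service name are hypothetical, and on hosts with several network interfaces docker swarm init may additionally need an --advertise-addr option.

```shell
# Create a single-node swarm and run a replicated service on it.
# $1 = service name (hypothetical, e.g. "web"), $2 = initial number of replicas
swarm_demo() {
  service="$1"
  replicas="$2"
  # Make this host a swarm manager
  docker swarm init
  # Start the service; the published port is load-balanced across replicas
  docker service create --name "$service" --replicas "$replicas" -p 8080:80 nginx
  # Compare desired and running replica counts
  docker service ls
  # Scale out to five replicas
  docker service scale "$service=5"
  # Rolling update to a different image tag
  docker service update --image nginx:alpine "$service"
}
```

A call such as `swarm_demo web 3` starts three replicas, scales them to five, and then rolls out the new image.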

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides advanced features for service discovery, load balancing, self-healing, and scaling based on resource utilization. Kubernetes has become the de facto standard for managing containers at scale. To learn more about Kubernetes, you can refer to the official Kubernetes documentation.
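For comparison, here is a minimal sketch of the same idea with kubectl. It assumes you already have access to a running cluster and that kubectl is configured for it; the function name and deployment name are hypothetical, and the autoscaling step only takes effect if the cluster collects resource metrics.

```shell
# Deploy, scale, and expose NGINX on an existing Kubernetes cluster.
# $1 = deployment name (hypothetical, e.g. "web")
k8s_demo() {
  name="$1"
  # Create a deployment that runs the nginx image
  kubectl create deployment "$name" --image=nginx
  # Run three replicas of it
  kubectl scale deployment "$name" --replicas=3
  # Make it reachable through a service on a node port
  kubectl expose deployment "$name" --port=80 --type=NodePort
  # Scale between 3 and 10 replicas based on CPU utilization
  kubectl autoscale deployment "$name" --min=3 --max=10 --cpu-percent=80
  # List the resulting pods
  kubectl get pods
}
```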

Additional Tools and Services

The Docker ecosystem includes a wide range of tools and services that complement Docker’s capabilities. Docker Compose allows you to define and manage multi-container applications using a YAML-based configuration file. Docker Registry provides a centralized repository for storing and distributing Docker images, allowing you to host private images securely. Docker Machine simplified the provisioning and management of Docker hosts on various platforms, although it has since been deprecated. These additional tools and services expand the functionality and flexibility of Docker for various deployment scenarios.
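To give an idea of what such a Compose file looks like, the sketch below writes a minimal one with two services. The function name, the service names web and cache, and the choice of images are assumptions made for illustration.

```shell
# Write a minimal Compose file with two services into directory $1.
write_compose_file() {
  cat > "$1/docker-compose.yml" <<'EOF'
services:
  web:
    image: nginx
    ports:
      - "8080:80"   # host port 8080 -> container port 80
    depends_on:
      - cache       # start the cache service before web
  cache:
    image: redis
EOF
}
```

Running `write_compose_file .` followed by `docker-compose up -d` starts both services in the background, and `docker-compose down` stops and removes them again.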

Exploring the Docker ecosystem and integrating the right tools and services into your workflows can further enhance the power and efficiency of containerized application deployment.

Best Practices for Docker

To make the most of Docker and ensure smooth application deployment, consider the following best practices:

- Optimize Dockerfile creation: Follow best practices in creating efficient and secure Dockerfiles. Minimize the number of layers, use multi-stage builds where appropriate, and leverage caching mechanisms to speed up image builds.

- Image size optimization: Keep Docker image sizes as small as possible by removing unnecessary files and dependencies. Smaller images result in faster image pulls and improved resource utilization.

- Secure container environments: Implement security measures such as scanning images for vulnerabilities, using secure base images, and following container security best practices to protect your applications and data.

- Leverage networking features: Familiarize yourself with Docker’s networking capabilities, such as creating networks, linking containers, and exposing ports. Effectively utilize networking features to enable communication between containers and external systems.

- Container orchestration: Explore container orchestration platforms like Docker Swarm or Kubernetes to simplify the management, scaling, and monitoring of containerized applications. These platforms provide advanced features for high availability, load balancing, and automatic scaling.

- Monitoring and logging: Implement proper monitoring and logging solutions to gain insights into container performance, resource usage, and application behavior. Tools like the docker stats command, Prometheus, and the ELK Stack can help in monitoring and analyzing containerized environments.
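The first two practices can be illustrated with a multi-stage build and a .dockerignore file. The sketch below writes both files for the Node.js example app used earlier. It assumes the project has a package-lock.json (required by npm ci) and needs no compile step; the function name and the stage name deps are hypothetical.

```shell
# Write a multi-stage Dockerfile and a .dockerignore into directory $1.
write_build_files() {
  cat > "$1/Dockerfile" <<'EOF'
# Stage 1: install production dependencies only
FROM node:14-alpine AS deps
WORKDIR /app
COPY package*.json ./
RUN npm ci --only=production

# Stage 2: final image with the app and its installed dependencies
FROM node:14-alpine
WORKDIR /app
COPY --from=deps /app/node_modules ./node_modules
COPY . .
# Run as the unprivileged user that the official Node.js images provide
USER node
EXPOSE 3000
CMD ["node", "app.js"]
EOF
  # Keep local dependencies, VCS data, and logs out of the build context
  cat > "$1/.dockerignore" <<'EOF'
node_modules
.git
*.log
EOF
}
```

Because package*.json is copied before the rest of the code, the dependency layer is rebuilt only when the dependency files change, which is where most of the caching benefit comes from.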

Use Cases and Examples

Docker finds applications across various industries and use cases. Here are a few examples:

Microservice Architecture

Docker is widely adopted in building microservice-based architectures. By containerizing individual services, developers can easily manage and deploy independent components, facilitating scalability, versioning, and maintainability.
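Independent services usually need to talk to each other. One common approach is a user-defined network, where containers can reach one another by name. The sketch below is only an illustration: the function name, the network name, and the container name api are hypothetical, and NGINX stands in for a real service.

```shell
# Run a service on a user-defined network and call it by name.
# $1 = network name (hypothetical, e.g. "shop-net")
two_service_network() {
  net="$1"
  docker network create "$net"
  # Start a stand-in service called "api" on that network
  docker run -d --name api --network "$net" nginx
  # A second container on the same network resolves "api" by its name
  docker run --rm --network "$net" alpine wget -qO- http://api
  # Clean up the container and the network
  docker rm -f api
  docker network rm "$net"
}
```

Calling `two_service_network shop-net` should print the NGINX welcome page fetched from the api container, assuming NGINX has finished starting by the time the request is sent.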

Continuous Integration and Deployment (CI/CD)

Docker plays a vital role in modern CI/CD pipelines. By encapsulating applications and their dependencies within containers, developers can ensure consistent environments for testing, building, and deploying applications across different stages of the development lifecycle.
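A pipeline step built on this idea could look roughly like the sketch below: build an image, run the tests inside it, and push it only if they pass. The function name and the repository name are hypothetical, and the sketch assumes the project is a Git repository whose package.json defines a test script.

```shell
# Build, test, and publish an image tagged with the current commit.
# $1 = image repository (hypothetical, e.g. "registry.example.com/team/myapp")
ci_pipeline() {
  # Tag the image with the short commit hash so every build is traceable
  image="$1:$(git rev-parse --short HEAD)"
  # Each step runs only if the previous one succeeded:
  # build the image, run the tests inside it, then push it
  docker build -t "$image" . &&
  docker run --rm "$image" npm test &&
  docker push "$image"
}
```

Because the steps are chained with &&, a failing build or test run prevents the image from being pushed.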

Hybrid Cloud and Multi-Cloud Deployments

Docker’s portability allows organizations to deploy applications seamlessly across hybrid cloud environments or multiple cloud providers. Docker’s compatibility with different platforms and infrastructure providers simplifies the management of applications running in diverse cloud setups.

How to start with Docker

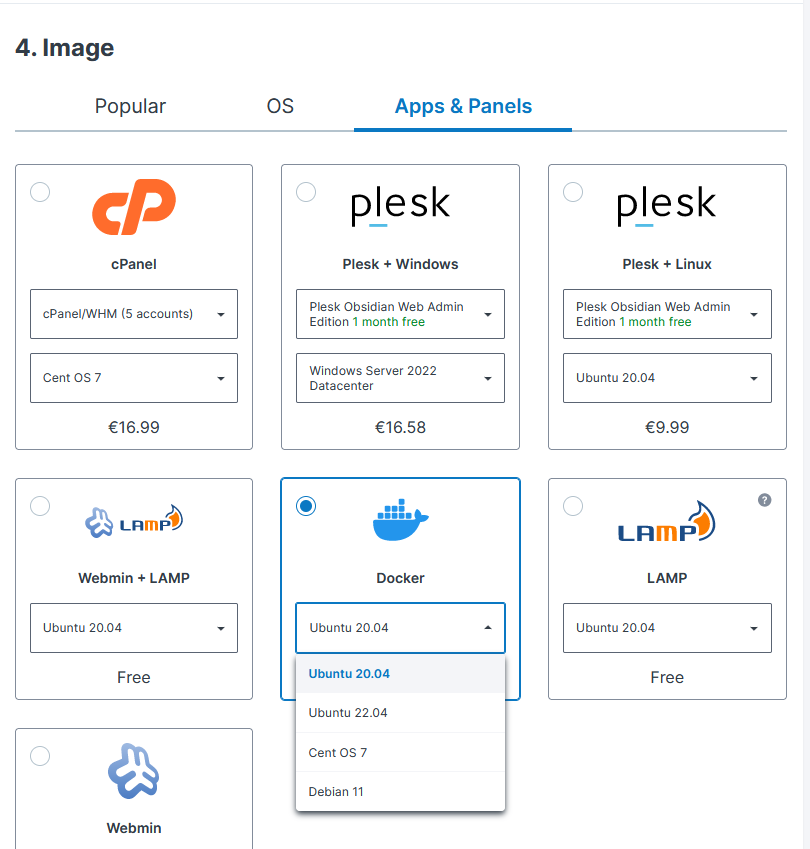

If you’re eager to explore the world of Docker and its potential to revolutionize your development and deployment processes, Contabo offers an excellent solution to kickstart your journey. By opting for Contabo’s Docker-ready VPS, you can save time and effort with Docker pre-installed, ensuring a seamless setup process.

To get started, simply select the Docker-preinstalled option during the ordering process, and you’ll be ready to deploy and manage containers in no time.

However, if you already have a Contabo VPS or prefer to install Docker yourself, don’t worry. Here’s a quick guide to help you install Docker and Docker Compose on a Debian- or Ubuntu-based Linux system (the commands below use apt):

Installation of Docker and Docker Compose

Install Docker

sudo apt update
sudo apt install docker.io

Start Docker Service

sudo systemctl start docker
sudo systemctl enable docker

Install Docker Compose

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

So, whether you opt for Contabo’s Docker-ready VPS or choose to install Docker yourself, you’re just a few commands away from embracing the power and efficiency of Docker.
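Once the installation commands have completed, a quick check confirms that both tools respond and that the daemon can actually run a container. This is a small sketch; the function name is hypothetical, and hello-world is a tiny test image that prints a greeting and exits.

```shell
# Check that Docker and Docker Compose are installed and working.
verify_docker_install() {
  # Print the installed versions, then run a throwaway test container
  docker --version &&
  docker-compose --version &&
  sudo docker run --rm hello-world
}
```

If `verify_docker_install` ends with the hello-world greeting, the setup is ready for the examples in this article.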

Conclusion

Docker has revolutionized the way applications are built, shipped, and run. By leveraging containerization, Docker provides a consistent and portable environment, enabling streamlined application deployment, scalability, and resource efficiency. Understanding Docker’s concepts, benefits, and best practices empowers developers and operations teams to embrace this powerful technology and enhance their software development workflows.

Whether you’re a developer, system administrator, or DevOps practitioner, diving into the Docker ecosystem opens up a world of possibilities for efficient application deployment and management. So, take a deep dive into Docker, explore its features, experiment with Dockerfiles, and unleash the potential of containerization in your projects. With Docker, you can simplify the deployment process, improve scalability, enhance resource utilization, and drive innovation in your software development endeavors.