DeepSeek AI represents a major step forward in open-source language models, delivering strong reasoning capabilities without specialized GPU hardware. When combined with Ollama, a lightweight model management tool, it provides a practical solution for running AI models locally with better privacy and performance control.

Why Self-Host DeepSeek?

Running DeepSeek on your own Contabo server gives you clear privacy and security benefits. This matters even more after security researchers recently identified an unprotected DeepSeek database that exposed sensitive information including chat histories, API keys, and backend details. Self-hosting keeps all data within infrastructure you control, which avoids exposure through a third-party service and can support your GDPR and HIPAA compliance efforts.

Revolutionary CPU-Based Performance

DeepSeek models can also run efficiently on CPU-based systems. This removes the need for expensive GPU hardware and makes advanced AI available to more users. Whether you’re using the 1.5b or the 14b model, the models are claimed to run on standard server configurations, including virtual private servers.

Local Control and Flexibility for Testing Purposes

Self-hosting gives you full API access and complete control over model settings. This setup enables running tests and sandboxes for custom implementations, better security measures, and smooth integration with existing systems. The open-source nature of DeepSeek provides transparency and allows community-driven improvements, making it an excellent choice for organizations that value both performance and privacy.

Step-by-Step Guide: Installing DeepSeek on Contabo

Disclaimer: We recommend our VDS M-XXL models, which provide the necessary resources for optimal performance. Plans with lower specifications might be usable, but LLM performance will suffer on them.

Exclusive: Contabo’s Pre-configured DeepSeek Image

Contabo leads the industry as the first and only provider offering a ready-made image with DeepSeek out of the box. This solution eliminates complex setup procedures and gets you started within minutes. Our pre-configured image ensures optimal performance settings and immediate deployment capabilities.

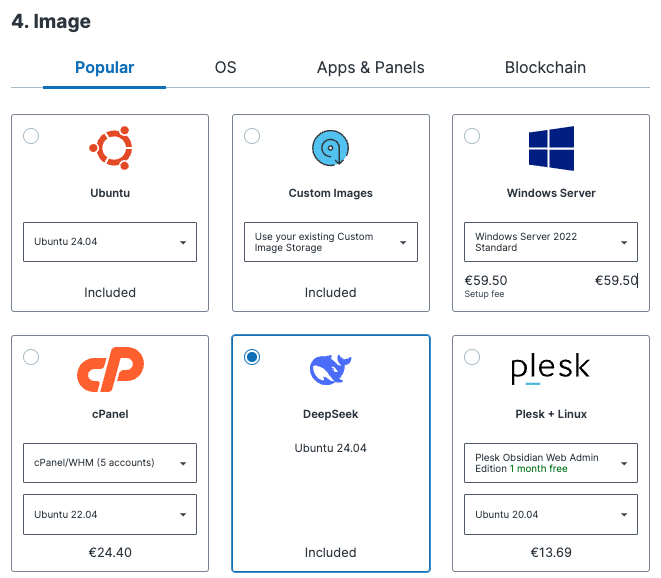

To select the pre-configured DeepSeek image during server setup:

- Proceed to step 4 “Image” in the Product Configurator

- Select the DeepSeek image from the available options in the Popular tab

- Continue with your server configuration as desired for your testing purposes

This pre-configured image lets you deploy immediately and leaves you free to focus on testing and tuning model performance. Once your server is provisioned, you can start using DeepSeek right away without additional installation steps.

Manual Installation

For users who prefer a custom setup, the manual installation process is straightforward but requires several steps. Let’s break it down:

Before installing DeepSeek on your Contabo server, ensure you have Ubuntu 22.04 LTS installed.
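To confirm the operating system before going further, a small check along the following lines can help. It is only a sketch: it assumes the standard /etc/os-release file found on Ubuntu, and the function name is_ubuntu_2204 is made up for this example.

```shell
#!/bin/sh
# Sketch: report whether an os-release file describes Ubuntu 22.04.
# The function name and the optional file argument are illustrative.
is_ubuntu_2204() {
    release_file="${1:-/etc/os-release}"
    [ -r "$release_file" ] || return 1
    # Source the file in a subshell so its variables do not leak out.
    (
        . "$release_file"
        [ "$ID" = "ubuntu" ] && [ "$VERSION_ID" = "22.04" ]
    )
}
```

Calling is_ubuntu_2204 without an argument checks the running system and returns a non-zero status on any other distribution or release.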

First, make sure your system is up to date:

sudo apt update && sudo apt upgrade -y

Next, install Ollama, the lightweight model management tool that will handle your DeepSeek deployment:

curl -fsSL https://ollama.com/install.sh | sh

After installation, start the Ollama service to prepare for model deployment:

sudo systemctl start ollama

Now you’re ready to pull your chosen DeepSeek model. The 14b version offers an excellent balance of performance and resource usage:

ollama pull deepseek-r1:14b

Model Deployment

Once installation is complete, you can start using DeepSeek. Run the model directly for interactive use:

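ollama run deepseek-r1:14b

For API access, which enables integration with your applications, start the Ollama server:

ollama serve

If the Ollama service started during installation is still running, the API is usually already listening on port 11434 and this command reports that the address is in use. In that case no second server is needed.

Verification Steps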
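Because applications will talk to the HTTP API, one way to confirm that the server answers is to send it a single prompt. The sketch below assumes the server listens on Ollama's default address, http://localhost:11434, and uses the /api/generate endpoint. The OLLAMA_URL variable and both function names are made up for this example, and the prompt is assumed to be a single line of text.

```shell
#!/bin/sh
# Sketch: send one prompt to a local Ollama server over HTTP.
# OLLAMA_URL and the function names are illustrative, not part of Ollama.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Build the JSON body for /api/generate. Backslashes and double quotes
# in the prompt are escaped; multi-line prompts are not handled.
build_generate_payload() {
    model="$1"
    prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
    printf '{"model":"%s","prompt":"%s","stream":false}\n' "$model" "$prompt"
}

# POST the payload and print the raw JSON reply.
ask_deepseek() {
    build_generate_payload "$1" "$2" |
        curl -fsS "$OLLAMA_URL/api/generate" \
            -H 'Content-Type: application/json' -d @-
}
```

For example, ask_deepseek deepseek-r1:14b "Why is the sky blue?" should print a JSON object whose response field holds the generated text. Setting stream to false asks for one complete reply instead of a sequence of partial ones.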

Verify your installation with these simple checks:

# Check model status

ollama list

# Run a simple test

ollama run deepseek-r1:14b "Hello, please verify if you're working correctly."

Model Compatibility and Requirements

Model Size Overview

DeepSeek models come in various sizes to suit different needs:

| Model Version | Size | RAM Required | Recommended Plan | Capabilities |
| --- | --- | --- | --- | --- |
| deepseek-r1:1.5b | 1.1 GB | 4 GB | VDS M | Basic tasks, can run on standard laptops, suitable for testing and prototyping |
| deepseek-r1:7b | 4.7 GB | 10 GB | VDS M | Text processing, coding tasks, translation work |
| deepseek-r1:14b | 9 GB | 20 GB | VDS L | Strong performance in coding, translation, and writing tasks |
| deepseek-r1:32b | 19 GB | 40 GB | VDS XL | Matches or exceeds OpenAI’s o1-mini performance, advanced analytics |
| deepseek-r1:70b | 42 GB | 85 GB | VDS XXL | Enterprise-level tasks, advanced reasoning, complex computations |
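The table can be turned into a small lookup that picks the largest model fitting a given amount of memory. This is a sketch only: pick_model is a hypothetical helper, and its thresholds are copied from the RAM Required column above.

```shell
#!/bin/sh
# Sketch: print the largest deepseek-r1 tag whose "RAM Required" value
# from the table fits into the given amount of memory (whole GB).
# pick_model is a hypothetical helper name.
pick_model() {
    ram_gb="$1"
    if   [ "$ram_gb" -ge 85 ]; then echo "deepseek-r1:70b"
    elif [ "$ram_gb" -ge 40 ]; then echo "deepseek-r1:32b"
    elif [ "$ram_gb" -ge 20 ]; then echo "deepseek-r1:14b"
    elif [ "$ram_gb" -ge 10 ]; then echo "deepseek-r1:7b"
    elif [ "$ram_gb" -ge 4 ];  then echo "deepseek-r1:1.5b"
    else
        echo "less than 4 GB RAM: no model in the table fits" >&2
        return 1
    fi
}
```

On a server with 24 GB of RAM, ollama pull "$(pick_model 24)" would pull deepseek-r1:14b. The memory figure can be read with free -g, which typically reports slightly less than the plan's nominal size.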

Resource Allocation Guidelines

Memory management is key for optimal performance. As a rule of thumb, allocate at least twice the model size in RAM for smooth operation. For example, the 14b model (9 GB) runs best with at least 20 GB RAM, which covers both model loading and processing. For storage, choose NVMe drives for faster model loading.
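The doubling rule is easy to express as a quick calculation. The following sketch uses two made-up helper names, min_ram_gb and has_enough_ram, and rounds the result up to whole gigabytes.

```shell
#!/bin/sh
# Sketch of the "twice the model size" rule; names are illustrative.
# Prints the minimum RAM in whole GB for a model size given in GB.
min_ram_gb() {
    awk -v size="$1" 'BEGIN {
        need = size * 2
        whole = int(need)
        if (need > whole) whole++    # round up to the next whole GB
        print whole
    }'
}

# Succeeds when total_gb is at least twice model_gb.
has_enough_ram() {
    model_gb="$1"
    total_gb="$2"
    [ "$total_gb" -ge "$(min_ram_gb "$model_gb")" ]
}
```

For the 9 GB model, min_ram_gb 9 prints 18, slightly below the 20 GB listed in the table. A call such as has_enough_ram 42 64 fails, signalling that 64 GB is too little for the 70b model under this rule.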

Why Choose Contabo to Host DeepSeek for Testing Purposes

Contabo’s servers provide high-performance NVMe storage, substantial monthly traffic allowance (32 TB), and competitive pricing without hidden fees. With data centers across 12 locations in 9 regions spanning 4 continents, including multiple sites in Europe, the United States (New York, Seattle, St. Louis), Asia (Singapore, Tokyo), Australia (Sydney), and India, Contabo offers global reach for your AI deployments. The combination of reliable hardware and cost-effective plans makes it an ideal choice for self-hosted AI deployments.

Final Thoughts

The combination of DeepSeek’s efficient models and proper server infrastructure opens new possibilities for organizations valuing data privacy and performance. Stay connected with us as we continue to evolve in this space – this pre-configured image is just the beginning of our commitment to making advanced AI technology more accessible and manageable.