Have you ever needed to get data from a website that doesn’t have an API? Maybe you want to track your competitors’ prices, gather leads from a business directory, or pull article content for analysis. Manually copying and pasting this information is slow, tedious, and prone to error. This is where n8n web scraping comes in. By using n8n, you can build powerful, automated workflows to extract the exact data you need from any website, saving you countless hours of manual work.

This guide will show you how to scrape with n8n, starting with the absolute basics and moving into more advanced techniques. We’ll cover everything from simple n8n HTML scraping of static pages to handling dynamic, JavaScript-heavy sites. By the end, you’ll have the skills to turn any website into a structured data source and build a complete n8n data scraping pipeline.

The Basics of Web Scraping with n8n: An Introduction to Core Nodes

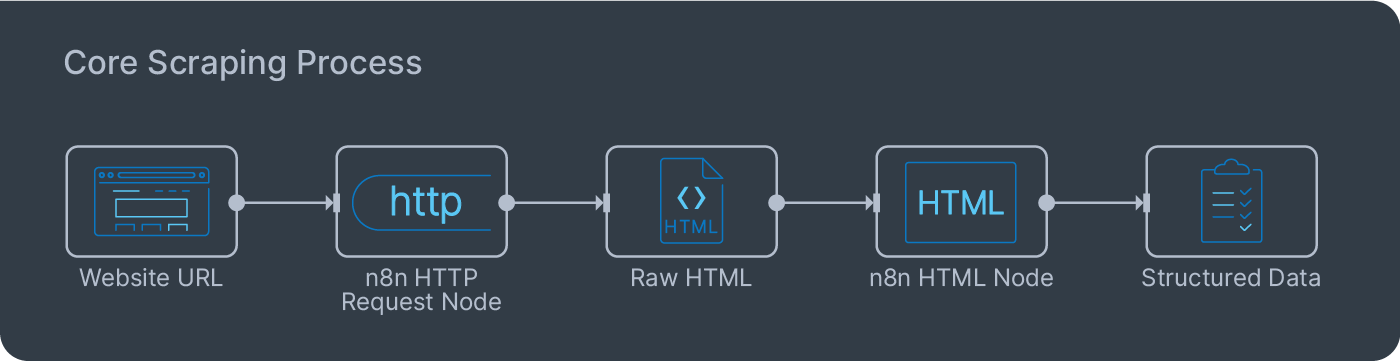

At its heart, all n8n web scraping follows a simple, two-step process:

- Fetch: You make a request to a website’s server, just like a web browser does, and get back the raw HTML code of the page.

- Parse: You sift through that HTML code to find and extract the specific pieces of information you care about, like a product name, price, or article title.

n8n provides two built-in nodes that handle this process. It’s important to understand what each one does.

The first is the n8n HTTP request node. This is your tool for fetching the webpage. You give it a URL, and it returns the complete HTML source code for that page. It doesn’t render the page or execute any JavaScript; it just grabs the raw code, which is exactly what we need for the most common and efficient type of scraping.

The second is the HTML node. This is your parsing tool. You feed it the raw HTML from the previous step, and it allows you to use CSS selectors – the same selectors used to style web pages – to pinpoint and extract specific data. This two-step approach is incredibly flexible, as it separates the act of fetching from the act of parsing, making your workflows easier to build and debug.
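To make the fetch/parse split concrete, here is a minimal sketch of the same two steps outside n8n, using only the Python standard library. It is an illustration, not how the n8n nodes are implemented. The `post-title` class, the sample HTML, and the function names are assumptions made for the example, and the parser matches one tag and class rather than arbitrary CSS selectors.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch_html(url: str) -> str:
    """Fetch step: return the raw HTML of a page without rendering it."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TitleExtractor(HTMLParser):
    """Parse step: collect the text of every <h2 class="post-title"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: list[str] = []
        self._inside = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "post-title" in classes:
            self._inside = True
            self._buffer = []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self._inside:
            self.titles.append("".join(self._buffer).strip())
            self._inside = False


def parse_titles(html: str) -> list[str]:
    """Run the parser over an HTML string and return the extracted titles."""
    parser = TitleExtractor()
    parser.feed(html)
    return parser.titles


# Hypothetical sample page, so the parse step can be tried without a network call.
sample = """
<html><body>
  <h2 class="post-title"><a href="/a">First post</a></h2>
  <h2 class="post-title">  Second post </h2>
  <h2 class="sidebar">Not a post</h2>
</body></html>
"""
print(parse_titles(sample))
```

Because the two functions are independent, you can test the parsing logic against saved HTML before pointing `fetch_html` at a live site, which mirrors the debugging benefit of keeping the two nodes separate.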

Simple Scraping: How to Extract HTML Content with the HTTP Request Node

Let’s walk through a practical example of how to scrape with n8n. Our goal will be to perform a simple n8n data scraping task: extracting the titles of the latest blog posts from a website.

Step 1: Fetch the Page with the HTTP Request Node

First, add an n8n HTTP Request node to your workflow.

- In the URL field, enter the full URL of the page you want to scrape.

- Leave the Method as GET.

- Execute the node (see below if you get a 403 Forbidden error).

Step 2: Parse the Titles with the HTML Node

Once you have the HTML, connect an HTML node to the output of the HTTP Request node. This is where we’ll perform the n8n HTML scraping.

- Set the Operation to “Extract HTML Content”.

- In the Extraction Values table, we need to tell the node what to look for.

  - For the Key, type title. This is just a label for the data we’re extracting.

  - For the CSS Selector, enter the specific selector that targets the element you want. You can find this by right-clicking the element in your browser and choosing “Inspect.” In the developer panel that opens, right-click on the highlighted HTML element and choose Copy > Copy selector. This will copy the precise CSS selector to your clipboard, which you can then paste directly into the n8n HTML node.

  - For the Return Value, select “Text”.

- Execute the node. The output will be a clean list of the text content you extracted.

Troubleshooting a 403 Forbidden Error

If you immediately get a 403 Forbidden error in Step 1, don’t worry. This is very common. It means the website’s server has identified your request as coming from an automated script (which it is) and has blocked it. The most common reason for this is a missing User-Agent.

A User-Agent is a string that tells the server what kind of client is making the request. By default, n8n’s HTTP Request node sends a generic, non-browser one, which is an easy giveaway.

How to Fix It (First Step): You need to make your request look like it’s coming from a real web browser.

- In the HTTP Request node, find the Headers section.

- Click Add Header.

- In the Name field, type User-Agent.

- In the Value field, paste a common browser User-Agent string. A safe and standard one to use is: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36

- Execute the node again. In most cases, adding this header should resolve the 403 error and allow you to fetch the page’s HTML successfully.

If the User-Agent fix doesn’t work, another common reason for being blocked is your server’s IP address. You can configure the HTTP Request node to use a proxy server by adding a Proxy option to the node. This routes your request through a different IP address, which can often bypass simple IP-based blocks.
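For readers who want to see what these two fixes amount to at the HTTP level, the sketch below builds a request with a browser-like User-Agent and an opener that routes traffic through a proxy, using Python's standard `urllib`. It only illustrates the idea. The example URL and the helper names are assumptions, and nothing is sent until the opener is actually used.

```python
from urllib.request import ProxyHandler, Request, build_opener

# The same User-Agent string suggested above.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
)


def build_request(url: str) -> Request:
    """Attach a browser-like User-Agent header to a GET request."""
    return Request(url, headers={"User-Agent": BROWSER_UA})


def build_proxy_opener(proxy_url: str):
    """Return an opener that routes HTTP and HTTPS traffic through a proxy."""
    return build_opener(ProxyHandler({"http": proxy_url, "https": proxy_url}))


# Hypothetical target URL; constructing the request performs no network I/O.
request = build_request("https://example.com/blog")
print(request.get_header("User-agent"))
```

To send the request through a proxy you would call something like `build_proxy_opener(proxy_url).open(request, timeout=10)`, where `proxy_url` is whatever address your proxy provider gives you.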

What If That Doesn’t Work? (Next Step):

If you still get a 403 error, the website is likely using a more advanced bot detection service (like Cloudflare) that is blocking requests from known data center IP addresses, including n8n’s cloud servers. The User-Agent is correct, but the source of the request is being flagged.

In this scenario, your best option is to move on to the “Advanced Scraping” techniques discussed later in this article. You will need to use a residential proxy or a specialized scraping API service that routes your request through a real browser on a residential IP address, making it indistinguishable from a normal user.

Cleaning and Formatting Your Scraped Data

Often, the data you extract isn’t perfectly clean. It might have extra whitespace, unwanted characters, or need to be converted to a different format. You can handle this directly in n8n. To do simple cleaning, you can use expressions. For example, to remove leading/trailing whitespace from a title, you could use {{ $json.title.trim() }}.

For more complex transformations, the Code node is your best friend. This node lets you write a small snippet of JavaScript to process your data. You could use it to remove currency symbols from a price, convert a date format, or split a full name into a first and last name. Performing this cleaning step ensures the data you save later is uniform and ready for analysis.
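The three transformations mentioned above can be sketched as small functions. In the Code node you would express the same steps in the node's own language; standalone Python is used here purely to illustrate the logic. The function names, the day/month/year input format, and the assumption that prices use a dot as the decimal separator are all choices made for this example.

```python
import re
from datetime import datetime


def clean_price(raw: str) -> float:
    """Drop currency symbols and thousands separators, keeping digits and the dot."""
    digits = re.sub(r"[^\d.]", "", raw)
    return float(digits)


def to_iso_date(raw: str, source_format: str = "%d/%m/%Y") -> str:
    """Convert a date string in `source_format` to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(raw.strip(), source_format).date().isoformat()


def split_name(full_name: str) -> tuple[str, str]:
    """Split on the first space: everything after it is treated as the last name."""
    first, _, last = full_name.strip().partition(" ")
    return first, last.strip()


print(clean_price("$1,299.00"))
print(to_iso_date("03/02/2024"))
print(split_name("  Ada Lovelace "))
```

Real data is messier than this: prices written with a decimal comma or names with more than two parts would need extra rules, so treat these helpers as a starting point.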

n8n Advanced Scraping: Handling Dynamic Pages with the Ultimate Scraper Workflow

The simple HTTP Request method is fast and efficient, but it hits a wall with modern websites. If a site relies on JavaScript to load content, or if it’s protected by services like Cloudflare, you’ll often get an error or an empty page. For these situations, you need a more powerful approach for n8n advanced scraping.

While n8n has no native Selenium node to control a browser directly, the community has built powerful nodes that integrate dedicated scraping services. These services handle all the complex backend work – like running real browsers, rotating proxies, and solving CAPTCHAs – and give you a simple node to use in your workflow. This is often the best and easiest way to handle tough scraping jobs.

The Best Way: Using a Dedicated Scraping Community Node

For most users, the most effective way to scrape a dynamic or protected website is to use a community node that integrates with a service like ScrapeNinja or Firecrawl. These are not just simple browser services; they’re specialized scraping APIs designed to bypass anti-bot measures.

Here’s how you would approach it using a node like ScrapeNinja, which is a great example of this method:

- Install the Community Node: In your n8n instance, go to Settings > Community Nodes and install the node for the service you choose (e.g., n8n-nodes-scrapeninja).

- Add the Node to Your Workflow: Once installed, you will find a new ScrapeNinja node in your node panel. Add it to your workflow.

- Configure the Scrape:

- Extract the Data: These services often include built-in parsers. You can provide CSS selectors directly within the node to extract the data you need, or you can pass the full, rendered HTML to a standard n8n HTML node for parsing.

Using a dedicated community node like this is the recommended approach for how to scrape with n8n on difficult sites because it removes a lot of complexity. The service handles the browser automation, proxy rotation, and anti-bot challenges for you, letting you focus on the data. This is more reliable and easier to maintain than managing a headless browser integration yourself.

From Data to Insight: Using AI for Post-Scraping Analysis

Scraping data is only the first step. The real value comes from what you do with it. After performing n8n HTML scraping, you’re often left with raw, unstructured text. This is where AI can transform your workflow into something truly powerful.

By connecting an AI node like OpenAI or Google Gemini after your HTML node, you can instantly clean, structure, and analyze the scraped content. For example, imagine you’ve scraped customer reviews for a product. The raw text is messy and varied. You can pass each review to an AI node with a prompt like:

“From the following review text, extract the sentiment (Positive, Negative, or Neutral), identify the key features mentioned, and assign a rating from 1 to 5. Respond in JSON format.”

The AI will return a clean, structured JSON object similar to this:

    {
      "sentiment": "Positive",
      "features_mentioned": ["battery life", "screen quality"],
      "rating": 5
    }

You can then take this structured data and insert it directly into a database or spreadsheet using nodes like Postgres or Google Sheets. This turns your scraping workflow into a powerful n8n data pipeline, transforming messy web content into valuable, analysis-ready insights automatically.
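Model output is not guaranteed to match the requested shape, so it can be worth validating the reply before it reaches your database. The sketch below shows one possible check in plain Python. The field names come from the example JSON above, while the function name and the validation rules are assumptions for illustration.

```python
import json

ALLOWED_SENTIMENTS = {"Positive", "Negative", "Neutral"}


def parse_review_analysis(reply: str) -> dict:
    """Parse the model's JSON reply and raise ValueError if a field is off-spec."""
    data = json.loads(reply)  # malformed JSON raises JSONDecodeError, a ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    sentiment = data.get("sentiment")
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {sentiment!r}")

    features = data.get("features_mentioned")
    if not isinstance(features, list):
        raise ValueError("features_mentioned must be a list")

    rating = data.get("rating")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(rating, bool) or not isinstance(rating, int) or not 1 <= rating <= 5:
        raise ValueError(f"rating must be an integer from 1 to 5, got {rating!r}")

    return {
        "sentiment": sentiment,
        "features_mentioned": [str(feature) for feature in features],
        "rating": rating,
    }


reply = (
    '{"sentiment": "Positive", '
    '"features_mentioned": ["battery life", "screen quality"], "rating": 5}'
)
print(parse_review_analysis(reply))
```

Replies that fail validation can be routed to a review list rather than written to the main table, so one malformed response does not pollute the dataset.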

Practical Workflow: n8n Lead Generation Funnel Automation

Let’s put these concepts together to build a practical workflow for n8n lead generation. The goal is to find potential customers from a directory website and add them to a lead list, automating a task that would take hours to do manually.

- Scrape the Directory: Start by scraping a local business directory (e.g., a list of marketing agencies in a specific city). Use the HTTP Request or third-party scraping nodes and HTML nodes to extract the company name and their website URL for each listing.

- Find a Contact Email: For each company website you’ve scraped, use a service like Hunter.io to find a publicly listed email address. n8n features a dedicated Hunter node you can use for this purpose.

- Filter and Enrich: Add an IF node to filter out any companies for which you couldn’t find an email. For the valid leads, you could even scrape each company’s “About Us” page (using the scraping technique from step 1) and pass the text to an OpenAI node to draft a personalized opening line for a cold email.

- Add to Lead List: Use the Google Sheets or Airtable node to append the company name, website, and contact email to your master lead list.

This workflow automates the entire top-of-funnel process, saving your sales team hours of manual prospecting and allowing them to focus on building relationships.
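The filter-and-append logic at the core of this funnel is simple enough to sketch in a few lines. The version below is plain Python, not an n8n node. The `name`, `website`, and `email` field names and the function name are assumptions chosen for the example.

```python
def build_lead_rows(companies: list[dict]) -> list[list[str]]:
    """Keep only companies with an email and shape them as spreadsheet rows."""
    rows = []
    for company in companies:
        email = (company.get("email") or "").strip()
        if not email:
            continue  # same role as the IF node: drop leads without a contact
        rows.append([company["name"].strip(), company["website"].strip(), email])
    return rows


# Hypothetical scraped and enriched records.
companies = [
    {"name": "Acme Marketing ", "website": "https://acme.example", "email": "hello@acme.example"},
    {"name": "No Contact Ltd", "website": "https://nocontact.example", "email": None},
]
print(build_lead_rows(companies))
```

Each inner list maps to one row of company name, website, and contact email, which is the shape you would append to the master lead list.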

n8n Scaling: Building Robust and Efficient Web Scraping Workflows

When your n8n web scraping project grows from a few pages to hundreds or thousands, your workflow design needs to evolve. A simple, linear workflow will quickly hit performance bottlenecks or get blocked. For successful n8n scaling, you need to build workflows that are modular, resilient, and respectful of the servers you are scraping.

Manage Concurrency to Avoid Overloading

A common mistake is to get a list of 1,000 URLs and immediately pass it to an HTTP Request node. By default, n8n will try to run all 1,000 requests in parallel, which can overwhelm your n8n instance and is very likely to get your IP address blocked.

The correct approach is to control the concurrency.

- Use the Loop Over Items Node: Before your HTTP Request node, insert a Loop Over Items node. Configure it to a small batch size, like 5 or 10. This ensures n8n only ever works on a small number of URLs at a time.

- Add a Wait Node: After each batch is processed, you can add a Wait node to pause the workflow for a few seconds. This throttling is useful for avoiding rate limits and appearing like a more natural user.
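The batching-plus-pause pattern described in these two steps can be sketched as follows. This is a plain-Python illustration of the idea, not n8n's implementation. The function names and the default batch size and pause are assumptions.

```python
import time
from typing import Callable, Iterator


def batches(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of `items`, each at most `size` long."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def process_in_batches(
    urls: list[str],
    handler: Callable[[str], object],
    batch_size: int = 5,
    pause_seconds: float = 2.0,
) -> list:
    """Call `handler` on each URL, pausing between batches to throttle requests."""
    results = []
    for index, batch in enumerate(batches(urls, batch_size)):
        if index > 0:
            time.sleep(pause_seconds)  # plays the role of the Wait node
        for url in batch:
            results.append(handler(url))
    return results
```

With 1,000 URLs, a batch size of 10, and a 2-second pause, the job spends 99 × 2 = 198 seconds waiting in total, a modest cost for staying under most rate limits.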

Break Down Large Workflows with Sub-Workflows

A single, massive workflow that does everything (fetches URLs, scrapes pages, cleans data, saves to a database) is difficult to debug and maintain. A much better practice for complex scraping tasks is to create smaller, modular sub-workflows.

- Main Workflow: A “master” workflow that orchestrates the overall process. Its job is simply to get the list of URLs and then pass each one to a sub-workflow.

- Scraping Sub-Workflow: This workflow is triggered by the “master” using an Execute Sub-Workflow node. It takes a single URL as input, performs the scrape, cleans the data, and then returns the structured result.

This modular design makes n8n troubleshooting much easier. If a specific part of the process fails, you only need to debug that one small, focused workflow.

Implement Robust Error Handling

For any large-scale scraping job, failures are inevitable. A website might be down, its layout might change, or your proxy might fail. Your workflow must be able to handle this without stopping entirely.

- Use Continue on Fail: For any node that might fail (like the HTTP Request or HTML nodes), go to its Settings tab and select “Continue (using error output)” in the “On Error” setting.

- Create an Error Path: This will create a second “error” output on the node. Connect this to a separate branch of your workflow that logs the error. For example, you can connect it to a Google Sheets node configured to append a new row to an “Error Log” sheet, saving the URL that failed and the specific error message. This allows you to review and re-process failed items later.
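The same "continue on failure, log the error" idea looks like this in plain Python. It is a sketch of the pattern rather than of n8n internals. The function names and the shape of the log entries are assumptions for illustration.

```python
from typing import Callable


def scrape_all(urls: list[str], scrape_one: Callable[[str], object]):
    """Scrape every URL, collecting failures in a log instead of stopping."""
    results, error_log = [], []
    for url in urls:
        try:
            results.append({"url": url, "data": scrape_one(url)})
        except Exception as exc:  # broad on purpose: any failure goes to the log
            error_log.append({"url": url, "error": f"{type(exc).__name__}: {exc}"})
    return results, error_log


def fake_scrape(url: str) -> str:
    """Hypothetical stand-in for a real scraper; fails for one URL."""
    if "broken" in url:
        raise TimeoutError("request timed out")
    return f"content of {url}"


results, error_log = scrape_all(
    ["https://example.com/a", "https://example.com/broken"], fake_scrape
)
print(results)
print(error_log)
```

The `error_log` entries hold what the error branch would write to the “Error Log” sheet: the failing URL and the message, ready to be re-processed later.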

By adopting these scaling practices, you can build n8n web scraping workflows that are not only powerful but also stable, efficient, and easy to manage as your data needs grow.

n8n Web Scraping FAQ

- Is web scraping legal?

It’s a legal gray area. To stay on the right side of things, always check a website’s robots.txt file, never overload a server with too many requests, and avoid scraping personal data or copyrighted content. When in doubt, use extra caution.

- How do I handle sites that require a login?

For sites that require you to be logged in, you need to manage a session. You can do this by first logging in with your browser and using its developer tools (usually under the “Network” or “Application” tab) to find the session cookie. You can then copy this cookie value and add it as a Cookie header in your HTTP Request node settings. This will make your requests look like they are coming from your logged-in browser session.

- What if the website’s layout changes?

This is the biggest challenge and an unavoidable reality of web scraping. If a website redesigns its pages, your CSS selectors will likely break, and your workflow will fail. The only solution is to go back into your HTML node and update the selectors to match the new layout. Building robust scrapers means accepting that they will require occasional maintenance to keep them running smoothly.