Docker is an open platform for running applications in a loosely isolated environment, which separates them from the infrastructure they run on. We all know the sentence “But it works on my machine”, and Docker tries to solve exactly this problem. The environment your app runs in, called a container, can easily be replicated on the same host and on other hosts or machines. All the target machine needs is Docker itself. You can find more information about containers and how they differ from virtual machines in this article.

Why should I use Docker?

There are many scenarios where Docker helps you improve your workflows and deliver your applications consistently, quickly, and securely:

- Development: When developers write code locally and want to share their work, it can be complicated to replicate the exact same environment on other machines (e.g. a different operating system, required software that is not installed, …). But if the application is packaged in a standardized environment, in this case a Docker container, the other machine only needs to have Docker installed, because Docker replicates the environment.

- Deployment: The same applies to production environments: once the software is fully tested and ready for deployment, all it takes is to upload the updated container image.

- Portability: To run a container, only Docker is required. This means you can run a container on a developer’s local machine, on a physical or virtual server in a data center, or on many cloud providers. No specific environment is required, because Docker replicates the environment the application needs to run.

To put it all together, Docker helps you to simplify development by creating the exact environment required for the app to run and thereby also makes the deployment of the app more reliable and consistent.

And what is Cloud-Init, again?

You can think of Cloud-Init as Docker for cloud instances rather than for applications. A Cloud-Init config describes the desired state of the instance, and Cloud-Init sets up the instance exactly as described. Cloud-Init has many built-in modules to customize nearly every aspect of your instance, like setting up users and groups, adding SSH keys, installing software, or simply executing commands or creating files.

But Cloud-Init is not just there to automate a single installation of a new VPS; it also helps you set up many new instances at scale. You define the config once and then reuse it every time you set up a new instance. This can save you time that you may not have in the event of a disaster or when you need more instances to handle rapidly growing workloads.

If you want to learn more about the fundamentals of Cloud-Init and why it’s so cool, read our introduction to Cloud-Init here.

Why should I use Docker with Cloud-Init?

Imagine your app is standardized in Docker, which helped you in the development process, and now it’s time to deploy it. You don’t have to care much about the app itself, because you created a Docker container and Docker will create the exact environment your app needs. But before this can happen, Docker needs to be installed on your server. And to install Docker, you first have to set up the server, install all the required packages, harden its security, and adjust its configuration, so it takes some time before you can actually deploy your app. Cloud-Init helps you automate this process by setting up the server (including the Docker installation), and then, when the server is ready, Docker can start your app. That’s cool, isn’t it? And although at Contabo you can get Docker preinstalled with the OS, there are reasons to use Cloud-Init instead, such as a more in-depth customization of the cloud instance. So let’s get to work and create a Cloud-Init configuration file to help us with that.

Get started with Docker

To follow along, you need to have Docker installed on your system. Head over to the Docker documentation for installation options for each operating system.

Create a demo project

To keep it simple, we’re creating a NodeJS app that uses Express as the web server to display a “Hello world” message. Make sure you have NodeJS installed and run the following commands in your terminal:

npm init -y

npm install express

This sets up a new Node project and installs Express. Then create an index.js file; that’s where we write the code:

const express = require("express");

const app = express();

// Use PORT specified in the environment variables or use 3000 as fallback

const PORT = process.env.PORT || 3000;

// Listen to incoming GET requests

app.get("/", function(request, response) {

response.send("Hello from NodeJS in Docker!");

});

// Start the app on the specified port

app.listen(PORT, function() {

console.log(`App is running on http://localhost:${PORT}`);

});

We can test it now by running node index.js, but let’s add this as a command to the package.json:

{

"name": "docker-cloudinit-sample",

"version": "1.0.0",

"description": "",

"main": "index.js",

"scripts": {

"start": "node index.js"

},

"keywords": [],

"author": "",

"license": "ISC",

"dependencies": {

"express": "^4.18.1"

}

}

Now we can start our app with the following command:

npm run start
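The PORT fallback in index.js is what later lets the Dockerfile choose the port without touching the code. As a minimal sketch of that lookup, the logic could be written with explicit validation, since environment variables always arrive as strings. Note that resolvePort is a hypothetical helper for illustration; it is not part of Express or of the app above, which simply uses process.env.PORT || 3000.

```javascript
// Hypothetical helper (not part of the original app): a stricter version of
// `process.env.PORT || 3000` that validates the value before using it.
function resolvePort(env, fallback = 3000) {
  // Environment variables are strings, so convert explicitly.
  const parsed = Number.parseInt(env.PORT, 10);
  const isValid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return isValid ? parsed : fallback;
}

console.log(resolvePort({}));              // 3000 (PORT not set)
console.log(resolvePort({ PORT: "80" }));  // 80
console.log(resolvePort({ PORT: "abc" })); // 3000 (not a number)
```

In the app itself you would call it as resolvePort(process.env).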

The Dockerfile

We know how to run the app and what needs to be installed, but Docker doesn’t. Docker reads this information from the Dockerfile, so let’s create one (filename is Dockerfile). We start with an image, but instead of using something like Debian or Ubuntu where we have to install NodeJS ourselves, we can use the node image. In this image, NodeJS is already installed.

FROM node:16

Next, we need a directory to work in:

WORKDIR /usr/src/app

Now keep in mind that Docker caches the steps specified in the Dockerfile. So in the next step we only copy the package files (package.json and package-lock.json) and install the dependencies. This means Docker will not re-install the dependencies every time we change something in the source code; this step is only re-executed when the package files change.

COPY package*.json ./

RUN npm install

# Use this in production:

# RUN npm ci --only=production

All that’s left now is to copy the code:

COPY . .

We can now also specify the port the app should run on. Keep in mind that this port is the internal port inside the container. To access it from outside of the container, we can map it to another port that’s accessible from outside later when creating the container. In this example, the NodeJS app uses port 80 inside the container.

ENV PORT=80

To start the app, we need to run the command we specified when creating the NodeJS app:

CMD npm run start

Here is the final Dockerfile:

FROM node:16

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install

COPY . .

ENV PORT=80

CMD npm run start
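To see why copying the package files before the rest of the code pays off, here is a toy model of step caching. It is only a sketch under simplifying assumptions: each step gets a key derived from the previous step's key, the instruction text, and the content of the files it copies. Docker's real cache is more involved, and layerKeys and the file contents are made up for illustration.

```javascript
const crypto = require("crypto");

// Toy model (assumption, not Docker's actual algorithm): a step's cache key
// depends on the previous key, the instruction, and the files it copies.
function layerKeys(steps, files) {
  let previous = "";
  return steps.map(({ instruction, inputs }) => {
    const hash = crypto.createHash("sha256").update(previous).update(instruction);
    for (const name of inputs) {
      hash.update(name).update(files[name] ?? "");
    }
    previous = hash.digest("hex");
    return previous;
  });
}

const steps = [
  { instruction: "COPY package*.json ./", inputs: ["package.json", "package-lock.json"] },
  { instruction: "RUN npm install", inputs: [] },
  { instruction: "COPY . .", inputs: ["package.json", "package-lock.json", "index.js"] },
];

// Same package files, but index.js changed between the two builds.
const before = layerKeys(steps, { "package.json": "{}", "package-lock.json": "{}", "index.js": "v1" });
const after = layerKeys(steps, { "package.json": "{}", "package-lock.json": "{}", "index.js": "v2" });

// true = the step could be reused from the cache in this model
const reusable = steps.map((step, i) => before[i] === after[i]);
console.log(reusable); // [ true, true, false ]
```

In this model, editing only index.js leaves the npm install step reusable, which matches the behavior described above.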

But keep in mind that Docker installs all the required dependencies inside the image, and COPY . . would otherwise copy your local node_modules folder on top of them. So we create a .dockerignore file to exclude the node_modules folder (add everything to this file that Docker should ignore, e.g. logs):

# .dockerignore

node_modules

Creating the image

The image can be created using a single command:

docker build . -t <your username>/node-web-app

The . argument is the build context: use . if you run the command in the app’s directory, or replace it with the path to the app. The -t flag allows you to specify a name for the image. We’ll need this name later when spinning up containers and pushing the image to the registry.

Notice: You may face an error when trying to use that image on the server if it was built for a different platform (for example, built for linux/arm64/v8 but run on linux/amd64). You can resolve this issue by specifying the platform in the build process (append --platform linux/amd64 to the command).

To test the image, we can spin up a new container using the image we’ve just created:

docker run -p 5000:80 -d <your username>/node-web-app

The -p flag allows us to map a port that’s accessible from outside to a port inside the container. Remember when we set the port to 80 in the Dockerfile? 5000:80 tells Docker that we want to redirect the public port 5000 to the private port 80 inside the container. This means, when we request <ip>:5000, the request will be sent to this container and treated within the container as a request to port 80.

If you open localhost:5000 in your browser, you should see “Hello from NodeJS in Docker!”.
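The port mapping described above can be pictured as a simple pair of numbers. The following sketch splits a value like 5000:80 into its two parts. It assumes only the plain hostPort:containerPort form; the Docker CLI accepts further variants that this hypothetical parsePortMapping helper deliberately ignores.

```javascript
// Hypothetical helper: splits a "-p" value such as "5000:80" into its parts.
// Assumption: only the plain "hostPort:containerPort" form is handled.
function parsePortMapping(mapping) {
  const match = /^(\d+):(\d+)$/.exec(mapping);
  if (!match) {
    throw new Error(`Unsupported port mapping: ${mapping}`);
  }
  return { hostPort: Number(match[1]), containerPort: Number(match[2]) };
}

const { hostPort, containerPort } = parsePortMapping("5000:80");
console.log(`Requests to <ip>:${hostPort} reach port ${containerPort} inside the container`);
```

The first number is always the port on the host, the second the port inside the container.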

Image hosting

Before we can use the image on the production server, we need to host it somewhere. A popular place for that is Docker Hub. It already hosts many ready-to-use Docker images (for example, the node image we used while creating the Dockerfile), and you can also upload your own images. Another option is to use other registries like AWS ECR.

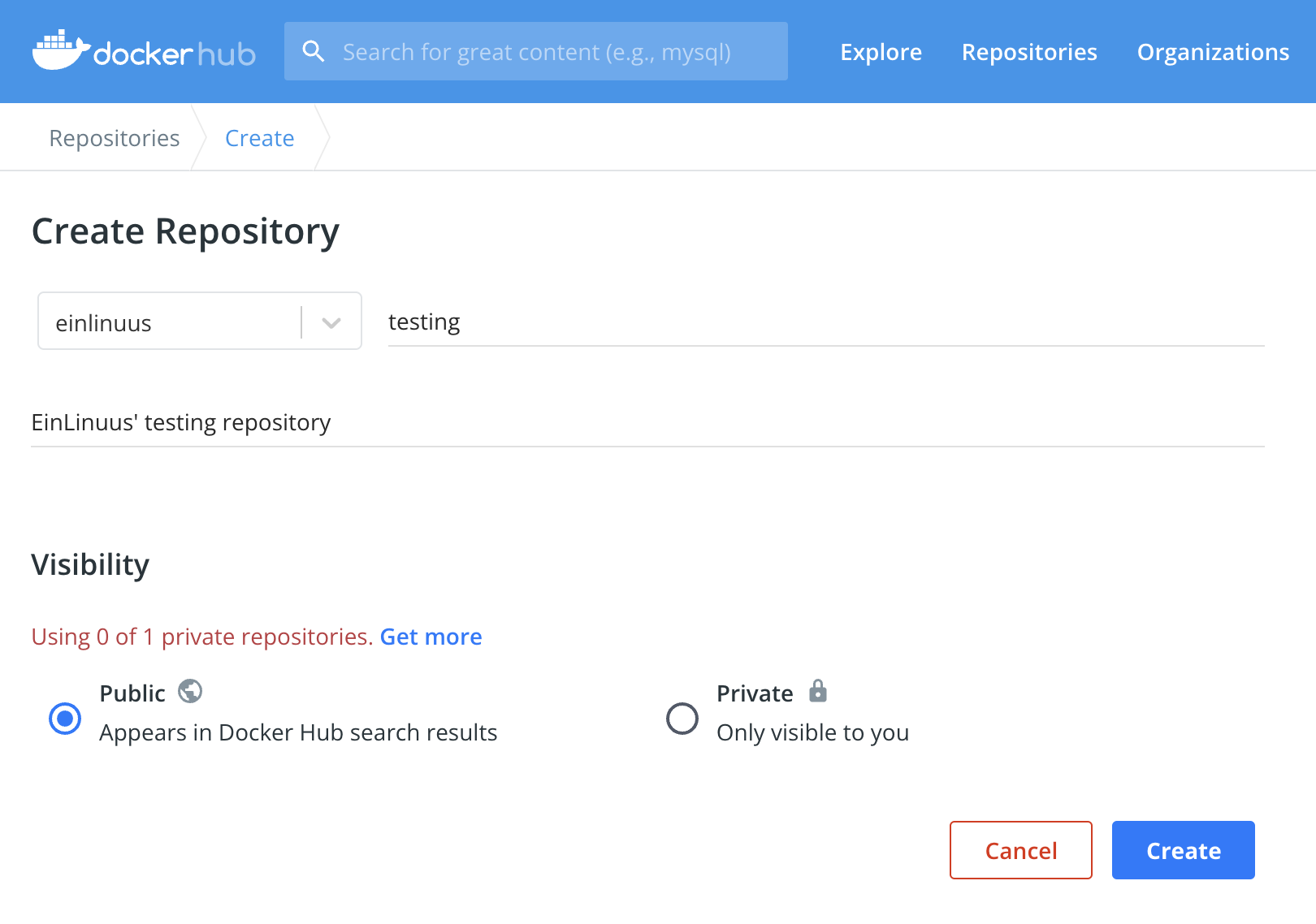

For this guide, let’s create a free account on Docker Hub and publish the image there. After you’ve created your account, click on the “Repositories” tab and create a new one. One repository can contain many images (stored as tags).

We push images to the registry using the Docker CLI, the same tool we used to create the image. But before we can push, we have to log in using docker login.

In order to push the image, make sure it’s named exactly like your repository. You can also add a tag here:

docker tag <your username>/node-web-app <dockerhub repo name>:<tag>

The final command looks something like this:

docker tag einlinuus/node-web-app einlinuus/testing:node

And this image can now be pushed to the Docker Hub:

docker push <dockerhub repo name>:<tag>
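The log in, tag, and push steps above always follow the same pattern, so they can be generated from three values. This is only an illustrative sketch: publishCommands is a hypothetical helper, and the image and repository names are the example values from this guide.

```javascript
// Hypothetical helper: builds the commands from this section for a given image.
function publishCommands(localImage, repository, tag) {
  const target = `${repository}:${tag}`; // e.g. "einlinuus/testing:node"
  return [
    "docker login",
    `docker tag ${localImage} ${target}`,
    `docker push ${target}`,
  ];
}

const commands = publishCommands("einlinuus/node-web-app", "einlinuus/testing", "node");
for (const command of commands) {
  console.log(command);
}
```

The helper only prints the commands; you still run them yourself in the terminal.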

Deploying Docker using Cloud-Init

For this guide, we’ll use the image we just uploaded to Docker Hub as an example. If you skipped that part, use the following Docker image:

einlinuus/testing:node

This is the exact image we created and uploaded in the “Get started with Docker” steps above.

Convenience script

Docker can be installed using different methods, but we’ll start with the convenience script:

#cloud-config

runcmd:

- curl -fsSL https://get.docker.com | sh

- docker pull einlinuus/testing:node

- docker run -d -p 80:80 einlinuus/testing:node

That’s a pretty simple config, since we’re only using one module (runcmd) with three commands:

- Download the script using cURL and execute it

- Pull the Docker image from Docker Hub and save it locally

- Run the downloaded image and map port 80 to port 80 in the container (so our app is accessible from the default HTTP port with no port specification required)
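Because the config only differs in the image name and the ports, it lends itself to being generated. The sketch below produces the same three runcmd entries for any image. dockerCloudConfig is a hypothetical helper, and the default ports are simply the ones used in this guide.

```javascript
// Hypothetical generator for the convenience-script cloud-config shown above.
function dockerCloudConfig(image, hostPort = 80, containerPort = 80) {
  const lines = [
    "#cloud-config",
    "runcmd:",
    "  - curl -fsSL https://get.docker.com | sh",
    `  - docker pull ${image}`,
    `  - docker run -d -p ${hostPort}:${containerPort} ${image}`,
  ];
  return lines.join("\n") + "\n";
}

console.log(dockerCloudConfig("einlinuus/testing:node"));
```

The first line must stay #cloud-config, otherwise Cloud-Init does not treat the file as a cloud-config.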

And that’s basically all you need to install and set up Docker with Cloud-Init. But as stated in the official Docker documentation, the convenience script is not recommended in production environments. And since our goal is to make the server ready for production, let’s also take a look at another installation method.

Docker repository

In order to install Docker using this method, we first need to add the Docker repository. After that, the required packages can be installed just like any other package:

#cloud-config

package_update: true

package_upgrade: true

packages:

- apt-transport-https

- ca-certificates

- curl

- gnupg-agent

- software-properties-common

runcmd:

- curl -fsSL https://download.docker.com/linux/debian/gpg | apt-key add -

- add-apt-repository "deb [arch=$(dpkg --print-architecture)] https://download.docker.com/linux/debian $(lsb_release -cs) stable"

- apt-get update -y

- apt-get install -y docker-ce docker-ce-cli containerd.io

- systemctl start docker

- systemctl enable docker

- docker pull einlinuus/testing:node

- docker run -d -p 80:80 einlinuus/testing:node

The config above works for Debian. Make sure to replace “debian” with “ubuntu” in both URLs if your server is running Ubuntu.

Now this config may look way more complex than it actually is:

1. Update and upgrade the packages (using the default package manager)

2. Install the following packages: apt-transport-https, ca-certificates, curl, gnupg-agent, software-properties-common. These packages are required for either Docker itself or the installation process

3. Add the Docker repository to the apt repository list

4. Update the repository list

5. Install the following packages: docker-ce, docker-ce-cli, containerd.io. This was not possible before (step 2) since the Docker repository was not added

6. Start and enable the Docker service

The last two commands are the same as in the previous installation method: download the image and start a new container using that image.
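Switching between Debian and Ubuntu means changing the distribution name in two URLs, which is easy to get wrong by hand. As a hedged sketch, the two repository commands could be generated from the distribution name. dockerRepoCommands is a hypothetical helper, and it assumes only the two distributions named in this guide.

```javascript
// Assumption: only the two distributions mentioned in this guide are handled.
const SUPPORTED_DISTROS = ["debian", "ubuntu"];

// Hypothetical helper: returns the repository-related runcmd entries.
function dockerRepoCommands(distro) {
  if (!SUPPORTED_DISTROS.includes(distro)) {
    throw new Error(`Unsupported distribution: ${distro}`);
  }
  const base = `https://download.docker.com/linux/${distro}`;
  return [
    `curl -fsSL ${base}/gpg | apt-key add -`,
    // "$(" is plain text in a JavaScript template literal; only "${" interpolates.
    `add-apt-repository "deb [arch=$(dpkg --print-architecture)] ${base} $(lsb_release -cs) stable"`,
  ];
}

for (const command of dockerRepoCommands("ubuntu")) {
  console.log(`- ${command}`);
}
```

The printed lines can replace the first two runcmd entries of the config above.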