A full postmortem including the timeline of the incident, the steps we took to resolve it, and the measures we are taking to improve our response in the future.

What Exactly Happened?

On September 2, 2024, all VPS, dedicated servers, and object storage instances in the Nuremberg data center became unavailable. The Customer Control Panel, email and phone support, and the placement of new orders were also not functional.

Why Did Customers’ Instances and Contabo Systems Become Unavailable?

The customers’ instances and Contabo systems (such as the Customer Control Panel and support channels) became unavailable because they were powered down to prevent the temperature inside the data center from exceeding 40°C (104°F), the maximum operating temperature for our servers and network devices. The shutdown also prevents heat damage to HDD, SSD, and NVMe storage, which could lead to data loss.
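As a rough illustration of this protective shutdown, the sketch below compares per-room temperatures against the 40°C limit and powers down any room that reaches it. The `ServerRoom` type, room names, readings, and the single fixed cutoff are assumptions for illustration only, not Contabo's actual monitoring logic.

```python
from dataclasses import dataclass
from typing import List

MAX_OPERATING_TEMP_C = 40.0  # limit stated in this postmortem


@dataclass
class ServerRoom:
    name: str             # hypothetical room identifier
    temperature_c: float  # current reading in degrees Celsius
    powered_on: bool = True


def enforce_thermal_limit(rooms: List[ServerRoom]) -> List[str]:
    """Power down every running room at or above the limit; return their names."""
    shut_down = []
    for room in rooms:
        if room.powered_on and room.temperature_c >= MAX_OPERATING_TEMP_C:
            room.powered_on = False
            shut_down.append(room.name)
    return shut_down


def celsius_to_fahrenheit(celsius: float) -> float:
    """Unit conversion used to double-check the 104°F figure."""
    return celsius * 9 / 5 + 32


rooms = [ServerRoom("room-a", 38.5), ServerRoom("room-b", 40.2)]
print(enforce_thermal_limit(rooms))  # ['room-b']
print(celsius_to_fahrenheit(MAX_OPERATING_TEMP_C))  # 104.0
```

A real system would likely act before the limit is reached, with some safety margin, rather than exactly at it.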

Why Was the Temperature Inside the Nuremberg Data Center Increasing?

The temperature inside the Nuremberg data center was increasing because the air conditioning system was not cooling the air. Servers generate heat as they operate, and without working air conditioning the temperature rose above the safe limit. High outside temperatures made the situation even worse.

Why Was the Cooling System Not Working?

The cooling system stopped cooling the air because it was switched off automatically and did not turn back on.

Why Was the Cooling System Shut Down Automatically?

The cooling system was shut down automatically because the Nuremberg data center switched to its uninterruptible power supply (UPS) for emergency power. It is standard procedure to shut down the cooling system while the data center runs on UPS power and to turn it back on a few seconds later, once either the diesel generator takes over or the public grid power supply is restored.
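The standard procedure can be sketched as a small event handler: cooling is shed when power moves to the UPS and restarted when the grid or generator takes over. The `CoolingController` class and its `restart_cooling` hook are hypothetical; the second scenario models the failure described in this postmortem, where the restart attempt does not succeed.

```python
from enum import Enum, auto
from typing import Callable, List


class PowerSource(Enum):
    GRID = auto()
    UPS = auto()
    GENERATOR = auto()


class CoolingController:
    """Hypothetical controller modelling the standard UPS/cooling procedure."""

    def __init__(self, restart_cooling: Callable[[], bool]) -> None:
        # restart_cooling is an assumed hook: it tries to start the chillers
        # and returns True if they came back up.
        self._restart_cooling = restart_cooling
        self.cooling_on = True
        self.alerts: List[str] = []

    def on_power_source_change(self, source: PowerSource) -> None:
        if source is PowerSource.UPS:
            # Shed the cooling load while the UPS carries the servers.
            self.cooling_on = False
        elif not self.cooling_on:
            # Grid or generator has taken over. A real controller would restart
            # a few seconds later; this sketch attempts the restart immediately.
            if self._restart_cooling():
                self.cooling_on = True
            else:
                # Failure mode seen on September 2: cooling stays off.
                self.alerts.append("cooling restart failed; manual intervention required")


# Normal case: cooling comes back once grid power returns.
normal = CoolingController(restart_cooling=lambda: True)
normal.on_power_source_change(PowerSource.UPS)
normal.on_power_source_change(PowerSource.GRID)
print(normal.cooling_on)  # True

# Incident case: the restart attempt fails (for example, a control bus fault).
faulty = CoolingController(restart_cooling=lambda: False)
faulty.on_power_source_change(PowerSource.UPS)
faulty.on_power_source_change(PowerSource.GRID)
print(faulty.cooling_on, faulty.alerts)
```

Because the handler only raises an alert when the restart fails, everything after that point depends on people noticing the alert and restoring cooling by other means.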

Why Did the Nuremberg Data Center Switch to UPS as Emergency Power Supply?

There was a voltage fluctuation on the local electricity grid. This triggered our systems to switch to UPS to keep the power supply continuous and to shut down the cooling system temporarily. The UPS provided power for 3 seconds, after which the primary power supply took over again.

Why Was There a Voltage Fluctuation on the Local Electricity Grid?

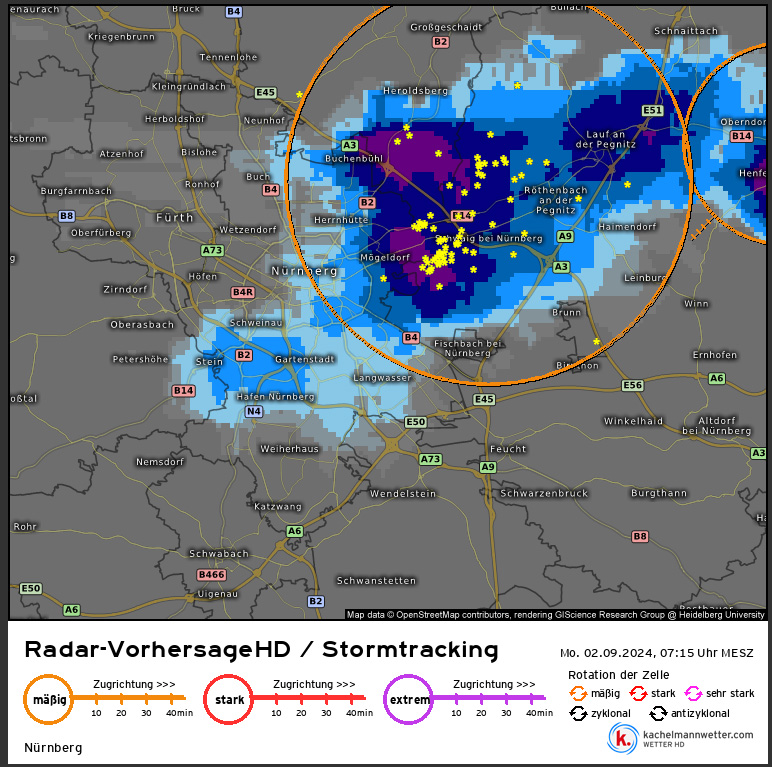

The voltage fluctuation on the local electricity grid was caused by a severe thunderstorm with lightning strikes in the whole Franconia area, particularly around Nuremberg.

Weather report / Franconia Map with Storm Overview

Our data center is equipped with lightning rods to protect it from the effects of a direct lightning strike. However, lightning rods cannot mitigate the effects of strikes that hit other structures, such as transmission lines, which may be located several kilometers away from our building.

Why Didn’t the Cooling System Turn Back on Automatically?

The cooling system did not turn back on automatically because of a malfunction in its control bus. Our manual attempts to restart the cooling system were also unsuccessful. Cooling was reestablished only after an authorized technician from the company that supplied the chiller units performed a hard reboot.

Exact Timeline of the Event

The following is a timeline of the incident (all times CEST), detailing our response and the key actions taken to restore services:

Sep 2, 2024 07:14: Power fluctuations detected; power supply automatically switches to UPS. Servers continue to run; cooling systems shut down.

Sep 2, 2024 07:14: Grid power supply restored after 3 seconds; the cooling system does not restart.

Sep 2, 2024 07:14: Monitoring alerts about the switch to UPS and back, and about the chillers being down, are sent to data center staff. The incident process starts. Temperature begins to rise.

Sep 2, 2024 07:33: Monitoring alert that the first server room has reached critical temperature. Staff assess the situation.

Sep 2, 2024 08:13: First Contabo systems shut down.

Sep 2, 2024 08:41: The on-site team determines that the cooling systems cannot be turned on manually. A technician from the cooling system vendor is summoned shortly afterwards but is not immediately available, having already been dispatched to other businesses in the area affected by similar issues.

Sep 2, 2024 11:30–12:08: The temperature exceeds the safe threshold in one server room after another; servers are shut down to prevent damage and data loss.

Sep 2, 2024 12:55: The rain stops, allowing smoke protection flaps to be opened for ventilation. Industrial fans are activated to move hot air out faster. Temperature starts to fall.

Sep 2, 2024 13:55: Core network links and components are restored.

Sep 2, 2024 14:25: Cooling system restarts following a third-party technician’s visit.

Sep 2, 2024 15:05: Servers gradually come back online as the temperature continues to decrease.

Sep 2, 2024 15:30: Object Storage cluster is back online.

Sep 2, 2024 15:42: Contabo systems, including the Customer Control Panel, are fully restored.

Sep 2, 2024 18:00: 95% of servers are back online.

Sep 3, 2024 19:55: Incident resolved. Individual reports of issues with virtual and dedicated servers are handled by the technical support team as usual.
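To put the timeline in perspective, the sketch below computes elapsed times between selected entries. The timestamps are taken from the timeline (all CEST, so no time zone conversion is needed); the event labels are illustrative names, not terms used by Contabo.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M"

# Timestamps from the incident timeline (CEST); keys are illustrative labels.
EVENTS = {
    "power_fluctuation": "2024-09-02 07:14",
    "first_room_critical": "2024-09-02 07:33",
    "cooling_restarted": "2024-09-02 14:25",
    "control_panel_restored": "2024-09-02 15:42",
    "incident_resolved": "2024-09-03 19:55",
}


def elapsed(start: str, end: str) -> timedelta:
    """Time between two timeline entries."""
    return datetime.strptime(EVENTS[end], FMT) - datetime.strptime(EVENTS[start], FMT)


print(elapsed("power_fluctuation", "first_room_critical"))  # 0:19:00
print(elapsed("power_fluctuation", "cooling_restarted"))    # 7:11:00
print(elapsed("power_fluctuation", "incident_resolved"))    # 1 day, 12:41:00
```

By this count, the first server room reached critical temperature 19 minutes after the power event, and the data center was without mechanical cooling for a little over seven hours.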

What About Redundancy?

All critical systems in the Nuremberg data center were designed with N+1 redundancy. This means that if, for example, the data center needs 2 chiller units for air conditioning (N = 2), 3 units are installed instead (N + 1 = 2 + 1 = 3). The same principle applies to other critical systems, such as power and internet connectivity. The switch to UPS power described above was an example of power supply redundancy in action. Unfortunately, the redundancies in place could not prevent the outage.
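The N+1 arithmetic can be made concrete with a small sketch. The function names are hypothetical; the point it illustrates is general: N+1 covers the independent failure of any single unit, but not an event that takes every unit offline at the same time, as happened when the whole cooling system shut down and did not restart.

```python
def units_installed(n_required: int, spares: int = 1) -> int:
    """N+1 sizing: install spare units beyond what the load requires (one by default)."""
    return n_required + spares


def has_enough_capacity(installed: int, failed: int, n_required: int) -> bool:
    """True if the units still running can carry the full load."""
    return installed - failed >= n_required


N = 2  # chiller units needed, as in the example above
installed = units_installed(N)  # 3
print(has_enough_capacity(installed, failed=1, n_required=N))          # True: one failure is covered
print(has_enough_capacity(installed, failed=installed, n_required=N))  # False: all units down at once
```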

What About Fallback for Contabo Systems (Such as the Customer Control Panel or Support Channels)?

We do have a business continuity process for Contabo systems (such as the Customer Control Panel and support channels), and it was activated as planned. However, the systems in Nuremberg were restored before we switched to the alternative locations.

Lessons and Remediation

First, we decided to migrate all customers from Nuremberg to our newly built Hub Europe data center. This facility is designed to reach the 99.982% availability required for Tier 3 data centers and provides more robust fail-safes against incidents like the one described above. The process has already started, and affected customers are being contacted directly.
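For scale, an availability target translates directly into a yearly downtime budget. The sketch below assumes a 365-day year (8,760 hours); at 99.982% availability, the allowance works out to roughly 95 minutes, or about 1.6 hours, per year.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year (8,760 hours)


def max_downtime_minutes_per_year(availability_pct: float) -> float:
    """Convert an availability percentage into the yearly downtime it allows."""
    unavailable_fraction = 1 - availability_pct / 100
    return unavailable_fraction * HOURS_PER_YEAR * 60


print(round(max_downtime_minutes_per_year(99.982), 1))  # 94.6
```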

Secondly, we will revisit our disaster recovery plans and fallback procedures for Contabo systems, such as the Customer Control Panel and support channels, to ensure their availability even during incidents.

Thirdly, we are revising our incident response process to resolve incidents faster and keep customers better informed while they are ongoing. We recognize that our partners rely on us, and we are actively working to embody the German quality that is at our core.

Once again, we thank our customers for their patience and understanding during this event, and we assure them that we are committed to preventing similar issues in the future. We will also communicate more openly about the actions we take to safeguard the uptime of your servers in all our data centers around the globe.