You’ve already seen how workflow automation can connect your apps and handle repetitive, rule-based tasks. But what if your automations could do more than just follow instructions? What if they could understand context, generate creative content, summarize complex information, and even make decisions based on unstructured data? This is where the real power of n8n AI comes into play. By integrating Large Language Models (LLMs) directly into your workflows, you transform n8n from a simple task runner into an intelligent automation engine.

Pairing n8n and OpenAI or other models with your workflows opens up a new world of possibilities. Suddenly, you can build an AI automation workflow that doesn’t just process data but enriches and understands it. This guide is for those ready to take the next step. We’ll explore how to use any LLM with n8n, from building a simple content generator to conceptualizing sophisticated AI agent automation. You’ll learn the practical skills to create automations that think, reason, and create, all within the flexible environment of n8n.

The Power of AI Automation with n8n: From Simple Tasks to Complex Agents

At its core, the power of n8n AI lies in its ability to move beyond simple, deterministic logic. A traditional workflow operates on clear rules: “If a new email has the subject ‘Invoice,’ save the attachment to the ‘Invoices’ folder.” It’s predictable and efficient for structured tasks. An AI automation workflow, however, operates on understanding and intent: “When a new email arrives from a client, determine if it’s a complaint, a question, or a compliment, and route it to the appropriate team.” This requires interpreting free-form language, which fixed rules handle poorly and LLMs handle well.

What Can You Achieve with AI Automation?

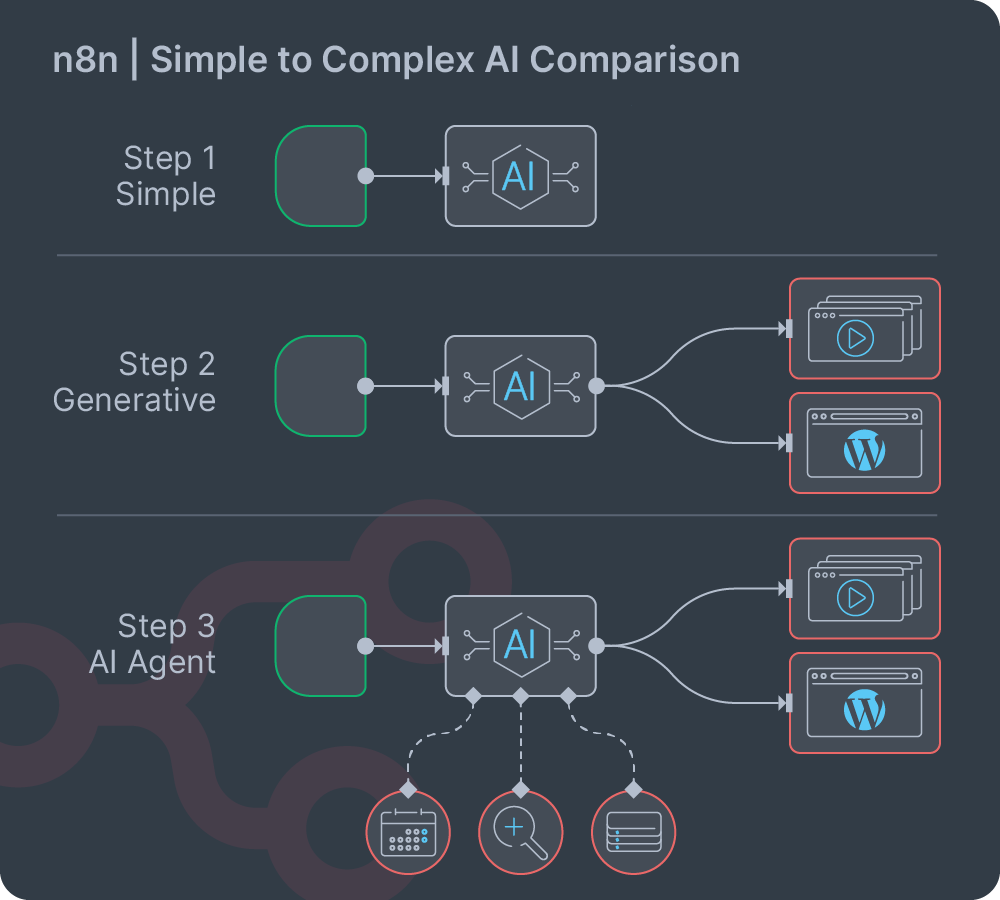

This capability creates a spectrum of automation possibilities, from simple enhancements to fully autonomous agents:

- Simple AI Enhancement: This is the most common starting point. You can add an AI node to an existing workflow to perform a single, specific task. For example, after scraping a product review, you could use an LLM to extract the sentiment (positive, negative, neutral) and the key topics mentioned.

- Generative Tasks: Instead of just analyzing data, your workflows can create new content. You could build a workflow that takes a product name and a few key features as input and then generates a full marketing description, a social media post, and a short video script.

- Complex Reasoning with Agents: This is the most advanced application. You can design n8n agents – workflows that can perform multi-step tasks with a degree of autonomy. For instance, an AI agent could be tasked with “planning a weekend trip to Paris for two people on a $500 budget.” It would need to search for flights, find hotels, check reviews, and present a viable itinerary, all without being given explicit, step-by-step instructions. This agentic behavior is the frontier of AI automation.

By integrating AI, you are no longer limited by the structured data and predefined rules of your applications. You can work with the messy, unstructured world of human language and complex documents. This unlocks a new level of efficiency and capability.

Key n8n AI Capabilities: RAG, Long-Term Memory, and Multi-Agent Systems

As you move beyond simple AI tasks, you’ll encounter advanced concepts that unlock the true potential of intelligent automation. In n8n, these are not single-click solutions but powerful systems you construct by combining specific nodes.

n8n Retrieval-Augmented Generation (RAG)

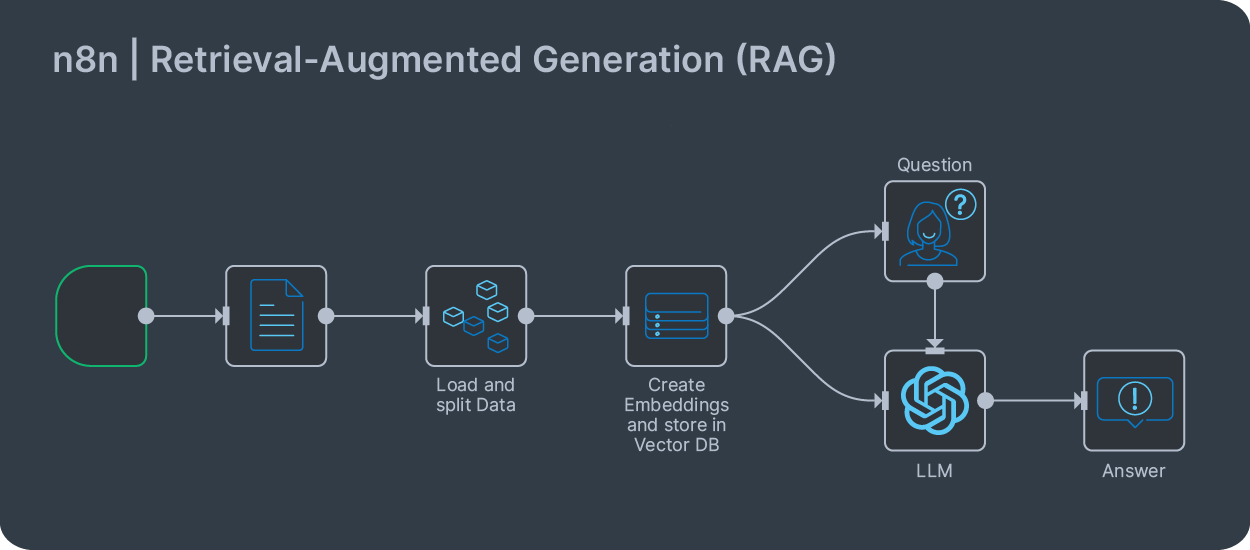

A standard LLM can’t answer questions about your private company data. n8n retrieval-augmented generation (RAG) solves this by giving the LLM access to your information. You build a RAG pipeline by chaining several nodes together:

- Load Data: You start by ingesting your source material, for example with a node such as “PDF4me” or “ScrapeNinja”, and pass it to a document loader node such as the “Default Data Loader”.

- Split Data: You then connect a text splitter, such as the “Recursive Character Text Splitter” node, to break the text into smaller, manageable chunks.

- Create and Store Embeddings: These chunks are passed to a Vector Store node like “Pinecone”. Together with an embeddings model connected to it, this node converts the chunks to vectors and upserts them into your database.

- Query Data: To ask a question, you use a Chain node, such as the “Question and Answer Chain” node. This node takes your query, finds the most relevant chunks in the vector store, and passes both the query and the chunks to your chosen LLM to generate a fact-based answer.
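To make the four steps above more concrete, here is a minimal Python sketch of the same idea outside n8n. It is not how the n8n nodes are implemented: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector store like Pinecone, and all function names and sample documents are made up for illustration.

```python
import math
import re
from collections import Counter


def split_into_chunks(text: str, max_words: int = 40) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (an assumption, not a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one LLM prompt."""
    context = "\n---\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical private documents (load + split + store).
documents = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support team is available on weekdays from 9:00 to 17:00.",
    "Enterprise plans include single sign-on and audit logs.",
]
store = [(chunk, embed(chunk)) for doc in documents for chunk in split_into_chunks(doc)]

# Query: find the most relevant chunk and build the prompt for the LLM.
top_chunks = retrieve("How long do refunds take?", store, k=1)
prompt = build_prompt("How long do refunds take?", top_chunks)
```

The prompt that reaches the model contains both the question and the retrieved chunk, which is what lets the answer be grounded in your own data.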

Building Smarter n8n Agents with Long-Term Memory

For an AI to handle a real conversation, it needs “memory.” You can design stateful n8n agents that remember past interactions. While short-term memory is often built-in, true long-term memory is achieved by storing conversation history in an external database.

Here’s a simplified view of how it works: Before calling the “AI Agent” node, you would use a database node (like “Airtable” or “Postgres”) to retrieve past conversation summaries linked to a user ID. You would then inject this retrieved “memory” into the prompt you send to the agent, giving it the full context it needs to provide a coherent, personalized response.
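The retrieve-then-inject pattern can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite table stands in for “Airtable” or “Postgres”, and the table layout, function names, and sample summaries are all hypothetical.

```python
import sqlite3


def open_memory_store() -> sqlite3.Connection:
    """Create an in-memory table of conversation summaries (stand-in for a real database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE memory (id INTEGER PRIMARY KEY AUTOINCREMENT, user_id TEXT, summary TEXT)"
    )
    return conn


def save_summary(conn: sqlite3.Connection, user_id: str, summary: str) -> None:
    """Store one conversation summary for a user."""
    conn.execute("INSERT INTO memory (user_id, summary) VALUES (?, ?)", (user_id, summary))
    conn.commit()


def load_summaries(conn: sqlite3.Connection, user_id: str, limit: int = 5) -> list[str]:
    """Return the most recent summaries for a user, oldest first."""
    rows = conn.execute(
        "SELECT summary FROM memory WHERE user_id = ? ORDER BY id DESC LIMIT ?",
        (user_id, limit),
    ).fetchall()
    return [row[0] for row in reversed(rows)]


def build_agent_prompt(summaries: list[str], message: str) -> str:
    """Inject the retrieved memory into the prompt sent to the agent."""
    memory = "\n".join(f"- {s}" for s in summaries) or "- (no previous conversations)"
    return f"Known history for this user:\n{memory}\n\nNew message: {message}"


conn = open_memory_store()
save_summary(conn, "user-42", "Asked about upgrading to the annual plan.")
save_summary(conn, "user-42", "Reported a failed payment on the last invoice.")
save_summary(conn, "user-7", "Requested a demo.")

prompt = build_agent_prompt(load_summaries(conn, "user-42"), "Is my payment fixed yet?")
```

Keying the lookup on the user ID is what keeps one user's history out of another user's prompt.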

The Power of Agents and LangChain

Many of n8n’s most powerful AI capabilities are built using the LangChain framework. LangChain is an open-source tool that simplifies the process of creating complex applications with language models. It provides a standard way to chain together components like LLMs, memory, and tools, allowing them to work together to solve complex problems.

The concept of n8n LangChain integration means that you don’t need to be a Python developer to use this powerful framework. n8n has done the hard work of building this logic into its visual interface. When you use the “AI Agent” node, you are interacting with a user-friendly layer built on top of LangChain’s core principles.

This allows you to create powerful n8n agents by simply connecting nodes. For example, you can give your AI Agent a “tool” by connecting the “SerpApi” node to it. When the agent receives a query it can’t answer from its base knowledge, it can independently decide to use the search tool to find current information and then use that new knowledge to formulate its response. This powerful reasoning and decision-making capability is the essence of what the LangChain framework enables within n8n.
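The decide-then-act loop described above can be sketched as follows. This is a toy illustration, not n8n's or LangChain's actual implementation: `fake_model` and `fake_search` are hypothetical stand-ins for an LLM and a search tool, and the `{"action": ..., "input": ...}` decision format is an assumption made for this example.

```python
from typing import Callable


def fake_search(query: str) -> str:
    """Hypothetical stand-in for a search tool such as SerpApi."""
    return f"(search results for: {query})"


def fake_model(question: str, observations: list[str]) -> dict:
    """Hypothetical stand-in for an LLM: requests one search, then answers."""
    if not observations:
        return {"action": "search", "input": question}
    return {"action": "final", "input": f"Based on {observations[-1]}, here is the answer."}


def run_agent(
    question: str,
    model: Callable[[str, list[str]], dict],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 3,
) -> str:
    """Let the model choose between calling a tool and giving a final answer."""
    observations: list[str] = []
    for _ in range(max_steps):
        decision = model(question, observations)
        if decision["action"] == "final":
            return decision["input"]
        tool = tools[decision["action"]]               # the model picked a tool by name
        observations.append(tool(decision["input"]))   # feed the result back to the model
    return "Stopped: step limit reached."


answer = run_agent("What is the latest n8n release?", fake_model, {"search": fake_search})
```

The step limit is a design choice worth keeping in any real agent: it prevents a model that keeps requesting tools from looping forever.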

Integrating AI Models: Connecting n8n to OpenAI, Gemini, and Other LLMs

n8n’s flexibility allows you to connect to virtually any AI model that has an API. Whether you want to use a major commercial model or a self-hosted open-source alternative, the process is straightforward.

Connecting to OpenAI

The dedicated n8n OpenAI node makes integration incredibly simple.

- Add the Node: In your workflow, add the “OpenAI” node. Select the option “Message a model” from the list.

- Add Credentials: You’ll need to create a new credential by providing your OpenAI API key.

- Configure the Node:

  - Resource: Choose “Text” for conversational models.

  - Model: Select the specific model you want to use from the dropdown (e.g., `gpt-4-turbo`, `gpt-3.5-turbo`).

  - Prompt: Enter the prompt you want to send to the model. You can insert dynamic data from previous nodes here.

Connecting to Google Gemini

The process for the n8n Google Gemini node is very similar.

- Add the Node: Add the “Google Gemini” node to your workflow.

- Add Credentials: Create a new credential using your Google Gemini API key.

- Configure the Node: Select the model (e.g., `gemini-pro`) and input your prompt. The interface is designed to be consistent and intuitive across different AI model integrations.

Connecting to Any Other LLM

What if you want to use a smaller, open-source model you’re running yourself, or another commercial provider that doesn’t have a dedicated node? You can connect any n8n LLM using the generic “HTTP Request” node.

- Add the Node: Add the “HTTP Request” node.

- Configure the Request:

  - Method: Set to `POST`.

  - URL: Enter the API endpoint for the LLM you are calling.

  - Authentication: If your API requires an authentication header (like a Bearer Token), add it under “Authentication” > “Header Auth”.

  - Body: Turn on the “Send Body” option. In the “Body Content Type” section, select “JSON”. This is where you will construct the request payload, which typically includes the model name and the prompt. The exact structure will depend on the API’s documentation. For example:

        {
          "model": "mistral-7b-instruct",
          "messages": [
            {
              "role": "user",
              "content": "{{ $json.prompt }}"
            }
          ]
        }

This method gives you the ultimate flexibility to integrate any AI model into your n8n workflows.
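If it helps to see the same request outside the n8n interface, here is a rough Python equivalent of what the “HTTP Request” node is being configured to send. The endpoint URL and API key are placeholders, and the chat-style payload is an assumption that mirrors the JSON example above; your provider's documentation decides the real structure.

```python
import json
import urllib.request


def build_chat_request(url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request with a JSON body and a Bearer token."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    "https://llm.example.com/v1/chat/completions",  # hypothetical endpoint
    "YOUR_API_KEY",                                  # placeholder credential
    "mistral-7b-instruct",
    "Summarize this ticket in one sentence.",
)
# Sending is left out so the sketch runs offline:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
```

Each argument maps onto one field of the node: method, URL, authentication header, and JSON body.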

Practical AI Workflow Examples: Content Generation, Data Analysis, and More

Let’s move from theory to practice. Here are a few real-world examples of AI workflows you can build today.

Workflow 1: Automated Content Generation

This n8n content generation workflow can take a simple topic and turn it into a structured draft, saving hours of initial writing time.

- Trigger: Start with a “Manual” trigger for testing. The input could be a JSON object with a topic: `{"topic": "The Benefits of Self-Hosting a VPN"}`, or even a simple “Chat Trigger” node.

- Generate Outline: Pass the topic to an OpenAI node with a prompt like: “Create a detailed blog post outline for the topic: `{{ $json.topic }}`. The outline should include an introduction, 3-4 main sections with sub-points, and a conclusion. Respond in JSON format.”

- Generate Section Content: Use a “Split Out” node to process each main section from the outline. For each section, call the OpenAI node again with a prompt like: “Write a 300-word section for a blog post based on the following outline point: `{{ $json.section_title }}`. Maintain a helpful and direct tone.”

- Assemble Draft: Combine the generated content from all sections using a “Merge” node.

- Save Draft: Use the “Google Docs” node to create a new document in your Google Drive and populate it with the fully assembled draft.
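The data flow of this workflow (outline, split, per-section prompt, merge) can be sketched in Python as below. The model calls themselves are left out, and the outline shape `{"sections": [{"title": ...}]}` is an assumption for illustration; the real shape depends on what your prompt asks the model to return.

```python
import json


def build_outline_prompt(topic: str) -> str:
    """Prompt for the first model call (outline generation)."""
    return f"Create a detailed blog post outline for the topic: {topic}. Respond in JSON format."


def section_titles(outline_json: str) -> list[str]:
    """Split the outline into one item per section, like the "Split Out" step."""
    outline = json.loads(outline_json)  # assumed shape: {"sections": [{"title": "..."}]}
    return [section["title"] for section in outline["sections"]]


def build_section_prompt(title: str) -> str:
    """Prompt for the per-section model call."""
    return (
        "Write a 300-word section for a blog post based on the following "
        f"outline point: {title}. Maintain a helpful and direct tone."
    )


def assemble_draft(topic: str, sections: list[tuple[str, str]]) -> str:
    """Join the topic and all (title, text) pairs into one draft, like the "Merge" step."""
    parts = [topic]
    for title, text in sections:
        parts.append(f"{title}\n{text}")
    return "\n\n".join(parts)


# Hypothetical model output for the outline step.
sample_outline = '{"sections": [{"title": "Why Self-Host?"}, {"title": "Setup Steps"}]}'
titles = section_titles(sample_outline)
prompts = [build_section_prompt(t) for t in titles]
# Placeholder text stands in for the model's reply to each section prompt.
draft = assemble_draft("The Benefits of Self-Hosting a VPN", [(t, f"[text for {t}]") for t in titles])
```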

Workflow 2: Intelligent Data Analysis and Summarization

This n8n data analysis workflow can analyze raw data and provide you with human-readable insights.

- Trigger: Use the “Google Sheets” trigger to run the workflow whenever a new row is added to a specific sheet containing customer feedback.

- Analyze Feedback: Pass the feedback text to a Gemini node with a prompt: “Analyze the following customer feedback: `{{ $json.feedback }}`. Extract the sentiment (Positive, Negative, or Neutral), identify the key topics mentioned, and provide a one-sentence summary. Respond in JSON format with keys: `sentiment`, `topics`, `summary`.”

- Enrich Sheet: Use the “Google Sheets” node again, this time in “Update” mode, to write the sentiment, topics, and summary back into the corresponding row in your sheet.

- Alert for Negative Feedback: Add an “IF” node to check if the sentiment is “Negative”. If it is, use the “Slack” node to send an immediate alert to the customer support channel with a link to the feedback row.

This is a powerful n8n AI workflow that turns a simple spreadsheet into an intelligent feedback analysis system.
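Model output is not guaranteed to match the format you asked for, so it can be worth validating the reply before writing it back to the sheet or branching on it. Here is a small Python sketch of that check, assuming the model returns the three keys requested in the prompt; the function names and sample reply are hypothetical.

```python
import json

ALLOWED_SENTIMENTS = {"Positive", "Negative", "Neutral"}


def parse_analysis(raw_reply: str) -> dict:
    """Parse and normalize the model's JSON reply; raise ValueError if it is unusable."""
    data = json.loads(raw_reply)
    sentiment = str(data.get("sentiment", "")).strip().capitalize()
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {sentiment!r}")
    topics = data.get("topics") or []
    if isinstance(topics, str):  # tolerate "billing, login" as well as a JSON list
        topics = [t.strip() for t in topics.split(",") if t.strip()]
    return {
        "sentiment": sentiment,
        "topics": list(topics),
        "summary": str(data.get("summary", "")).strip(),
    }


def needs_alert(analysis: dict) -> bool:
    """Mirror of the "IF" node: alert only on negative feedback."""
    return analysis["sentiment"] == "Negative"


reply = '{"sentiment": "negative", "topics": "billing, login", "summary": " Customer cannot log in. "}'
analysis = parse_analysis(reply)
alert = needs_alert(analysis)
```

Normalizing the sentiment's capitalization before the comparison avoids a silent miss when the model answers "negative" instead of "Negative".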

Building an AI-Powered Web Scraping and Data Pipeline

Let’s combine several of these concepts into a single, powerful project: a pipeline that monitors websites for new information, uses AI to understand it, and then stores it as structured data.

The goal is to automate the process of market research by scraping news articles related to a specific industry and building a clean, searchable database of insights.

Workflow Outline

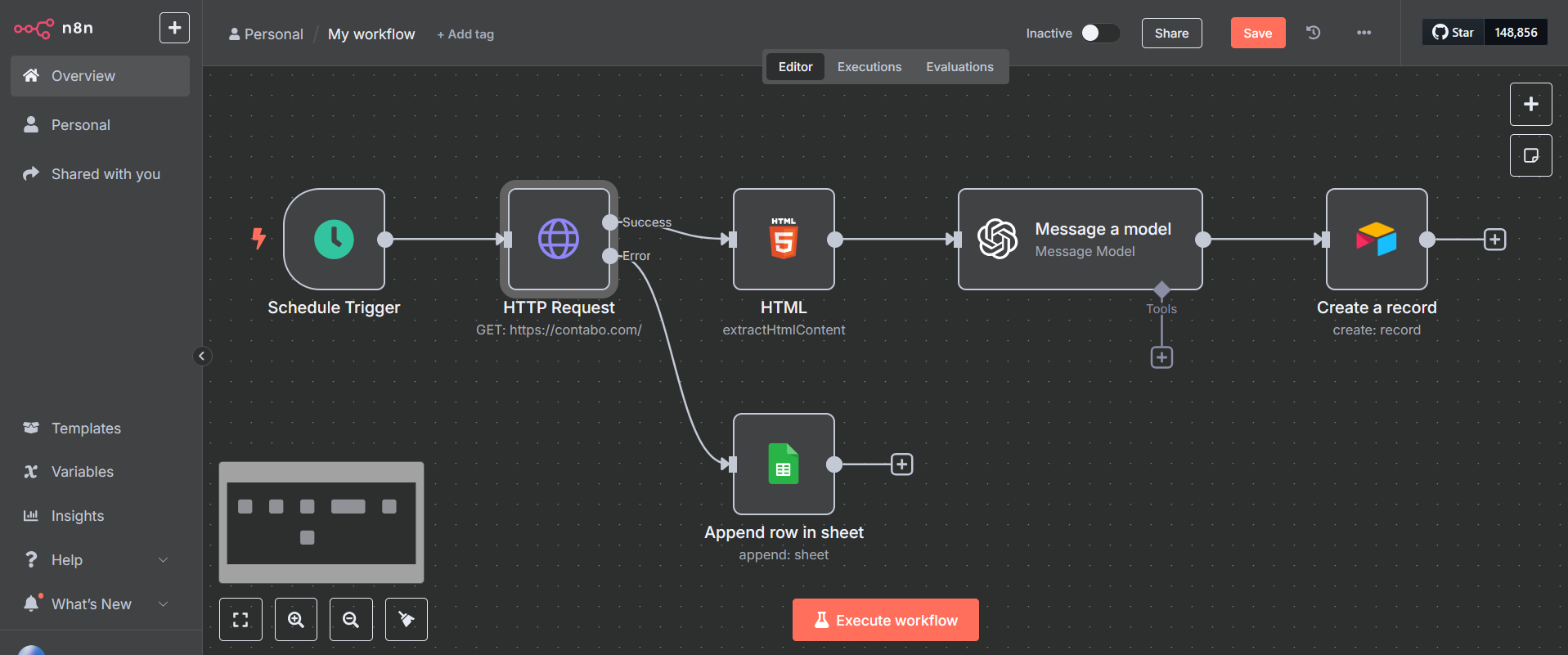

- Trigger and Fetch URLs: Start with the “Schedule Trigger” node to run the workflow automatically. You can configure this node to run at any interval, for example, once a day at 9:00 AM. This trigger will then feed into a step that fetches a list of target news sites from a Google Sheet or an Airtable base.

- Scrape the Content: Native n8n web scraping is typically a two-step process. First, use the “HTTP Request” node to make a `GET` request to the article URL. This will fetch the raw HTML of the page. Next, pass that HTML to the “HTML” node. Inside this node, set the Operation to “Extract HTML Content”. This powerful operation lets you use CSS selectors (like `h1`, `.article-body p`, or `#main-content`) to pull out the exact pieces of content you need, such as the title and body text.

  Note: You could also choose from a variety of third-party nodes for web scraping, each with their own functionality. There’s often more than one way to get things done with n8n.

- Process with AI for Structuring: Pass the extracted text to an n8n AI node (like OpenAI). This is where the real intelligence happens. Use a carefully crafted prompt to ask the AI to act as a data analyst:

  “From the following article text, extract the following information in JSON format: `company_names_mentioned`, `key_technologies_discussed`, `publication_date`, and a `200-word_summary`. If a piece of information is not present, return null for that key.”

- Store the Structured Data: The AI node outputs clean, structured JSON, ready to be stored. To do this, add a database node like the “Airtable” node.

  - Configure the Node: Select your Airtable credentials and set the Operation to “Create”. Choose the Base and Table where you want to store the results.

  - Map the Fields: The node will display the fields from your Airtable table. Now, you map the data from the AI’s output to these fields. For a field in Airtable named “Companies,” you would add the `company_names_mentioned` value from the AI node’s output. It will look something like this: `{{ $json.company_names_mentioned }}`. Repeat this for all the data you extracted.

- Verification and Error Handling (Optional): Workflows can fail, especially when relying on external websites and APIs. It’s important to build in resilience.

  - Identify Failure Points: The “HTTP Request” and the “AI” nodes are the most likely to fail (e.g., a website is down, an API key is invalid).

  - Implement Inline Error Logging: Click on the “HTTP Request” node, go to the Settings tab, and change the On Error option to “Continue (using error output)”. The node will now have two output connectors: one for success (top) and one for error (bottom).

  - Create an Error Path: Connect the bottom “error” output to a “Google Sheets” node. Configure this node to “Append” a new row to a log sheet. In the fields, you can map the URL that failed and the specific error message, which is available in the expression editor under `$json.error.message`. This creates a simple but effective log of any URLs that couldn’t be processed, so you can review them later without the entire workflow stopping. You can apply this same pattern to any node that might fail.
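The two-output error pattern from the last step is easy to picture in code. The Python sketch below is only an analogy for what the node setting does: `fake_fetch` is a hypothetical stand-in for the “HTTP Request” node, and the error item's shape simply mimics the `error.message` field mentioned above.

```python
from typing import Callable


def run_with_error_output(urls: list[str], process: Callable[[str], dict]) -> tuple[list[dict], list[dict]]:
    """Process every URL; route failures to a separate error list instead of stopping."""
    successes: list[dict] = []
    errors: list[dict] = []
    for url in urls:
        try:
            successes.append(process(url))
        except Exception as exc:  # broad on purpose: any failure goes to the error path
            errors.append({"url": url, "error": {"message": str(exc)}})
    return successes, errors


def fake_fetch(url: str) -> dict:
    """Hypothetical fetch step that fails for one site."""
    if "down" in url:
        raise ConnectionError("site unreachable")
    return {"url": url, "html": "<h1>ok</h1>"}


ok, failed = run_with_error_output(
    ["https://news.example.com/a", "https://down.example.com/b", "https://news.example.com/c"],
    fake_fetch,
)
# Each entry in `failed` is what you would append as a row in the log sheet.
```

The remaining URLs are still processed after the failure, which is the whole point of continuing on the error output.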

This workflow is a perfect example of how n8n can orchestrate a complex process, using AI to bridge the gap between unstructured web content and a structured, valuable dataset.
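For the scraping step in the outline above, the following Python sketch shows roughly what extracting `h1` and `.article-body p` means. It uses only the standard library's `html.parser` and is a simplified stand-in, not the “HTML” node's implementation; the class name and the sample page are invented for illustration.

```python
from html.parser import HTMLParser

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags with no closing tag


class ArticleExtractor(HTMLParser):
    """Collect the <h1> text and the text of <p> tags inside class="article-body"."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.paragraphs: list[str] = []
        self._body_depth = 0   # greater than 0 while inside the article-body element
        self._current = None   # "h1" or "p" while text is being captured
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._body_depth:
            self._body_depth += 1
        elif "article-body" in classes:
            self._body_depth = 1
        if tag == "h1" or (tag == "p" and self._body_depth):
            self._current, self._buffer = tag, []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if tag == self._current:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if tag == "h1":
                self.title = text
            else:
                self.paragraphs.append(text)
            self._current = None
        if self._body_depth:
            self._body_depth -= 1


# Hypothetical page, as the "HTTP Request" node might return it.
html = """<html><body><h1>New Chip Announced</h1>
<div class="article-body"><p>Acme unveiled a <b>faster</b> chip.</p><p>It ships in May.</p></div>
<p>Footer text</p></body></html>"""

extractor = ArticleExtractor()
extractor.feed(html)
article_text = "\n".join(extractor.paragraphs)
```

The footer paragraph is skipped because it sits outside the `article-body` element, which is the same filtering the CSS selector gives you in n8n.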

From API to Automation: Creating Custom n8n AI Workflows

While the pre-built nodes offer incredible power, the true potential of n8n is unlocked when you treat it as a programmable automation backend. By interacting with the n8n API, you can create n8n custom workflows that integrate seamlessly with your own applications and services.

Imagine you have a custom-built CRM. You can add a button in your CRM’s interface that, when clicked, triggers an n8n workflow to perform an AI-powered analysis on that customer’s history. Here’s how you would set it up:

- Create a Webhook Trigger: Start your workflow with the “Webhook” node. This will generate a unique URL.

- Build the AI Logic: Build the rest of your workflow to perform the desired task. For example, it could retrieve all past support tickets for a customer ID, pass them to an n8n LLM with a prompt to “summarize the customer’s top 3 issues and overall sentiment,” and then post the summary to a Slack channel.

- Call the Webhook from Your App: In your application’s code, make a `POST` request to the webhook URL whenever the button is clicked. You would pass the customer ID in the body of the request. Here’s an example using `curl`:

      curl -X POST -H "Content-Type: application/json" -d '{"customerId": "12345"}' https://your-n8n-instance.com/webhook/your-webhook-id

This approach allows you to embed AI-powered automations directly into your existing tools, creating a seamless user experience. You are no longer limited by the built-in triggers; your custom applications can now serve as the starting point for any workflow.

n8n AI Workflows FAQ

- What are the costs associated with using AI models in n8n?

  n8n itself does not add any extra charges for using its AI nodes. However, you are responsible for the costs charged by the AI model provider. For example, if you use the OpenAI node, you will be billed by OpenAI based on your API usage (the number of tokens you process).

- Is my data secure when using n8n with AI?

  This is an important consideration. When you use a cloud-based AI model like OpenAI or Gemini, the data you send in your prompt is processed on their servers. While these companies have strict privacy policies, the data does leave your infrastructure. For maximum security and data privacy, the best solution is to use a self-hosted open-source LLM on your own servers and call it using the HTTP Request node.

- Can I use open-source LLMs with n8n?

  Absolutely. If you are running an open-source model locally or on your own server (e.g., using a tool like Ollama), it will typically expose an API endpoint. You can use the “HTTP Request” node in n8n to call this endpoint, giving you the power of modern LLMs with complete data control.