This practical guide shows you how to measure and maximize WireGuard performance on a VPS or dedicated server. You’ll build a reliable baseline vs. tunnel benchmark, fix the high-impact variables (MTU/MSS, parallel streams, GRO/GSO, CPU tuning), and configure a clean, reproducible server interface. We then cover advanced topics such as offload-aware tunneling, parallelism, CPU frequency and NUMA placement, and kernel/driver choices, and wrap up with a concise FAQ. Follow the checklists and commands to turn theory into repeatable results.

Introduction: Unlocking WireGuard Performance

WireGuard performance can be exceptional when properly configured. While WireGuard is fast by design, achieving peak speeds requires attention to a few key factors: CPU characteristics, correct MTU settings, and rigorous benchmarking methods. Many apparent WireGuard performance problems stem from simple mistakes, such as an incorrect MTU (Maximum Transmission Unit) that causes fragmentation, or single-stream tests that never exercise multiple CPU cores.

Always test after each change to ensure improvements and maintain a clear rollback path.

The Science of WireGuard Speed: Why It’s Faster

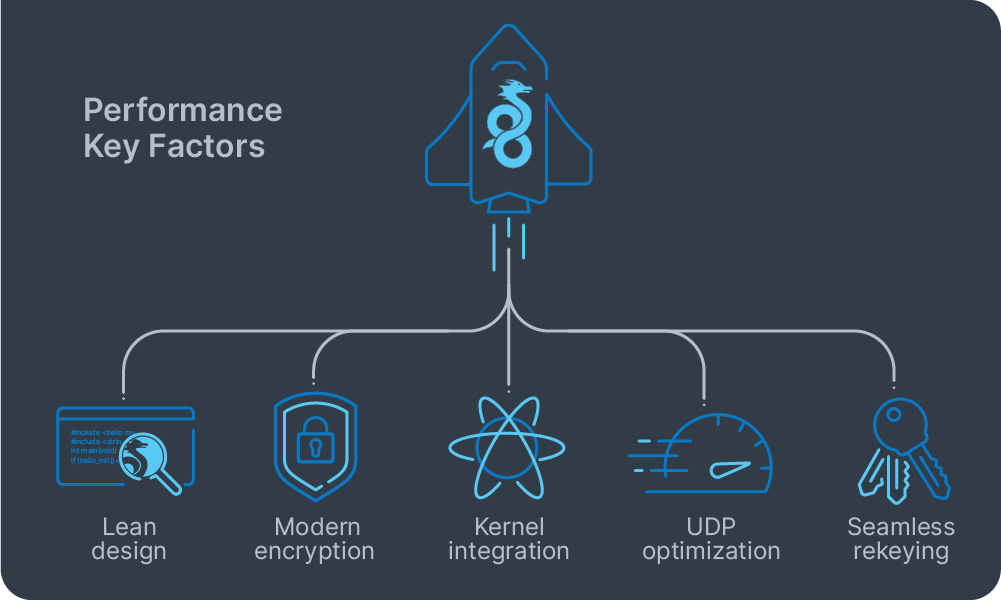

WireGuard’s speed comes from three design principles: simplicity, modern cryptography, and smart placement in the operating system. Together they explain why WireGuard often outperforms traditional VPN protocols such as OpenVPN.

In practice, its performance rests on several key factors:

- Lean design: ~4,000 lines of code (compared to OpenVPN’s tens of thousands), making audits and optimization simpler.

- Modern encryption: Uses ChaCha20-Poly1305, which runs fast in software on virtually any CPU, whereas AES-based ciphers depend on hardware acceleration (such as AES-NI) for good speed.

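- Kernel integration: On Linux, WireGuard runs inside the kernel, so packets are encrypted and forwarded without round trips to userspace and the extra context switches they cost.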

- UDP transport: Running over UDP lets WireGuard benefit from the kernel’s batching features, such as GRO/GSO.

- Seamless rekeying: Session keys rotate automatically through a short handshake roughly every two minutes (or after a message-count limit), without interrupting flows.

With multi-queue network cards, different connection flows can be distributed across multiple CPU cores. This means WireGuard can scale performance by using parallel processing instead of hitting single-core limits.

Performance Verification Commands

Before you start tuning, verify that your system is using the fast path WireGuard was designed for:

Check the kernel version (WireGuard built-in from 5.6+):

uname -r

Verify the WireGuard module:

modinfo wireguard

lsmod | grep wireguard

sudo modprobe wireguard

Check the network offloads (GRO/GSO/TSO):

ethtool -k eth0 | grep -E 'segmentation-offload|receive-offload'

Test multi-core scaling (with iperf3 -s running on the server):

iperf3 -c <server_ip> -P 4

WireGuard Benchmark: Beyond a Simple Speed Test

A reliable WireGuard benchmark requires much more than a quick WireGuard speed test or single-stream measurement. The gold standard is a methodical comparison between no-VPN baseline throughput and WireGuard tunnel performance, using parallel streams to show the protocol’s multi-core scaling, plus measurement of both TCP and UDP behavior. Consistency is critical: test conditions (hosts, routes, MTU, stream count, and duration) must be identical in both scenarios for results to be meaningful.

In most cases, iperf3 is the primary tool, measuring TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) throughput with both single and multiple simultaneous streams (-P). irtt is valuable for in-depth latency and jitter analysis, while simple system counters (like top, mpstat, or ip -s link) help flag CPU or packet drop issues during runs. Optional tools like Netdata can provide a real-time dashboard but aren’t a requirement for sound benchmarking.

Core Testing Methodology

Start by running an iperf3 test between client and server endpoints over the raw network, without WireGuard. Measure both single-stream (the default, equivalent to -P 1) and parallel-stream (-P 4 or -P 8) throughput to reveal whether you’re limited by one flow or can exploit multi-core scaling.

Next, bind the iperf3 server to your tunnel endpoint (e.g., iperf3 -s -B 10.0.0.1 on the server), point the client at that address, and repeat the same tests through the WireGuard tunnel. Always measure in both directions, since tunnel performance can be asymmetric; iperf3’s reverse option (-R) makes the server send instead of the client. For UDP, set an explicit target bandwidth (-u -b <rate>) and watch the reported loss: the highest rate with negligible loss approximates the ceiling throughput.
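The baseline-vs-tunnel procedure above can be sketched as a small dry-run script. It rests on assumptions: 203.0.113.10 stands in for the server’s public IP, 10.0.0.1 for its tunnel address, the duration is 30 seconds, and an iperf3 server is already listening on both addresses. By default it only prints the twelve TCP client commands (1, 4 and 8 streams, each forward and with -R); `iperf_matrix` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print (or run) the baseline-vs-tunnel iperf3 matrix, TCP only.
# Placeholders: BASELINE_IP = server public IP, TUNNEL_IP = server wg0 address.
BASELINE_IP=${BASELINE_IP:-203.0.113.10}
TUNNEL_IP=${TUNNEL_IP:-10.0.0.1}
DURATION=${DURATION:-30}
RUN=${RUN:-0}   # 0 = print the commands only, 1 = execute them in order

iperf_matrix() {
  for target in "$BASELINE_IP" "$TUNNEL_IP"; do
    for streams in 1 4 8; do
      for dir in "" "-R"; do   # "" = client sends, -R = server sends
        cmd="iperf3 -c $target -P $streams -t $DURATION${dir:+ $dir}"
        if [ "$RUN" = 1 ]; then $cmd; else echo "$cmd"; fi
      done
    done
  done
}

iperf_matrix
```

Printing first makes it easy to review the exact commands before spending test time; running `RUN=1` keeps host, duration and stream counts identical across both paths.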

Keep the environment as consistent as possible: use stable, wired hosts (ideally with the same provider and region), set a fixed test duration, and avoid background load. Set the CPU frequency governor to “performance” during your runs for stable results.

Result Interpretation

The most important WireGuard benchmark insight comes from comparing baseline vs. tunnel performance, especially with parallel streams. If WireGuard comes close to the raw baseline on parallel tests, your configuration is likely well matched to your hardware (some overhead from encryption and encapsulation is expected). If there’s a significant drop, check MTU/MSS (fragmentation), offloads (GRO/GSO), and per-core CPU saturation before deeper troubleshooting.
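To make the comparison concrete, two ratios are enough: tunnel throughput as a percentage of the baseline, and parallel-stream throughput relative to a single stream. The helper names below are hypothetical, and the numbers in the example are illustrative, not measurements; feed in the Mbit/s figures iperf3 reports.

```shell
#!/usr/bin/env bash
# Sketch: summarize iperf3 results (throughput in Mbit/s).

# tunnel_efficiency <baseline> <tunnel>: tunnel throughput as % of baseline
tunnel_efficiency() {
  awk -v b="$1" -v t="$2" 'BEGIN { printf "%.1f\n", 100 * t / b }'
}

# stream_scaling <single_stream> <parallel>: parallel / single-stream ratio
stream_scaling() {
  awk -v s="$1" -v p="$2" 'BEGIN { printf "%.2f\n", p / s }'
}

# Illustrative numbers only:
tunnel_efficiency 940 870   # baseline -P 4 vs. tunnel -P 4, prints 92.6
stream_scaling 310 870      # tunnel -P 1 vs. tunnel -P 4, prints 2.81
```

A scaling ratio well above 1 suggests single flows were the ceiling; an efficiency far below 100% on parallel tests points back to MTU/MSS, offloads, or CPU saturation.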

WireGuard Performance Tuning Variables

Good WireGuard performance tuning isn’t about one magic flag; it’s about fixing a few high-leverage variables in the right order. Use this section as a quick checklist to apply after your baseline vs. tunnel comparison.

What to tune (and how)

| Variable | Why It Matters | Quick Test | Fix |
|---|---|---|---|
| MTU/MSS | Wrong MTU causes fragmentation and TCP stalls | Monitor TCP retransmits during tests | Set MTU in [Interface] after a PMTU test; if routing subnets, also clamp TCP MSS to PMTU on egress |
| Parallelism | Single streams hit per-flow limits; parallel streams use multiple cores | Compare iperf3 -P 1 vs. -P 4/8 | Judge capacity with -P 4/8; split bulk transfers |
| GRO/GSO offloads | Batching reduces per-packet CPU cost for UDP tunnels | Check offload status; correlate with CPU usage | Ensure GRO/GSO are enabled with ethtool |
| CPU scaling | Crypto is CPU-bound; frequency dips limit speed | Watch per-core usage during tests | Set the governor to “performance” |
| System bottlenecks | Old kernels/drivers and poor queue distribution limit scaling | Check the kernel version; test whether -P scales | Use modern kernels; prefer multiple flows |
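For the MTU/MSS row, the MSS that fits inside a given tunnel MTU is plain arithmetic: subtract the inner IP header (20 bytes for IPv4, 40 for IPv6) and the 20-byte TCP header. This sketch assumes packets without IP or TCP options; `mss_for_mtu` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: largest TCP MSS that fits in a tunnel MTU (no IP/TCP options assumed).
# MSS = MTU - IP header (20 B IPv4 / 40 B IPv6) - TCP header (20 B)
mss_for_mtu() {
  case "$2" in
    ipv4) echo $(( $1 - 40 )) ;;
    ipv6) echo $(( $1 - 60 )) ;;
    *) echo "usage: mss_for_mtu <mtu> ipv4|ipv6" >&2; return 1 ;;
  esac
}

mss_for_mtu 1420 ipv4   # prints 1380
mss_for_mtu 1420 ipv6   # prints 1360
```

--clamp-mss-to-pmtu derives this value from the route MTU automatically; if you prefer a fixed value, the iptables TCPMSS target also accepts --set-mss with the computed number.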

Essential Commands

MSS Clamping (for subnet routing):

sudo iptables -t mangle -A FORWARD -o wg0 -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu

Verify Offloads:

sudo ethtool -k eth0 | grep -E 'segmentation-offload|receive-offload'

System Buffers:

# /etc/sysctl.d/99-wireguard.conf

net.core.rmem_max = 134217728

net.core.wmem_max = 134217728

net.core.netdev_max_backlog = 250000

Apply the settings with sudo sysctl --system.

Key insight: WireGuard’s cryptography is fixed (there is no cipher selection), and PersistentKeepalive helps NAT traversal, not speed. Most gains come from MTU correctness, offloads, and parallelism; optimize these before advanced kernel tuning.
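As a rough sizing rule, a single TCP flow needs buffers of about the bandwidth-delay product (bandwidth × round-trip time) to keep a path full. This sketch (`bdp_bytes` is a hypothetical name; inputs are Mbit/s and milliseconds) shows that the 134217728-byte (128 MiB) ceiling in the sysctl file above covers roughly 10 Gbit/s at 100 ms RTT.

```shell
#!/usr/bin/env bash
# Sketch: bandwidth-delay product in bytes.
# bytes = (Mbit/s * 1e6 / 8) * (RTT ms / 1000) = Mbit/s * RTT ms * 125
bdp_bytes() {
  echo $(( $1 * $2 * 125 ))
}

bdp_bytes 1000 100    # 1 Gbit/s at 100 ms: 12500000 bytes (~12.5 MB)
bdp_bytes 10000 100   # 10 Gbit/s at 100 ms: 125000000, below 134217728
```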

Configuring the WireGuard Server Interface

To configure your WireGuard server for performance, start with a minimal setup and systematically add optimizations.

Prerequisites and Key Generation

Ensure your Linux server has wireguard-tools installed (kernel 5.6+ preferred) and UDP port 51820 open. Generate the server keys with restrictive permissions, from a root shell, since /etc/wireguard is root-owned:

umask 077

wg genkey | tee /etc/wireguard/privatekey | wg pubkey > /etc/wireguard/publickey

Minimal Server Config

Create /etc/wireguard/wg0.conf:

[Interface]

Address = 10.0.0.1/24

PrivateKey = <SERVER_PRIVATE_KEY>

ListenPort = 51820

# MTU = 1440 (leave unset for auto-selection, or set after testing)

[Peer]

PublicKey = <CLIENT_PUBLIC_KEY>

AllowedIPs = 10.0.0.2/32

# PersistentKeepalive = 25  (usually set on the NATed client; not needed on the server)

Replace the key placeholders with real keys, and choose a private /24 (10.0.0.0/24 here) that doesn’t overlap your existing networks.
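Each additional client gets its own [Peer] section with a unique /32 tunnel address. A minimal sketch that prints such a stanza, assuming the 10.0.0.0/24 scheme above; `peer_stanza`, the key and the address are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a [Peer] stanza for one more client of wg0.
# $1 = client public key, $2 = client tunnel address (given a /32)
peer_stanza() {
  printf '\n[Peer]\nPublicKey = %s\nAllowedIPs = %s/32\n' "$1" "$2"
}

# Usage (as root; key and address are placeholders):
# peer_stanza '<CLIENT2_PUBLIC_KEY>' 10.0.0.3 >> /etc/wireguard/wg0.conf
```

To load the edited file without dropping existing sessions, wg syncconf wg0 <(wg-quick strip wg0) works from bash; alternatively, wg set wg0 peer <key> allowed-ips 10.0.0.3/32 adds a peer live without editing the file.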

Firewall and Network Configuration

Proper firewall configuration is essential for WireGuard performance and connectivity. Set up these rules before starting the service.

Basic firewall setup:

# Allow WireGuard UDP port

sudo iptables -A INPUT -p udp --dport 51820 -j ACCEPT

# For internet access through the tunnel, enable IP forwarding and NAT

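echo 'net.ipv4.ip_forward=1' | sudo tee /etc/sysctl.d/99-ip-forward.conf

sudo sysctl --system

sudo iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

Replace eth0 with your server’s internet-facing interface. The forwarding setting gets its own file because on many Debian and Ubuntu systems /etc/sysctl.d/99-sysctl.conf is a symlink to /etc/sysctl.conf, which tee would overwrite.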
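Re-running these iptables commands (for example, from a provisioning script) appends duplicate rules. A sketch of an idempotent wrapper built on iptables’ -C (check) option, which exits successfully only when an identical rule already exists; `ensure_rule` is a hypothetical helper name and eth0 is the assumed uplink interface.

```shell
#!/usr/bin/env bash
# Sketch: append an iptables rule only if an identical rule is not present.
# Usage: ensure_rule <table> <chain> <rule-spec...>   (run as root)
ensure_rule() {
  local table=$1 chain=$2
  shift 2
  # iptables -C returns 0 when the exact rule already exists
  if ! iptables -t "$table" -C "$chain" "$@" 2>/dev/null; then
    iptables -t "$table" -A "$chain" "$@"
  fi
}

# Examples:
# ensure_rule filter INPUT -p udp --dport 51820 -j ACCEPT
# ensure_rule nat POSTROUTING -o eth0 -j MASQUERADE
```

Rules added this way still vanish on reboot unless you persist them, for example through wg-quick’s PostUp/PostDown hooks.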

NAT and firewall traversal: WireGuard handles NAT automatically, and clients behind NAT need no special configuration to reach the server. If a client must also receive incoming connections through NAT, add PersistentKeepalive = 25 to the [Peer] section for the server in the client’s config; this keeps the NAT mapping alive by sending a keepalive packet every 25 seconds.

Troubleshooting: If WireGuard connects but clients can’t reach the internet, verify IP forwarding is enabled with sysctl net.ipv4.ip_forward and confirm NAT is working with iptables -t nat -L -v.

Enabling and Verifying

Start WireGuard and verify connectivity:

sudo systemctl enable --now wg-quick@wg0

sudo wg show

MTU Optimization

Start simple: Leave MTU unset so wg-quick auto-selects; verify with ip link show wg0.

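If setting manually: From the client, find the largest payload that reaches your server’s public IP unfragmented with ping -M do -s <size> <server_ip> (start with 1472). Add the IP and ICMP headers (28 bytes for IPv4, 48 for IPv6) to get the path MTU, then subtract WireGuard’s encapsulation overhead (60 bytes for IPv4, 80 for IPv6). Set the result as MTU in each peer’s [Interface], restart the interface, and re-run parallel-stream tests (iperf3 -P 4, both directions).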
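The manual procedure can be scripted. This sketch binary-searches the largest ping payload that passes with Don’t Fragment set, then applies the header arithmetic. It assumes Linux iputils ping, a lower bound (1200 bytes here) known to pass, and a WireGuard endpoint in the same IP family you probe; SERVER_IP, `probe_payload`, `max_payload` and `wg_mtu` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: derive a WireGuard MTU from a ping-based path MTU probe.

# Succeeds if a <payload>-byte ping reaches $SERVER_IP with DF set (iputils ping).
probe_payload() {
  ping -c 1 -W 1 -M do -s "$1" "$SERVER_IP" >/dev/null 2>&1
}

# Binary-search the largest payload in [lo, hi]; assumes lo itself passes.
max_payload() {
  lo=$1 hi=$2
  while [ "$lo" -lt "$hi" ]; do
    mid=$(( (lo + hi + 1) / 2 ))
    if probe_payload "$mid"; then lo=$mid; else hi=$((mid - 1)); fi
  done
  echo "$lo"
}

# Path MTU = payload + IP/ICMP headers (28 B IPv4, 48 B IPv6);
# WireGuard overhead = outer IP + UDP + WireGuard header/tag (60 B IPv4, 80 B IPv6).
wg_mtu() {
  case "$2" in
    ipv4) echo $(( $1 + 28 - 60 )) ;;
    ipv6) echo $(( $1 + 48 - 80 )) ;;
    *) echo "usage: wg_mtu <payload> ipv4|ipv6" >&2; return 1 ;;
  esac
}

# Example (on the client; 203.0.113.10 is a placeholder server IP):
# SERVER_IP=203.0.113.10
# wg_mtu "$(max_payload 1200 1472)" ipv4
```

On a plain 1500-byte IPv4 path the largest payload is 1472 bytes, giving an MTU of 1440.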

Final Checks

Confirm handshakes occur with clients and internet routing works as expected. Always benchmark after MTU and firewall changes to measure performance impact. Further information can be found in the official WireGuard Quick Start documentation.

Advanced Performance Concepts

To push WireGuard beyond a basic configuration, the remaining gains come from systematic kernel and hardware optimizations. These are usually smaller and more workload-dependent than the MTU and parallelism fixes, so make incremental changes and measure after each adjustment.

Offload-Aware Tunneling

WireGuard benefits significantly from kernel batching features (GRO/GSO) that reduce per-packet CPU overhead. Ensure these offloads are enabled on your underlying NIC and avoid disabling them except for debugging:

# Verify offloads are active

ethtool -k eth0 | grep -E 'segmentation-offload|receive-offload'

Modern drivers (virtio-net, ENA, gVNIC on VMs; Intel/Broadcom on bare metal) support these features. Traffic shaping can help with latency but typically reduces peak throughput.
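ethtool reports GRO and GSO under their long names (generic-receive-offload, generic-segmentation-offload). A small filter can flag either one when it is off; `gro_gso_off` is a hypothetical helper, and eth0 is the assumed interface name.

```shell
#!/usr/bin/env bash
# Sketch: read `ethtool -k <iface>` output on stdin and print GRO/GSO if disabled.
gro_gso_off() {
  awk -F': ' '
    $1 ~ /^generic-(receive|segmentation)-offload$/ && $2 ~ /^off/ { print $1 }
  '
}

# Usage:
# ethtool -k eth0 | gro_gso_off
# Re-enable (as root): ethtool -K eth0 gro on gso on
```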

Parallelism and CPU Scaling

Single flows often bottleneck on one CPU core or RX queue. Use multiple parallel streams for bulk transfers, and make sure your benchmarks reflect real-world usage patterns. On bare metal servers, proper IRQ distribution and multi-queue NICs matter significantly. Even in VMs with limited RSS (Receive Side Scaling), parallel streams often still improve throughput.

If iperf3 -P 4 barely improves on -P 1, check for individual CPU cores pinned at 100% utilization or a single NIC queue handling all traffic.
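To see whether a single queue handles all traffic, sum the NIC’s interrupt counters per CPU from /proc/interrupts. This sketch assumes the usual layout (a header row of CPU names, then one row per IRQ with the device name in the last column) and queue IRQs named after the interface, such as eth0-TxRx-0; `irq_per_cpu` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: total interrupts per CPU for IRQs whose name matches a pattern.
# Reads /proc/interrupts-style text on stdin; $1 = regex for the device name.
irq_per_cpu() {
  awk -v pat="$1" '
    NR == 1 { ncpu = NF; for (i = 1; i <= NF; i++) name[i] = $i; next }
    $NF ~ pat { for (i = 1; i <= ncpu; i++) sum[i] += $(i + 1) }
    END { for (i = 1; i <= ncpu; i++) printf "%s %d\n", name[i], sum[i] }
  '
}

# Usage: irq_per_cpu eth0 < /proc/interrupts
```

Counters are cumulative since boot, so take a reading before and after an iperf3 run and compare the deltas: if one CPU receives nearly all of them, traffic is not being spread across queues.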

CPU and System Optimization

WireGuard throughput is usually CPU-bound despite the protocol’s efficiency. Keep clock speeds stable during testing with the performance governor:

# Set performance governor

echo performance | sudo tee /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor

On multi-socket NUMA systems, keep the NIC’s interrupts and the processes that send or receive the tunneled traffic on the same socket as the NIC to avoid cross-node penalties.
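To keep the rollback path recommended at the start of this guide, this sketch records each CPU’s current governor before switching to “performance” and restores it afterwards. CPU_ROOT defaults to the standard sysfs path, SAVE_FILE (/tmp/governors.saved) is an assumed location, and writing to sysfs requires root; `set_performance` and `restore_governors` are hypothetical names. On VMs without cpufreq, both functions simply do nothing.

```shell
#!/usr/bin/env bash
# Sketch: switch CPUs to "performance" and remember the previous governors.
CPU_ROOT=${CPU_ROOT:-/sys/devices/system/cpu}
SAVE_FILE=${SAVE_FILE:-/tmp/governors.saved}   # assumed location

set_performance() {
  : > "$SAVE_FILE"
  for f in "$CPU_ROOT"/cpu[0-9]*/cpufreq/scaling_governor; do
    [ -e "$f" ] || continue                     # no cpufreq (common on VMs)
    printf '%s %s\n' "$f" "$(cat "$f")" >> "$SAVE_FILE"
    echo performance > "$f"
  done
}

restore_governors() {
  while read -r f gov; do
    echo "$gov" > "$f"
  done < "$SAVE_FILE"
}

# Usage (as root): set_performance; run benchmarks; restore_governors
```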

Kernel and Driver Improvements

Newer kernels provide better UDP segmentation and receive path optimizations. Keep systems current and prefer:

- In-kernel WireGuard (kernel 5.6+) over userspace implementations

- Multi-queue enabled drivers

- Modern NIC firmware
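To check the first point on a given host, this sketch compares a kernel release string against 5.6, parsing only the major and minor numbers; `kernel_at_least` is a hypothetical name. Because some distributions backport the module to older kernels, modinfo wireguard remains the more direct test.

```shell
#!/usr/bin/env bash
# Sketch: succeed if kernel release $1 is at least version $2.$3.
kernel_at_least() {
  rel=${1%%-*}        # "5.15.0-91-generic" -> "5.15.0"
  major=${rel%%.*}    # -> "5"
  rest=${rel#*.}      # -> "15.0"
  minor=${rest%%.*}   # -> "15"
  [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
}

# Usage:
# kernel_at_least "$(uname -r)" 5 6 && echo "in-tree WireGuard expected"
# modinfo wireguard >/dev/null 2>&1 && echo "wireguard module available"
```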

TCP Performance Inside Tunnels

Most application traffic is TCP carried inside WireGuard’s UDP tunnel. Lower latency and fewer drops help TCP avoid retransmits. Consider BBR congestion control on both endpoints for long-distance or lossy paths:

# Try BBR at runtime first (reverts on reboot); benchmark before and after

sudo sysctl -w net.ipv4.tcp_congestion_control=bbr

# Persist it only if it helps

echo 'net.ipv4.tcp_congestion_control=bbr' | sudo tee /etc/sysctl.d/99-bbr.conf

Bottom line: Once MTU/MSS and benchmarking are optimized, focus on intact offloads, genuine parallelism, stable CPU performance, and modern kernel/driver stacks. Measure each change individually.

WireGuard Performance FAQ

How does WireGuard work?

WireGuard creates encrypted peer-to-peer connections between devices, authenticating each peer by its public key. On Linux it runs inside the kernel for speed and efficiency, and its lean protocol carries less overhead than older VPN protocols.

Does WireGuard use TCP or UDP?

WireGuard uses UDP only. That choice keeps overhead low and lets the kernel apply batching/offloads (GRO/GSO) for high throughput. If a network blocks UDP, you can tunnel WireGuard inside TCP/HTTPS via generic wrappers, but you should expect extra latency and possible slowdowns. For performance, native UDP is strongly preferred.

How do I check if WireGuard is working?

To check if WireGuard is working, run sudo wg show on either device and look for a recent “latest handshake” timestamp along with increasing transfer counters. These confirm that the peers have exchanged keys and are passing traffic. You can also ping the peer’s tunnel IP address, such as 10.0.0.1. For a quick performance check, run iperf3 through the tunnel with multiple parallel streams (e.g., iperf3 -P 4) and compare the results with a test outside the VPN. If you see timeouts or slow speeds, make sure your firewall permits UDP traffic on port 51820, that each peer’s AllowedIPs includes the other side’s tunnel address, and revisit your MTU and MSS settings.
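The handshake check can be automated: wg show <interface> latest-handshakes prints each peer’s public key and the Unix time of its most recent handshake (0 if none). This sketch flags peers whose handshake is missing or older than a threshold; `stale_peers` is a hypothetical name, and the 180-second limit is an assumption based on WireGuard re-handshaking about every two minutes while traffic flows. Idle peers without PersistentKeepalive will also show up, so treat the output as a prompt to investigate rather than proof of a fault.

```shell
#!/usr/bin/env bash
# Sketch: print public keys of peers with no handshake or a stale one.
# stdin: `wg show <iface> latest-handshakes` (key<TAB>unix-time, 0 = never)
# $1 = current Unix time, $2 = maximum age in seconds (assumed 180)
stale_peers() {
  awk -F'\t' -v now="$1" -v max="$2" '
    $2 == 0 || now - $2 > max { print $1 }
  '
}

# Usage:
# sudo wg show wg0 latest-handshakes | stale_peers "$(date +%s)" 180
```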

What MTU should I use with WireGuard?

Start by leaving MTU unset – wg-quick picks a route-aware value. If you set it manually, derive it from measured PMTU (typical starting points: ~1440 for IPv4, ~1420 for IPv6 on 1500-byte links), then re-test.

Why is my WireGuard speed lower than baseline?

Three common culprits: incorrect MTU/MSS (fragmentation/retransmits), disabled GRO/GSO offloads, or a single-flow ceiling (fix with parallel streams, e.g., -P 4/8). Re-run the baseline vs. tunnel matrix from the benchmarking section to pinpoint the bottleneck.