Did you notice that our servers had issues more often in late 2024? We did too! The servers were occasionally freezing, sometimes recovering on their own and sometimes requiring a reboot. The strange part? Our monitoring didn’t show any obvious cause of these freezes.

Typical server issues have obvious causes – a problematic customer application, a faulty network or virtualization setting, or a hardware fault. This was different. The usual diagnostic steps did not indicate a clear root cause. At the same time, our infrastructure stability kept getting worse.

We realized we needed more than just routine troubleshooting. We put together a specialized task force, bringing in not just our own experts but also external kernel developers and specialists from software providers like Virtuozzo. If the obvious answers weren’t right, we’d need to dig deeper – much deeper.

The Hunt for Answers

Some technical problems announce themselves loudly. Others, like this one, hide in plain sight. Our task force began by examining every possible angle – different hardware brands, various data centers, user profiles, workloads. Nothing seemed to point to a clear culprit.

About 20% of our support tickets mentioned server configuration issues, a number that caught our attention. Usually, configuration problems show clear patterns. But these tickets described various symptoms leading to the same result: unresponsive servers needing reboots.

Testing Theories

We tested hypotheses about hardware. Could it be specific to certain server brands? The problems appeared across Lenovo, Dell, and HPE systems alike, and weren’t related to hard drive issues either. Maybe particular data center locations? No pattern there. We compared different OS and virtualization software versions, but found no clear cause there either. Even when we analyzed how different customers used their servers, we couldn’t spot any meaningful patterns.

The first breakthrough came when we changed the Proxmox and Linux kernel versions we use on our vhost servers. Stability improved overall, but the servers then started showing completely different performance issues. Our task force kept digging, examining memory management patterns.

Understanding Memory Management

The Memory Dance

Before continuing with our story, let’s explain what memory management is.

In production environments, memory management is a careful balancing act. The operating system kernel constantly orchestrates a complex dance, moving data between RAM and swap. Memory that applications are actively using stays in fast RAM, while memory that hasn’t been touched for a while moves to slower swap space based on usage patterns.

This coordination happens thousands of times per second, usually without anyone noticing. It’s an industry standard used in cloud computing for decades. This RAM-plus-swap approach handles everything from small WordPress sites to busy databases and beyond.
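To make the RAM-and-swap balance a little more concrete, here is a minimal Python sketch that reads the kind of figures Linux exposes in `/proc/meminfo` and turns them into "how full is RAM" and "how full is swap" fractions. The function names and the sample numbers are illustrative assumptions, not taken from our monitoring stack.

```python
# Illustrative sample in /proc/meminfo format (values are made up).
SAMPLE_MEMINFO = """\
MemTotal:       16000000 kB
MemAvailable:    4000000 kB
SwapTotal:       8000000 kB
SwapFree:        6000000 kB
"""


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field name: numeric value}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def memory_pressure(info: dict[str, int]) -> dict[str, float]:
    """Return the used fraction of RAM and of swap (0.0 to 1.0)."""
    ram_used = 1 - info["MemAvailable"] / info["MemTotal"]
    swap_total = info.get("SwapTotal", 0)
    # A host without swap reports SwapTotal as 0; avoid dividing by zero.
    swap_used = 1 - info["SwapFree"] / swap_total if swap_total else 0.0
    return {"ram_used_fraction": ram_used, "swap_used_fraction": swap_used}


print(memory_pressure(parse_meminfo(SAMPLE_MEMINFO)))
```

On a real Linux host, the same functions could be fed the contents of `/proc/meminfo` instead of the sample text.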

The ZRAM Promise

Enter ZRAM – a standard Linux kernel feature designed to make memory management even more efficient. By compressing data right in RAM itself, it can offer around 25% more effective capacity, with the exact gain depending on how well the data compresses. Think of it like this: while swap is used to keep less-used data outside RAM, ZRAM compresses the data to keep more of it in RAM.
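How much extra capacity compression yields depends on how much RAM is given over to compressed data and how well that data compresses. The following back-of-the-envelope Python sketch shows the arithmetic. The function name, the 64 GiB server, the 25% share, and the 2:1 compression ratio are assumptions chosen for illustration, not our production settings.

```python
def effective_capacity_gib(ram_gib: float, zram_fraction: float,
                           compression_ratio: float) -> float:
    """Estimate how much data fits in RAM when part of it holds compressed pages.

    ram_gib           -- physical RAM in GiB
    zram_fraction     -- share of RAM used to store compressed data (0 to 1)
    compression_ratio -- original size divided by compressed size (2.0 means 2:1)
    """
    if not 0 <= zram_fraction <= 1:
        raise ValueError("zram_fraction must be between 0 and 1")
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    uncompressed_part = ram_gib * (1 - zram_fraction)
    # Each GiB of RAM holding compressed pages stores compression_ratio GiB of data.
    compressed_part = ram_gib * zram_fraction * compression_ratio
    return uncompressed_part + compressed_part


ram = 64.0
capacity = effective_capacity_gib(ram, zram_fraction=0.25, compression_ratio=2.0)
gain_percent = (capacity / ram - 1) * 100
print(f"{capacity:.0f} GiB effective, {gain_percent:.0f}% more than physical RAM")
```

Under these assumptions, 48 GiB stays uncompressed and 16 GiB holds 32 GiB worth of data, for 80 GiB in total. Data that compresses poorly brings the gain toward zero.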

Uncovering the Real Culprit

Now that we’ve got some context, let’s return to our investigation. Over time, while fixing some stability issues through system updates, we introduced ZRAM across our infrastructure. At first, everything seemed fine. Then our monitoring tools started picking up unusual patterns in input/output operations.

The problem wasn’t immediately obvious. Servers would run normally until they hit a specific memory condition. The critical moment came when systems reached full capacity and needed to handle both ZRAM and swap space simultaneously. We discovered that running physical RAM with swap worked fine, as did physical RAM with ZRAM. But when both ZRAM and swap were enabled, the server freeze issue occurred.

Our debug logs and data dumps revealed the full story. The kernel-level debugging showed that when both RAM (compressed with ZRAM) and swap reached capacity, the handover between them didn’t work correctly. Instead of smoothly moving data between compressed RAM and swap space, systems would freeze completely. The latency patterns in our debugging dumps pointed to a fundamental issue with this memory management approach.
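For readers who want to check whether their own hosts run the combination described above, here is a hedged Python sketch that inspects text in the format of `/proc/swaps`. It assumes ZRAM is set up as a swap device and therefore appears as `/dev/zramN`, which is a common setup but not something this post specifies about our configuration. The function names and the sample device list are hypothetical.

```python
import os

# Illustrative sample in /proc/swaps format (devices and sizes are made up).
SAMPLE_SWAPS = """\
Filename                Type        Size        Used        Priority
/dev/zram0              partition   8388604     1024        100
/dev/sda2               partition   2097148     0           -2
"""


def parse_swaps(text: str) -> list[dict]:
    """Parse /proc/swaps-style text into one dict per active swap area."""
    entries = []
    for line in text.splitlines()[1:]:  # the first line is the column header
        fields = line.split()
        if len(fields) < 5:
            continue
        entries.append({
            "device": fields[0],
            "type": fields[1],
            "size_kib": int(fields[2]),
            "used_kib": int(fields[3]),
            "priority": int(fields[4]),
        })
    return entries


def is_zram(device: str) -> bool:
    """True if the device path looks like a ZRAM block device."""
    return os.path.basename(device).startswith("zram")


def has_zram_and_other_swap(entries: list[dict]) -> bool:
    """True if a ZRAM swap device and a non-ZRAM swap area are both active."""
    has_zram = any(is_zram(e["device"]) for e in entries)
    has_other = any(not is_zram(e["device"]) for e in entries)
    return has_zram and has_other


entries = parse_swaps(SAMPLE_SWAPS)
if has_zram_and_other_swap(entries):
    print("Both ZRAM and conventional swap are active on this host")
```

On a real host, the contents of `/proc/swaps` would replace the sample text. The check only reports that both are active, not whether the host is close to the failure condition.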

By December 2024, we had enough evidence to make a decisive move. We deactivated ZRAM across all systems. The impact was immediate and clear: the unusual input/output patterns disappeared. More importantly, the system freezes that had frustrated our customers and us became dramatically less frequent.

Mission accomplished.

We hope that the insights we’ve gained about ZRAM behavior can help other providers facing similar challenges.

Looking Forward

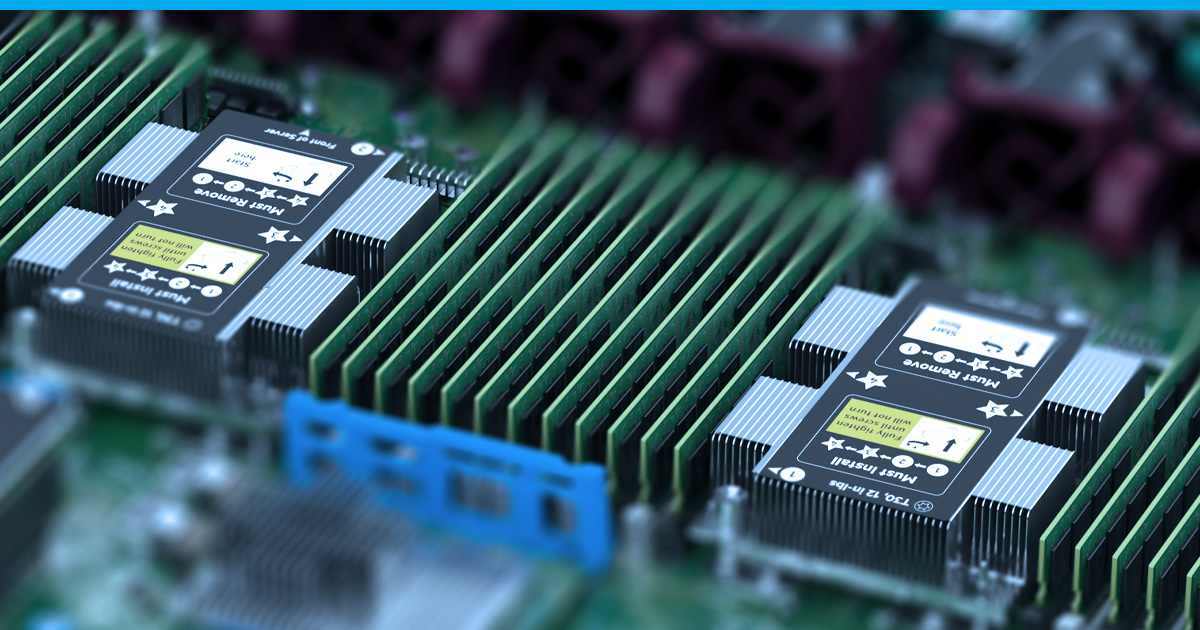

Maintaining server infrastructure stability is a continuous task. While deactivating ZRAM solved the degraded performance we faced in 2024, we’re continuing to investigate other ways to provide an even more stable environment. We’re also improving our infrastructure: we’re upgrading our vhost fleet to the latest AMD Turin processors, which offer even more efficient memory handling.

While we can’t promise you’ll never face any downtime, we can promise we’ll keep optimizing and improving so that your workloads run smoothly 24/7, 365 days a year.