Introduction

The topic of backups is more important today than ever. Every day, massive, professionally executed attacks on companies occur with the aim of encrypting data and demanding ransom money. It is not uncommon for data to be deleted directly with the aim of causing as much damage as possible. In such situations, it pays off to proactively get your backup infrastructure up to speed and prepare for such scenarios. This article describes the setup and optimization of such an environment in conjunction with Veeam Backup & Replication from Veeam Software.

Veeam as Backup Software

Those who know me know that I have been a big fan of Veeam Software and its products for years. Support for Microsoft Hyper-V was introduced with release 6.5, and I have used Veeam almost daily since then. The software is one of the very few examples on the market where a manufacturer puts so much work and time into the quality control of its product. Of course, there are always minor problems with new versions, but I have never had so many problems after installing a new version that I had to reset the software or lost data in older backups. Many functions that can now be found in other products were first developed and brought to market by Veeam. One example is the “Instant Recovery” feature, which allows you to restart one or more VMs from the backup within minutes. We will go into more detail about this feature later in this article because it can play a key role in disaster recovery.

If your company uses different backup software, this article may still be of interest to you, since many of the topics addressed are general in nature and also apply to other products. However, since I primarily work with Veeam Backup & Replication, this article focuses on that software.

A closer look at the backup storage

There are a few things to consider when choosing backup storage. Often a certain number of hard disks are combined into a RAID array and then used as a backup target. Depending on the size of the environment and the amount of data, this can be a NAS, a Dedicated Server or one or more SAN systems. This is exactly where I would like to start and discuss the different possibilities for optimization.

At Contabo you can rent one or more servers, equip them with the desired amount and size of storage and the desired RAID level, and use them as backup servers.

The size of the backup storage

The need for backup storage is influenced by many factors. These include the current size of the data, the number of restore points, the daily delta, the annual growth of the data and much more.

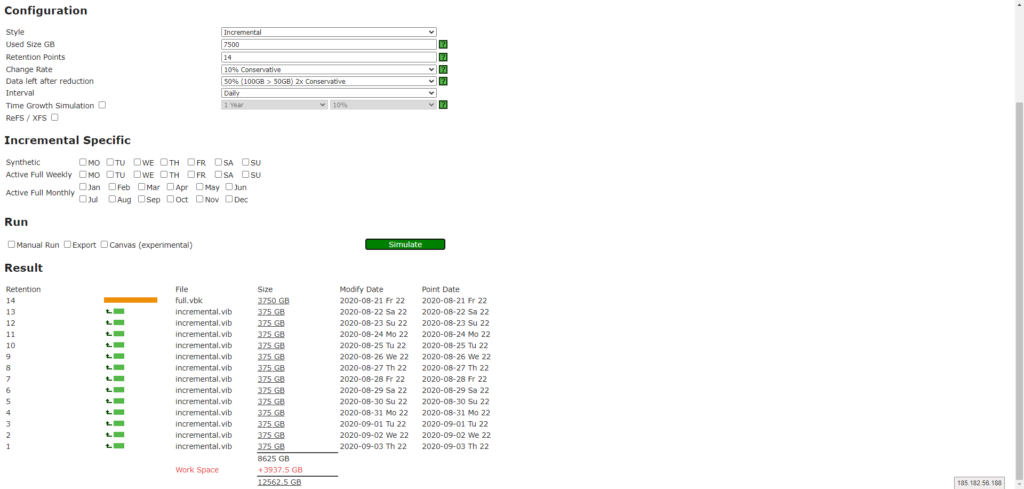

To be able to plan the need as realistically as possible, Veeam gives us a few tools. One of these tools is the “Restore Point Simulator”, which is available online at https://rps.dewin.me/ and outputs the required amount of storage for the data you enter. You can also calculate different types and combinations of backups, e.g. 14 daily restore points plus additional weekly, monthly or other periodic ones.
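As a rough illustration of how the factors above interact, here is a minimal Python sketch for one full backup plus daily incrementals. The function name, the data reduction ratio, the change rate, the growth rate and the headroom are all assumed example values, and the formula is a deliberate simplification, not the calculation the online tool performs.

```python
def estimate_repository_tb(source_tb, restore_points, daily_change,
                           data_reduction=0.5, annual_growth=0.1,
                           years=1, headroom=0.2):
    """Rough repository size in TB for one full backup plus incrementals.

    All default values are illustrative assumptions, not Veeam figures.
    """
    # Source data after the assumed yearly growth
    grown_source = source_tb * (1 + annual_growth) ** years
    # One full backup, reduced by compression/deduplication
    full = grown_source * data_reduction
    # Every further restore point stores only the (reduced) daily delta
    incrementals = grown_source * daily_change * data_reduction * (restore_points - 1)
    # Add free-space headroom on top
    return (full + incrementals) * (1 + headroom)


if __name__ == "__main__":
    # 10 TB of source data, 14 daily restore points, 5 % daily change
    print(round(estimate_repository_tb(10, 14, 0.05), 2), "TB")
```

Treat the result as a first plausibility check only; the simulator mentioned above models backup chains and retention schemes in far more detail.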

Time needed

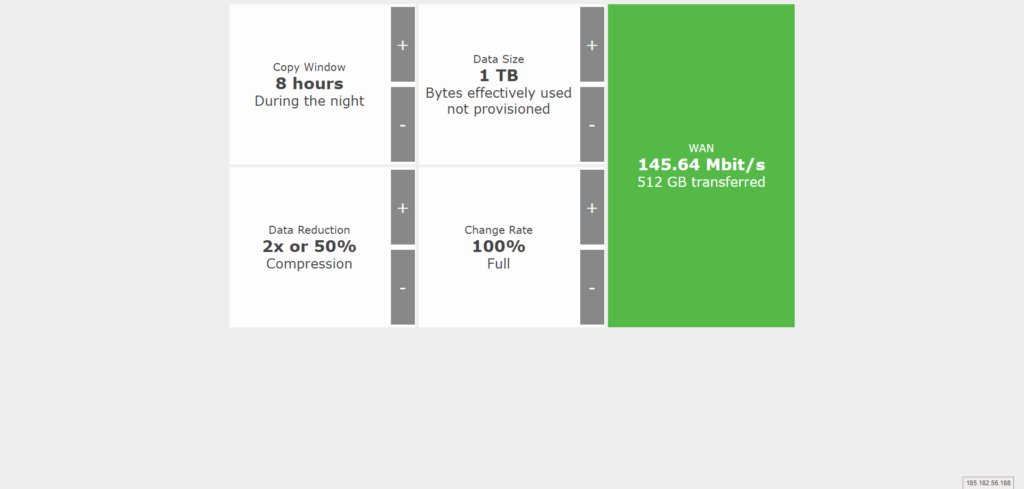

Backups often have to be finished within a certain time frame so that they do not affect an active workload, overload network paths or negatively impact daily operations. There are several things to consider here. One is the required bandwidth, which must be available. There is also a simulator for this, which can be accessed at http://rps.dewin.me/bandwidth/.
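The relationship between data volume, bandwidth and backup window can be sketched in a few lines of Python. The function names and the 70 % link efficiency are assumptions for illustration; real throughput also depends on source and target storage, compression and the number of parallel tasks.

```python
def transfer_hours(data_tb, link_gbps, efficiency=0.7):
    """Hours needed to move data_tb terabytes over a link of link_gbps.

    efficiency is an assumed fraction of the nominal bandwidth that is
    actually usable for backup traffic.
    """
    bits_to_move = data_tb * 1e12 * 8            # decimal TB to bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_move / usable_bits_per_second / 3600


def fits_window(data_tb, link_gbps, window_hours, efficiency=0.7):
    """True if the transfer finishes within the backup window."""
    return transfer_hours(data_tb, link_gbps, efficiency) <= window_hours


if __name__ == "__main__":
    for gbps in (1, 10, 25):
        hours = transfer_hours(5, gbps)
        print(f"5 TB over {gbps} Gbps: {hours:.1f} h, "
              f"fits 8 h window: {fits_window(5, gbps, 8)}")
```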

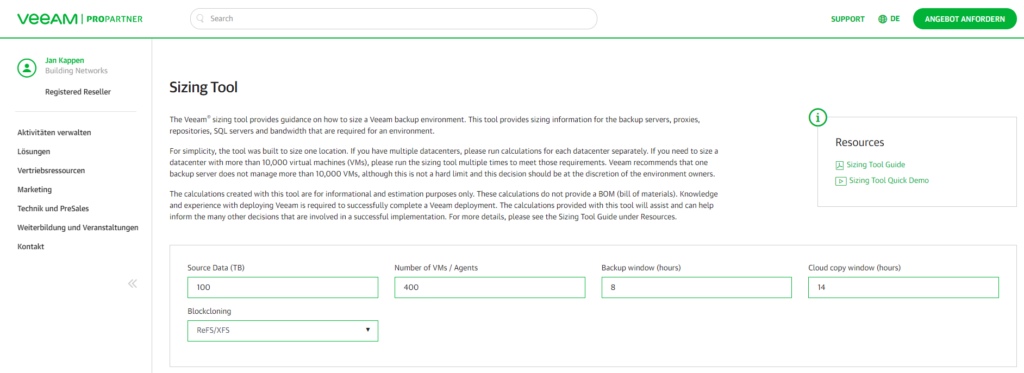

The larger the infrastructure, the more data needs to be moved. You need CPU power, memory and network bandwidth. This can also be planned in advance, but the tool is now only available via the Veeam main page after logging in: https://propartner.veeam.com/vm-sizing/

The combination of storage

When planning a backup, it is no longer uncommon for different types of storage to be combined in order to get the best features of each. Flash memory in the form of SSDs or NVMe drives is used for speed, while classic hard disk drives provide a lot of storage space. Alternatively, object storage can also be added; we will discuss this topic later in this article.

Flash memory for my backup?

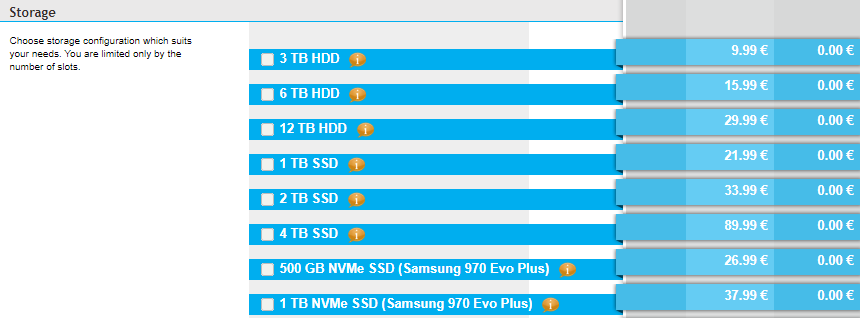

The prices for SSDs and NVMe media have continued to fall in recent years and are now at a level where they can be used as short-term storage for backups. For example, at Contabo SSD and NVMe storage are only 6.8x and 15.2x more expensive than regular HDD storage, respectively. Short-term means that the backups are only stored on this fast storage for a few days before being moved to slower storage. This ensures that restores of recent backups complete much faster, because flash storage is significantly faster than rotating spindles.
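To see why a small flash tier in front of a large HDD tier is attractive, the price factors quoted above can be put into a short Python sketch. It assumes that 6.8x and 15.2x are price multiples per TB relative to HDD, and the capacities are made-up example values; the result is in relative cost units, not in a currency.

```python
# Relative price per TB, taken from the factors quoted in the text (HDD = 1)
HDD_FACTOR = 1.0
SSD_FACTOR = 6.8
NVME_FACTOR = 15.2


def relative_cost(fast_tb, slow_tb, fast_factor=NVME_FACTOR, slow_factor=HDD_FACTOR):
    """Cost of a two-tier layout in 'HDD terabyte' units."""
    return fast_tb * fast_factor + slow_tb * slow_factor


if __name__ == "__main__":
    total_tb = 20  # assumed total backup capacity
    all_flash = relative_cost(total_tb, 0)
    tiered = relative_cost(2, total_tb - 2)  # assumed 2 TB NVMe for recent backups
    print(f"all NVMe: {all_flash:.1f} units, tiered: {tiered:.1f} units")
```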

At Contabo, you can choose between 500 GB or 1 TB NVMe Disks, depending on your requirements. As of October 2020, the prices are the following:

Veeam Instant Recovery

The “Instant Recovery” technique allows you to start VMs directly from the backup storage. This allows you to get virtual servers up and running again within minutes without having to transfer all data back to the server or SAN system beforehand. Depending on the size of VMs, this can save you several hours or even days of downtime.

Flash memory ensures that you can start more virtual servers at the same time from the backup without performance getting so bad that it is no longer possible to work with the systems. The type, number and size of the disks have a great influence on the number of VMs that can be brought online using “Instant Recovery” technology. The optimal size should be discussed and calculated during the planning phase. It is also important to determine which virtual servers you really need in an emergency and which systems are secondary or do not need to be recovered within a short time. The fewer VMs you have to restore in a time-critical manner, the smaller this flash tier can be.

Downstream storage – large and inexpensive

Experience shows that the older the backup is, the less likely it is that a recovery will be necessary. We take advantage of this and move the backups after some time automatically to a storage area that does not consist of SSDs/NVMes. If it is a normal hard disk, you get a lot of storage space at a very attractive price and can keep the backups for weeks, months or even years. It is also possible to use special dedup storage, where the data is automatically deduplicated. In this way, even with relatively little storage space, backups can still be saved or archived for years.

Of course, restores from this storage are much slower than from flash memory, but since it becomes less and less likely with increasing backup age that data must be restored, this is tolerated in most cases. If you can recover a large file from nine months ago for a colleague and it takes two hours instead of 15 minutes, they will probably be happier about that than if you have to tell them that recovery is not possible because there is not enough space for nine months of backups.
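The age-based rule described in this section can be expressed as a short Python sketch. The function name and the seven-day threshold are assumptions for illustration; this only shows the rule itself, not how Veeam moves data internally.

```python
from datetime import date, timedelta


def split_by_age(restore_points, today, fast_tier_days=7):
    """Split restore point dates into (fast tier, slow tier).

    Points younger than fast_tier_days stay on flash; older ones are
    candidates for the large, inexpensive storage.
    """
    cutoff = today - timedelta(days=fast_tier_days)
    fast = [p for p in restore_points if p > cutoff]
    slow = [p for p in restore_points if p <= cutoff]
    return fast, slow


if __name__ == "__main__":
    today = date(2020, 10, 31)
    points = [today - timedelta(days=i) for i in range(14)]  # 14 daily backups
    fast, slow = split_by_age(points, today)
    print(len(fast), "restore points on flash,", len(slow), "on HDD")
```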

The Demo Environment

I show the setup within Veeam with some screenshots from my demo environment. There are several servers; here is a short description of the systems and their equipment.

Veeam Management Server

The management system is the primary server. Windows Server 2019 Standard Edition is installed on the system, including the graphical user interface. Veeam Backup & Replication is installed in the latest version, 10.0.1.4854. The system is used for administration only; no other VMs, roles or services run on the server itself. The server has only one RAID1 array for the operating system and no other disks.

Contabo Outlet Servers are very well suited for such a configuration, as they are inexpensive and the management role does not demand much of the system’s performance. You should only make sure to activate the upgrade to 1 Gbps; 100 Mbps is no longer appropriate, even for a Veeam Management Server.

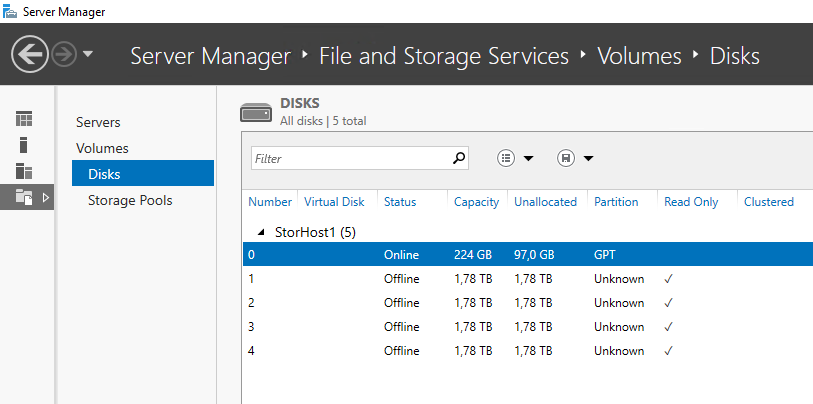

Veeam Storage Host 1

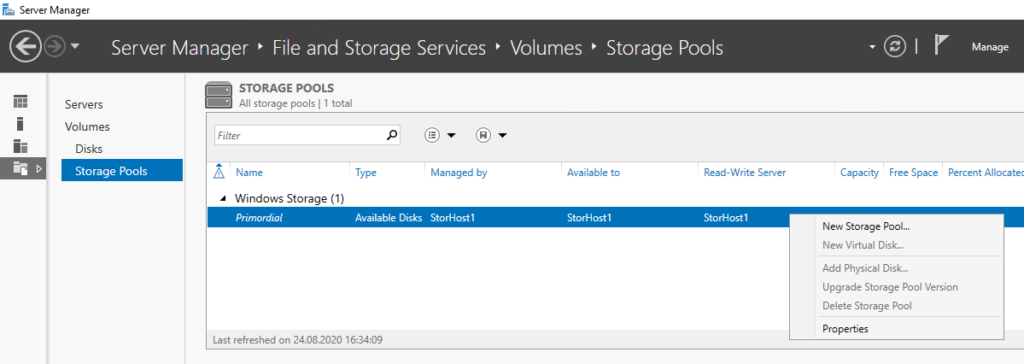

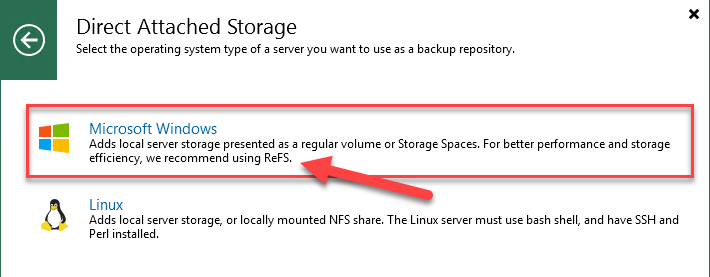

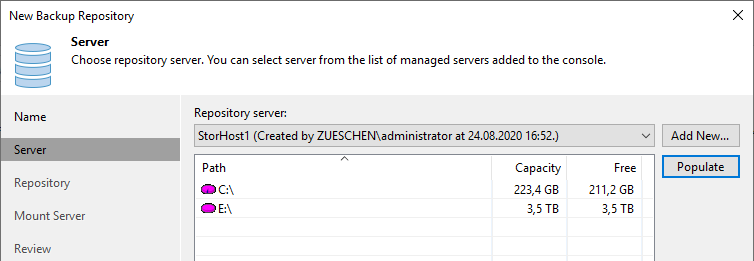

This hardware system contains two hard disks in RAID1 for the operating system (Windows Server 2019 Standard) and four NVMe drives. Since the NVMe drives are not operated on a RAID controller, they are combined in the operating system to form a storage pool using Storage Spaces technology.

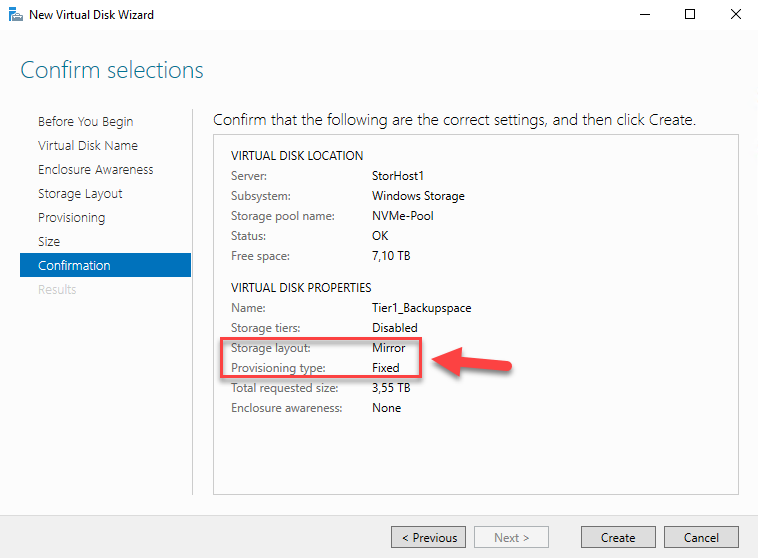

The disks are combined into a storage pool, and a volume is created on top of this pool. We make sure that “Mirror” is selected as the Layout and the Provisioning Type is set to “Fixed”. This combination offers good performance paired with redundancy. It would be very annoying if our important backup data were directly and irrevocably destroyed when one of the drives fails.

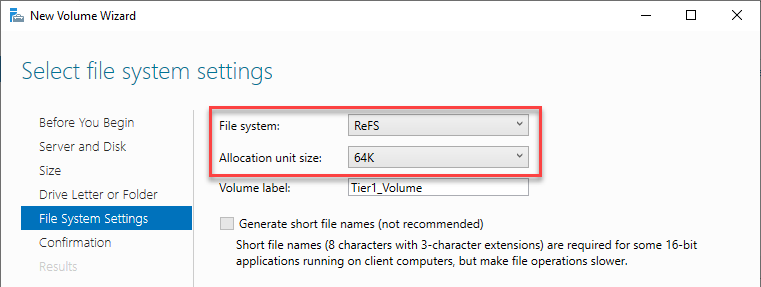

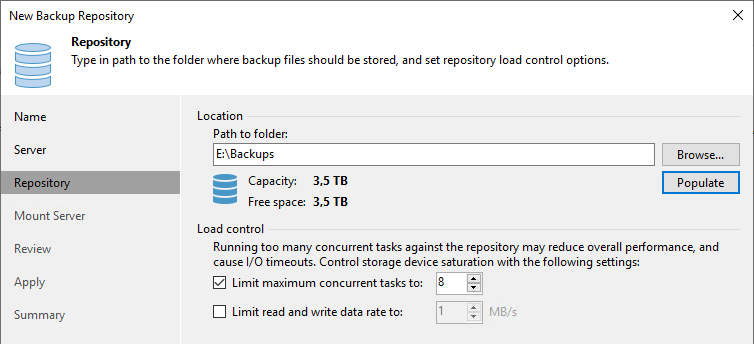

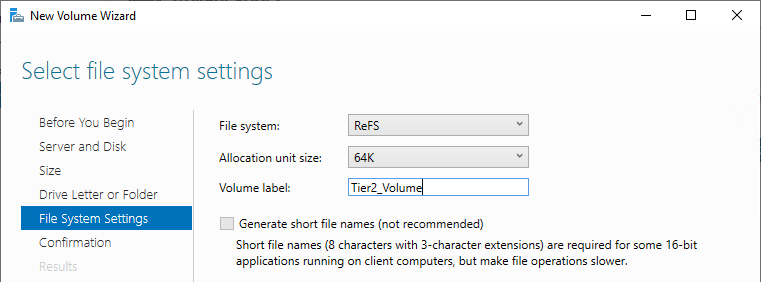

To be able to use the newly created disk, we need to put a file system on the volume. ReFS is a good choice here. Veeam works very well with ReFS and benefits from features like block cloning. Also make sure to set the Allocation Unit Size to 64K instead of leaving it at the default value.

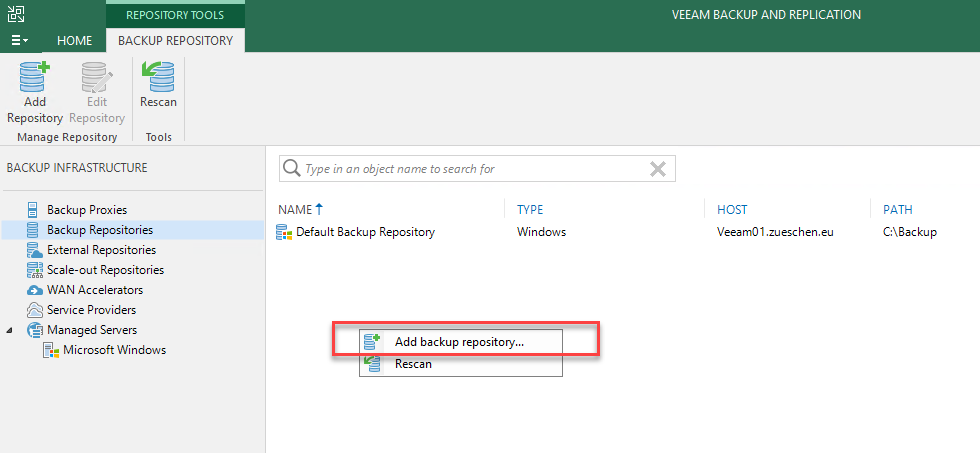

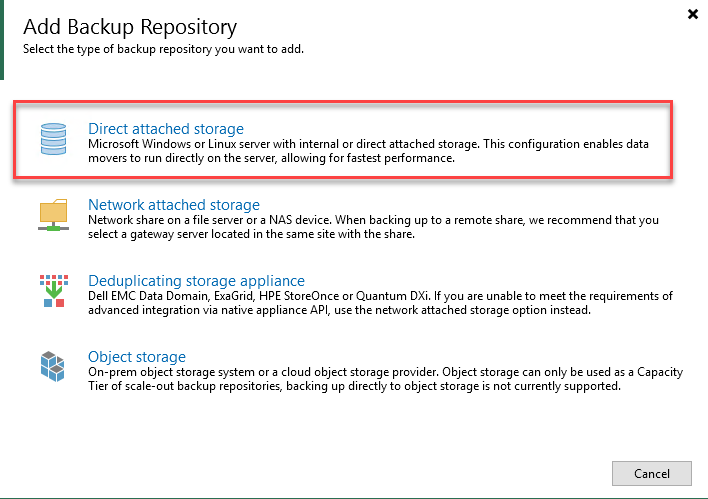

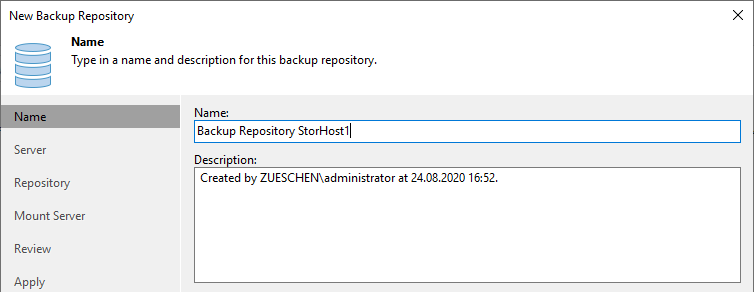

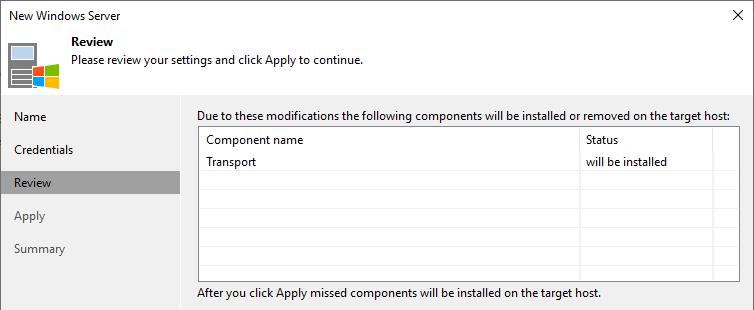

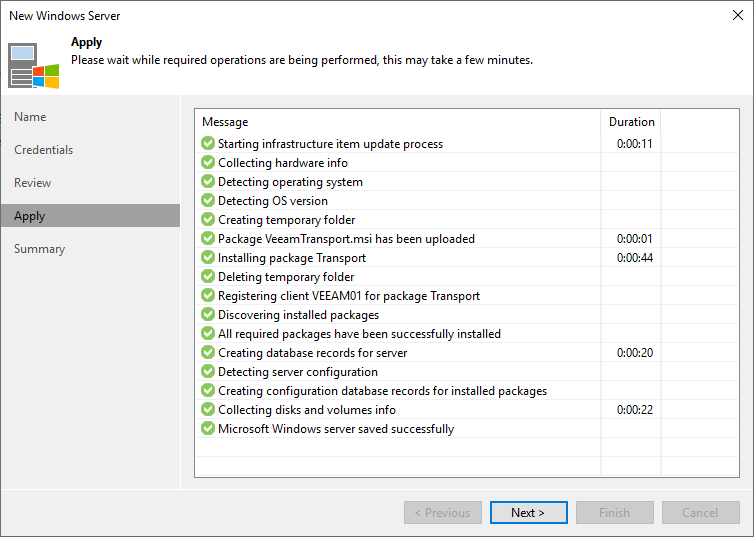

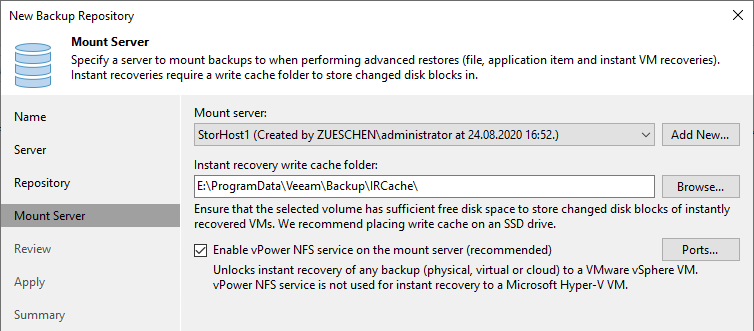

Now the server can be added as a backup repository via the Veeam console.

Contabo’s 32-core AMD Dedicated Server is recommended for that purpose. Here you have the possibility to configure SSDs and/or NVMes in addition to normal hard drives. Furthermore, the 10 Gbps Add-On can significantly increase the network bandwidth, which means more bandwidth in the backend and thus a reduction of the backup duration.

Even if you don’t need 32 cores, this system can handle many more disks than smaller systems. This makes it easy to grow in the future without adding an additional system or migrating all the existing data to a new system.

Veeam Storage Host 2

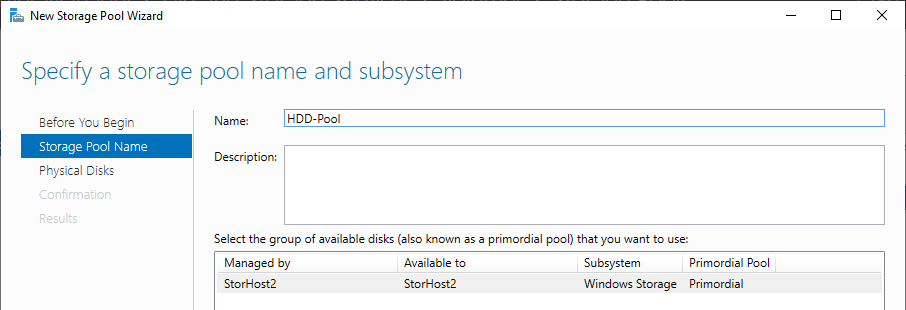

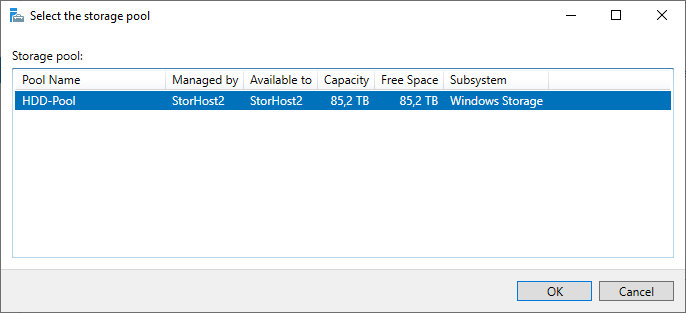

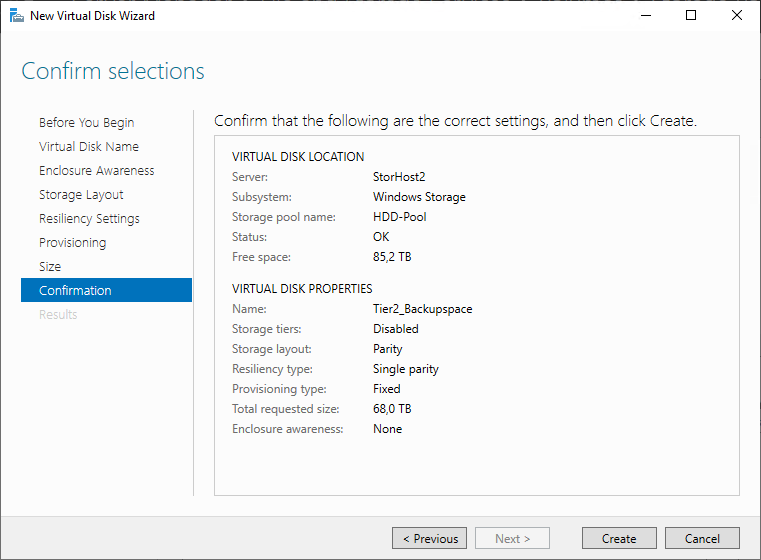

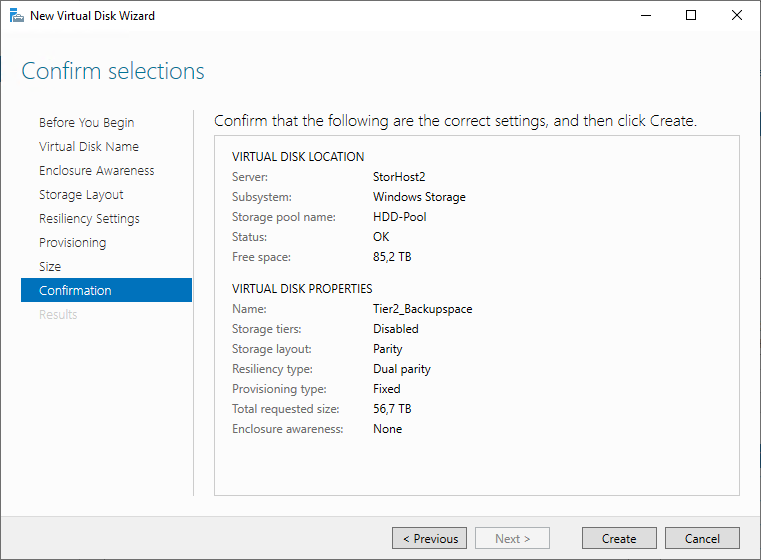

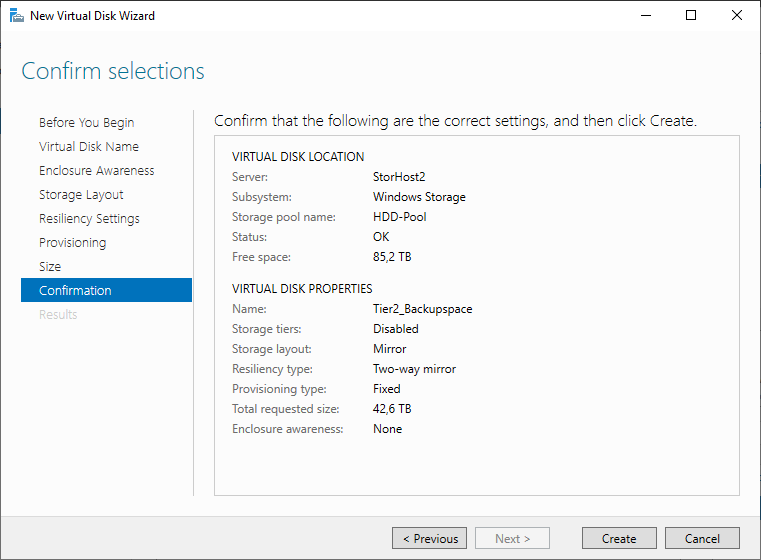

The second system for storing our data is also a hardware system. Two small hard disks are installed in RAID1, on which Windows Server 2019 Standard Edition is installed. For storing and archiving the backup data, there are currently eight hard disks available, each with 12 TB of storage capacity. You are free to choose whether you want to use a classic RAID controller or also rely on Storage Spaces technology. In my case, I don’t have a RAID controller, so I combine all disks into a storage pool to create a virtual disk.

I also use a mirrored disk here to have a small speed advantage over parity. If this is not important, the usable amount of storage space can be further increased by “Single Parity” (like RAID5) or “Double Parity” (similar to RAID6).
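The trade-off between the layouts can be made concrete with a small Python sketch. It uses the RAID-like approximation from the comparison above (two-way mirror, one or two disks' worth of parity), which is an assumption for illustration; the usable capacity that Storage Spaces actually reports can be lower, depending on column count and pool reserves.

```python
def usable_tb(disk_count, disk_tb, layout):
    """Approximate usable capacity in TB for a pool of identical disks."""
    raw = disk_count * disk_tb
    if layout == "mirror":
        # Two-way mirror: every block is stored twice
        return raw / 2
    if layout == "single_parity":
        # RAID5-like: one disk's worth of capacity holds parity
        return raw - disk_tb
    if layout == "double_parity":
        # RAID6-like: two disks' worth of capacity hold parity
        return raw - 2 * disk_tb
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    for layout in ("mirror", "single_parity", "double_parity"):
        print(layout, usable_tb(8, 12, layout), "TB")
```

For the eight 12 TB disks used here, this approximation gives 48 TB for a mirror, 84 TB for single parity and 72 TB for double parity.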

The volume is also formatted with ReFS and a 64K allocation unit size for the reasons mentioned above.

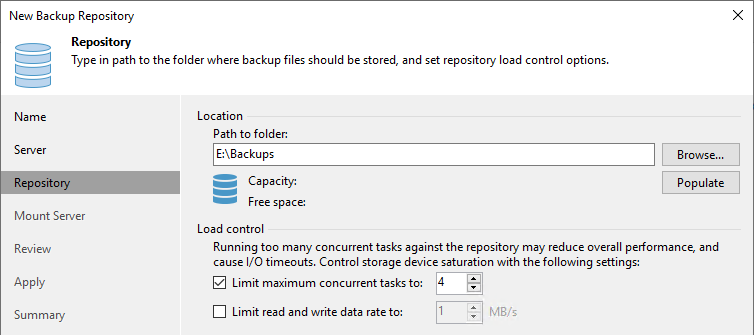

Now the storage is added as a backup repository in Veeam. At this point I make sure that the number of parallel streams is lower than for the NVMe volume, since hard disks cope less well with many concurrent streams than NVMe drives.

For the reasons already mentioned, a 32-core AMD Dedicated Server is also recommended here.

Hyperv01

This is our Hyper-V host on which the virtual systems are operated. The backup source is not limited to a single host; further standalone hosts, one or more failover clusters, VMware vSphere or Nutanix can be used as well. The number of servers is almost unlimited; you only have to consider the amount of resources needed for the Veeam infrastructure.

Here you must select one or more servers of the “Dedicated” series that meet your requirements. The focus may be on memory, on CPU or on I/O performance, depending on the type and amount of software used. No general statement can be made here; this must be clarified in advance.

The network bandwidth issue

The network connection for the Veeam Management Server does not need much bandwidth; 1 Gbps is generally sufficient as a minimum. This is because no bandwidth-intensive processes run on this server; it “only” delegates the tasks to the other systems.

The other systems, i.e. the storage servers and the hypervisor, should have the highest possible bandwidth. It often makes sense to set up a dedicated backup network in order to have the highest possible bandwidth available and to avoid stressing or even disturbing the production network. Bandwidths of 2x 10 Gbps are mandatory here, and I usually recommend going straight to 25 Gbps. The price difference between 10 and 25 Gbps is almost non-existent, so it almost never makes sense to work with 10 Gbps cards. The exact need cannot be stated in general terms, but in principle the following applies: the more hypervisors are in use, the higher the need for bandwidth and the more you should plan for a dedicated backup network.

If you need such a configuration, please contact Contabo support, where special requests can be submitted and implemented.

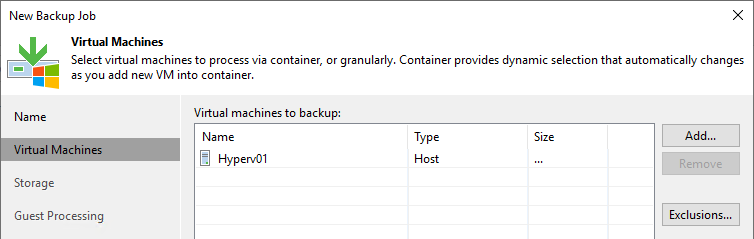

Integration in Veeam

After all servers have been installed, configured and added to Veeam, we can now start with the setup. To do this, we add our Hyper-V host to the backup job. Including the entire host means that all VMs on the system are backed up, including any that are added later.

When using more than one backup storage, there is more than one way to automate this. However, this depends heavily on the type of backup storage that is used. For the setup used here, a backup copy job must be used, since we are working with two “normal” ReFS volumes.

The use of a backup copy job

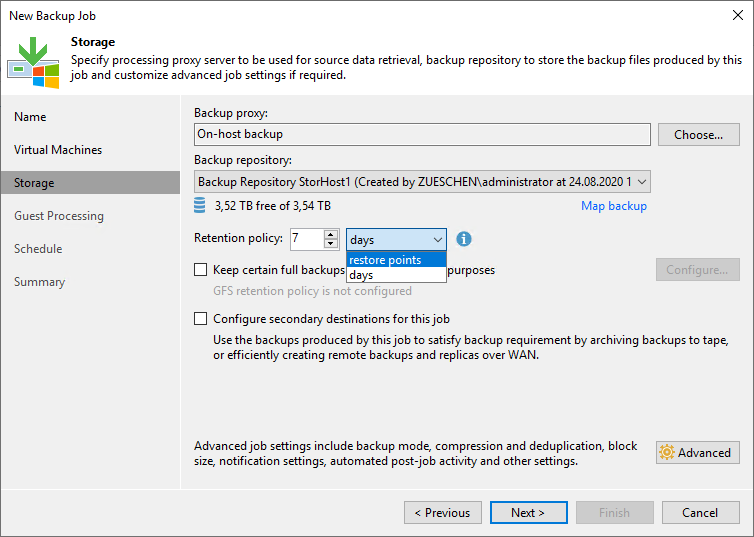

With this type of additional archiving, the first step is to create a new backup job. The NVMe store is selected as the backup repository; in my case this is StorHost1. Now I define the maximum number of restore points or, alternatively, the maximum number of days my backups may be kept on this storage.

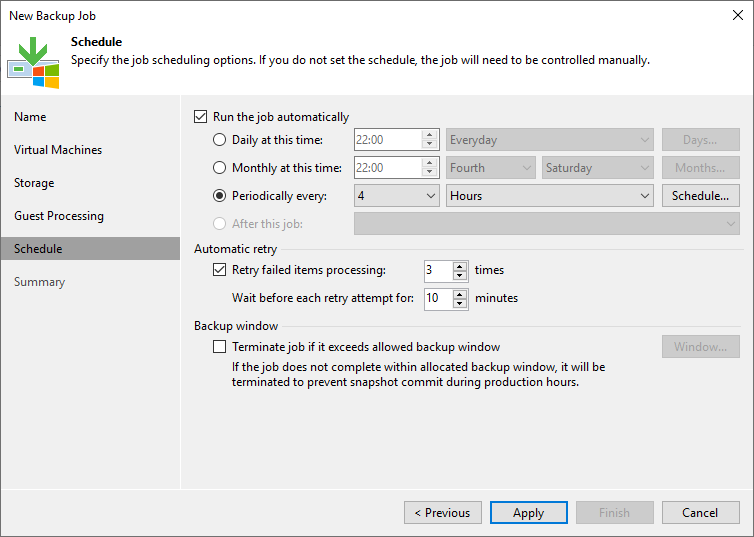

I configure 7 days to be able to keep a full week. I do not configure a second target in this step; this is only possible after the primary job has been created. I will not go into the advanced settings at this point; they can be adjusted as needed. Under “Schedule”, the interval in which backups are created must be set. This depends entirely on your needs; just make sure you consider the amount of NVMe storage you have available. Too many backups create too much data and may fill up the storage.

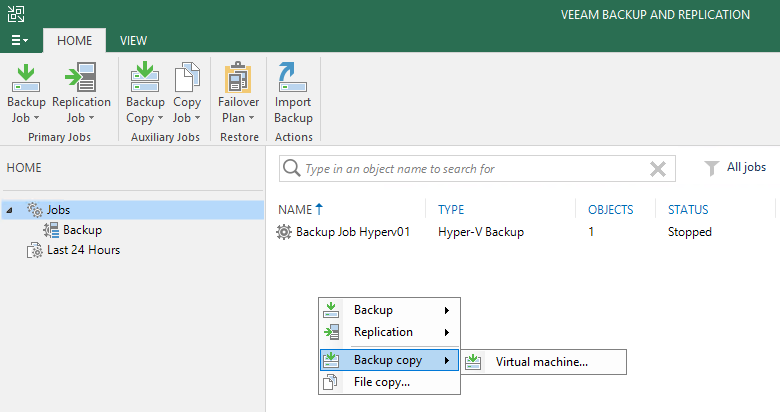

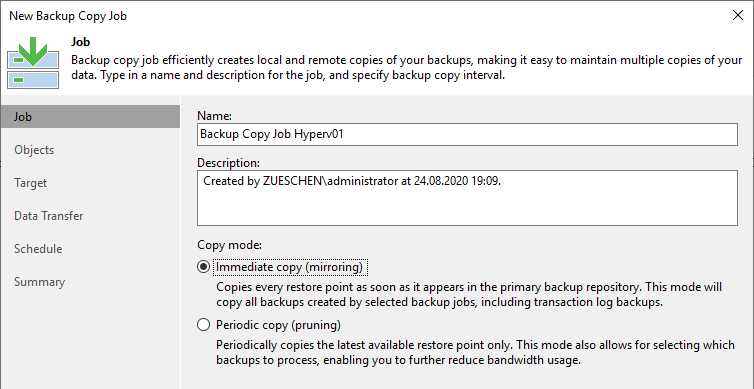

Once the primary job has been created, we can now make a copy of the data to the HDD storage. To do this, we now create a “Backup Copy Job”.

Here we can choose whether the copy is made immediately after the backup job is completed or at a defined time.

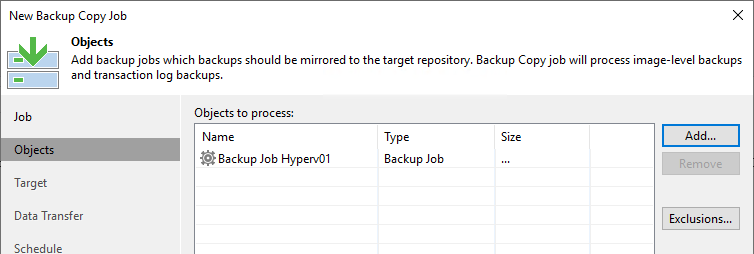

As the source we can now specify the backup job we just created.

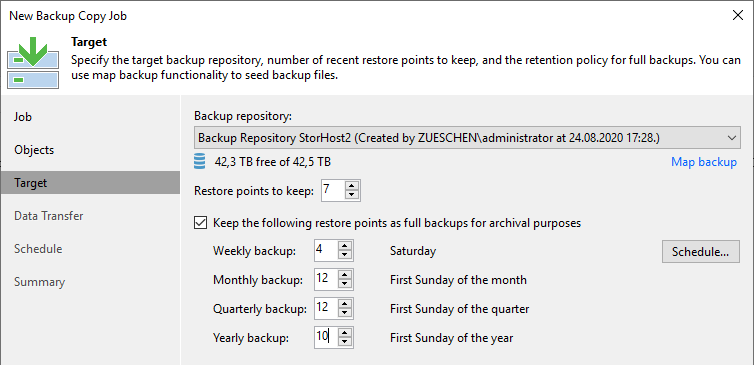

As the target for the backup data we now select StorHost2 with the HDD storage. In addition to the normal restore points, we can keep additional backups for longer periods at this location, as shown in the following screenshot.

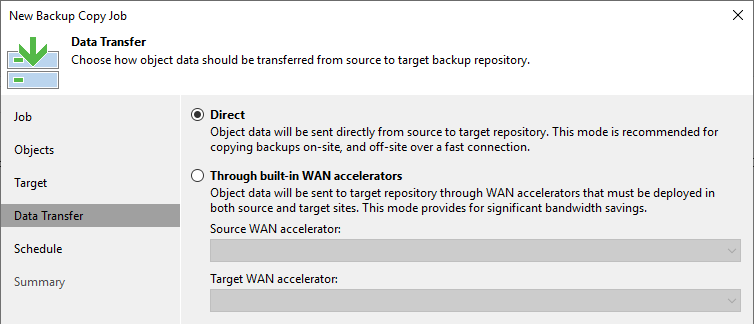

If desired, we can send the data through a WAN accelerator during the transfer, but this is not useful in our case, since the storage systems are, in the best case, connected directly at 10 Gbps or more.

If we have enough performance in the backend or even our own backup network, we can run the transfer between StorHost1 and StorHost2 at any time. If there are possible bottlenecks, we should limit the transfer to a time in the evening or at night. However, this conflicts with the “immediate backup mirroring” option, which brings us back to the benefit of a dedicated backup network.

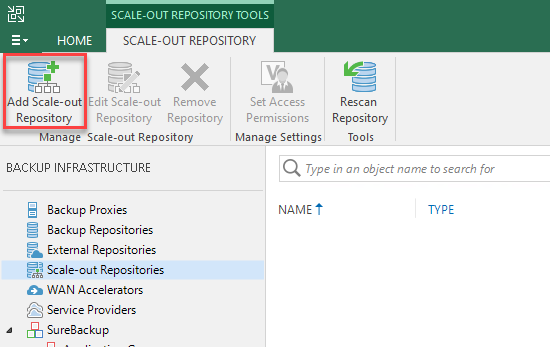

The use of a scale-out backup repository

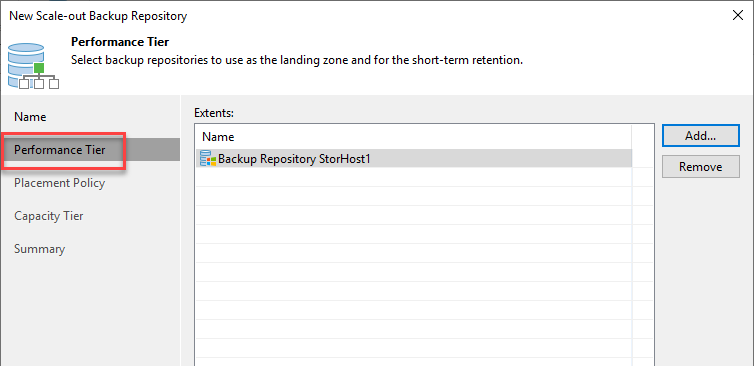

The second option is a scale-out backup repository. With this technique two types of repositories are linked together. The setup is also done via the Veeam Management Console.

After we have assigned a name, the first step is to select the “Performance Tier”. In my case this is the NVMe storage in StorHost1.

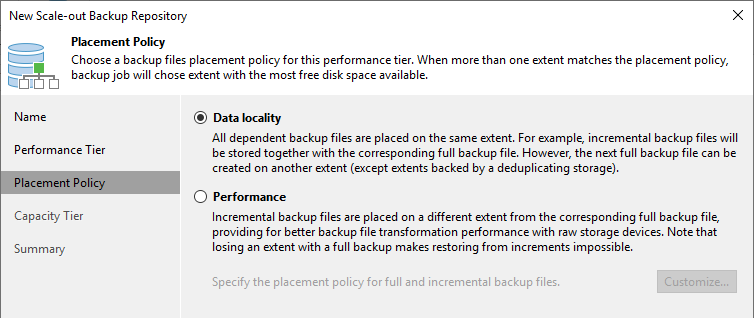

Now you can choose whether the backup data of a VM is always stored on the same repository or whether the backup should be as fast as possible, with the data stored “somewhere”. If there is only one repository in the performance tier, this setting has no effect; it is only relevant for two or more repositories. The advantage of the “Data locality” setting is that the backup data of a VM is always stored on the same repository and is therefore either completely available or, if that repository is down, not available at all. With the “Performance” setting, the files of a backup chain are spread across the repositories that are currently free and available. If one of these repositories is unavailable, part of the backup chain may be missing, and a recovery will then not work.
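The difference between the two settings can be illustrated with a simplified Python model. The function names, the extent names and the round-robin distribution (standing in for "whichever repository is currently free") are assumptions for illustration and do not reproduce Veeam's actual placement logic.

```python
def place_chain(files, extents, policy):
    """Return {backup file: extent} for the backup chain of one VM."""
    if policy == "data_locality":
        # The whole chain stays on a single extent
        return {f: extents[0] for f in files}
    if policy == "performance":
        # Simplified: spread the files round-robin across all extents
        return {f: extents[i % len(extents)] for i, f in enumerate(files)}
    raise ValueError(f"unknown policy: {policy}")


def chain_restorable(placement, offline_extent):
    """A chain can only be restored if none of its files is on the offline extent."""
    return all(extent != offline_extent for extent in placement.values())


if __name__ == "__main__":
    chain = ["full.vbk", "inc1.vib", "inc2.vib"]  # example file names
    extents = ["extent1", "extent2"]              # hypothetical repositories
    for policy in ("data_locality", "performance"):
        placement = place_chain(chain, extents, policy)
        for offline in extents:
            print(policy, "with", offline, "offline -> restorable:",
                  chain_restorable(placement, offline))
```

In this model, the data locality chain survives the loss of the other extent untouched, while the distributed chain becomes unusable as soon as either extent is missing.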

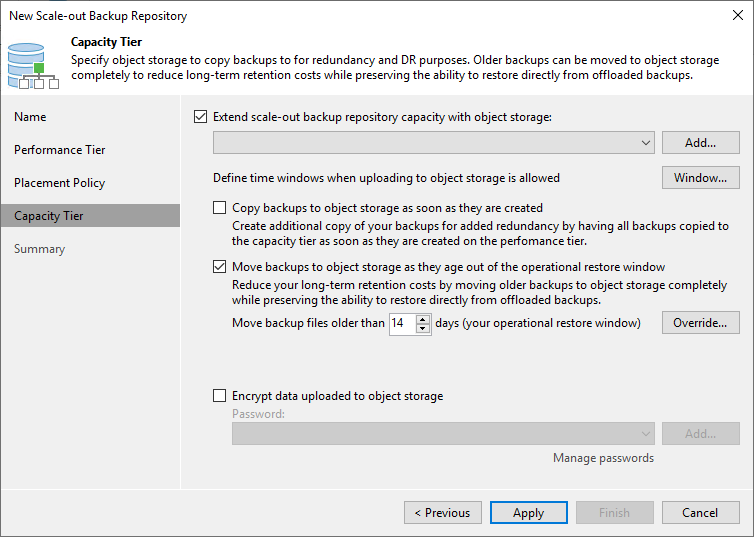

Under “Capacity Tier” we can now select the downstream storage to which the data will be moved after a defined period.

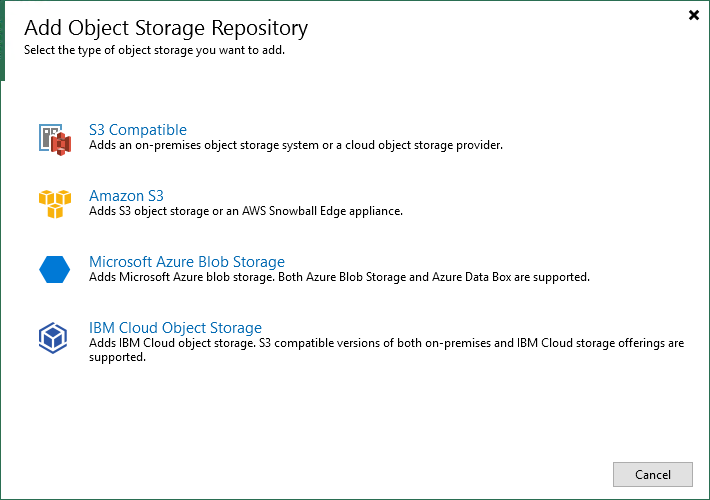

If we select this option, we must specify an object storage repository. If none is available yet, we can add one using “Add”. A click on this option shows the available storage types.

We see here that classic storage, such as our StorHost2 with many hard drives, is not supported.

I use this feature for some customers and for myself to move the data to Azure Blob Storage. Depending on the retention period and the size of the backups, the costs for this stay within reasonable limits, and there is no need to operate a separate object storage system. If this is not possible or not desired, your own object storage can be added instead. There are several manufacturers of storage systems on the market that offer S3-compatible storage that could be used at this point.

Differences and dependencies

As we have seen in the previous two sections, both types of setup depend on the hardware used. Servers with internal disks as well as NAS and SAN storage cannot be included as a “capacity tier” in a scale-out repository. If this type of storage is used, a traditional backup copy job must be used. It is important to note that there is basically no disadvantage to using the backup copy job variant; it is just a different type of setup.

On the other hand, if you have the option of working with a scale-out repository, this allows you to use object storage. Among other things, this can ensure that data in this storage cannot be deleted deliberately or accidentally, since objects can be write-protected and thus cannot be overwritten by ransomware or human error.

Please note that a scale-out repository requires at least the Enterprise Edition of Veeam. In this edition you can add a maximum of two normal repositories; only in the Enterprise Plus Edition can you add and use any number of repositories.
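The idea behind write-protected objects can be shown with a toy in-memory model in Python. The class and method names are hypothetical, and this is neither an S3 client nor a Veeam feature; it only demonstrates that an object under retention rejects overwrites and deletions until its lock has expired.

```python
from datetime import datetime, timedelta


class WriteOnceStore:
    """Toy object store in which every object is locked for a retention period."""

    def __init__(self):
        self._objects = {}  # key -> (data, locked_until)

    def put(self, key, data, now, retain_days):
        existing = self._objects.get(key)
        if existing is not None and now < existing[1]:
            raise PermissionError(f"{key} is locked until {existing[1]}")
        self._objects[key] = (data, now + timedelta(days=retain_days))

    def delete(self, key, now):
        _, locked_until = self._objects[key]
        if now < locked_until:
            raise PermissionError(f"{key} is locked until {locked_until}")
        del self._objects[key]

    def get(self, key):
        return self._objects[key][0]


if __name__ == "__main__":
    store = WriteOnceStore()
    start = datetime(2020, 10, 1)
    store.put("backup-001", b"restore point", now=start, retain_days=30)
    try:
        # Simulates ransomware trying to overwrite the backup one day later
        store.put("backup-001", b"encrypted", now=start + timedelta(days=1), retain_days=30)
    except PermissionError as error:
        print("overwrite rejected:", error)
```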

Result

With the help of Veeam and different types of backup storage, many advantages of the respective storage types can be combined. Fast storage in the form of SSDs or NVMe drives provides a considerable performance boost during a recovery and significantly increases the number of VMs that can be booted from backup when recovering data via “Instant Recovery”. Cheaper but significantly slower storage in the form of hard disks provides enough space at a correspondingly low price per GB, so that your data can still be stored for several months or years.

In combination with object storage, the transfer of data to a cloud provider can be simplified and automated. Alternatively, an S3-compatible storage can be set up in your own company to effectively protect data from ransomware or unauthorized access.

By setting up a site-to-site VPN between your environment and the Contabo Data Center, you can access your data at any time and duplicate it to your own storage by means of a backup copy job. In this way you always have a copy of your data. Alternatively, you can mirror the data to another Data Center to achieve geo-redundancy.

Comprehensive and clean planning in advance allows you to get the best out of your environment, minimize backup times and keep the burden of backups during day-to-day business as low as possible.